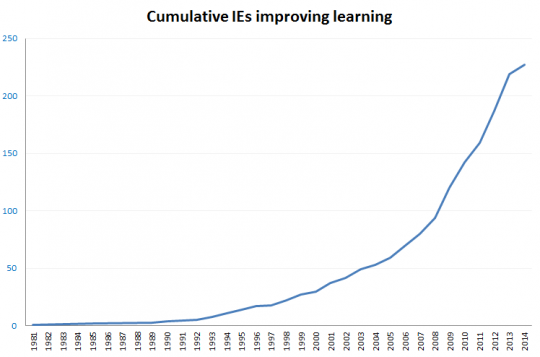

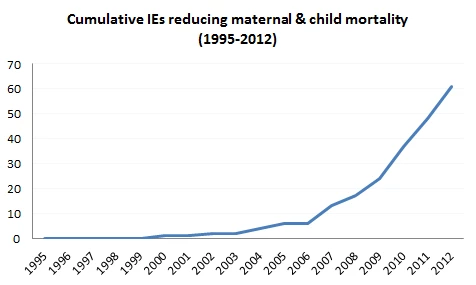

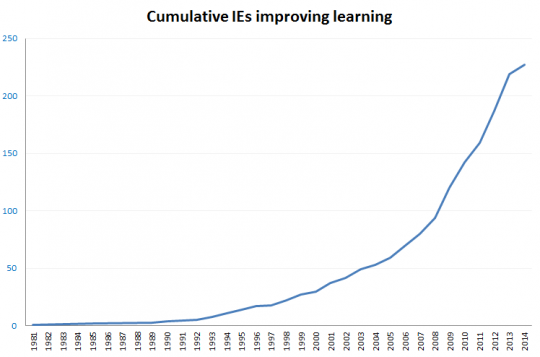

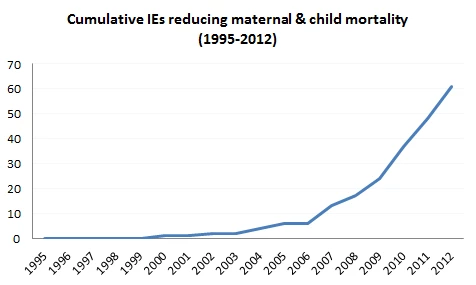

Impact evaluation (IE) evidence in developing countries is growing. In case we need evidence of that, here are two pieces: First, the cumulative number of IEs (by publication date) on improving learning, drawn from an array of systematic reviews published over the last couple of years and compiled by a colleague and me. Second, the cumulative number of IEs on reducing maternal and child mortality, from a recent systematic review (IEG 2013). The evidence is growing fast.

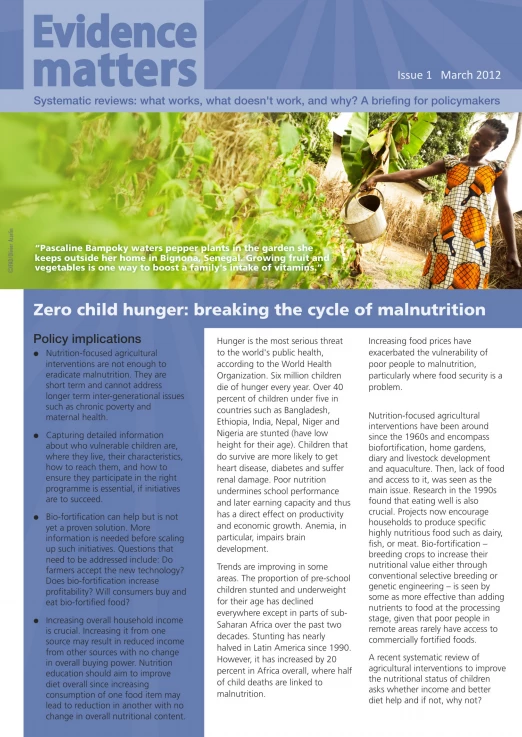

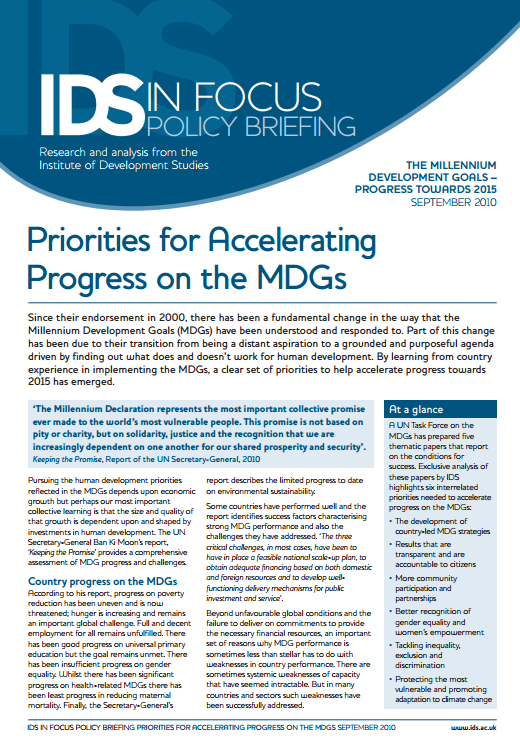

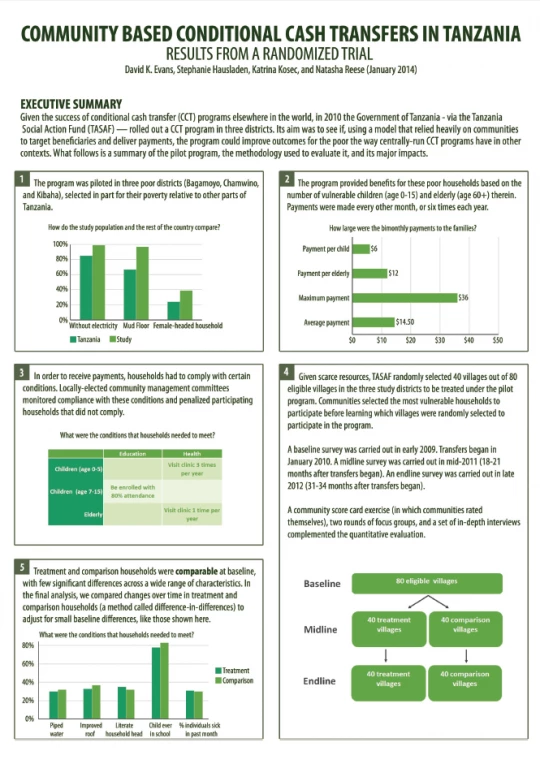

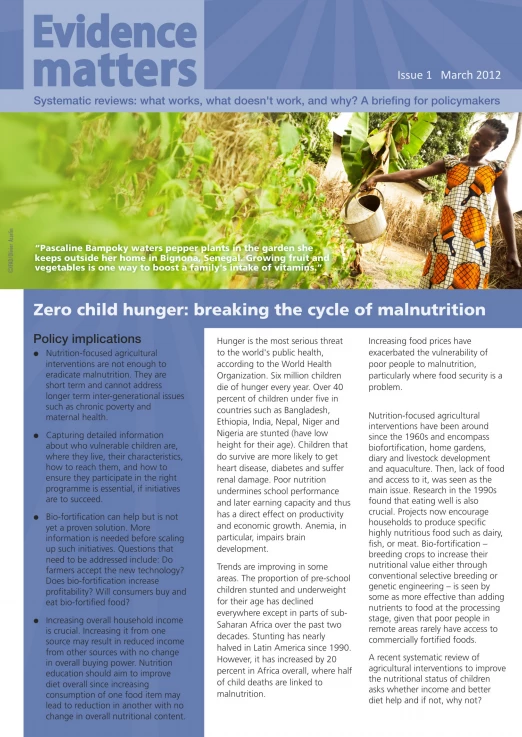

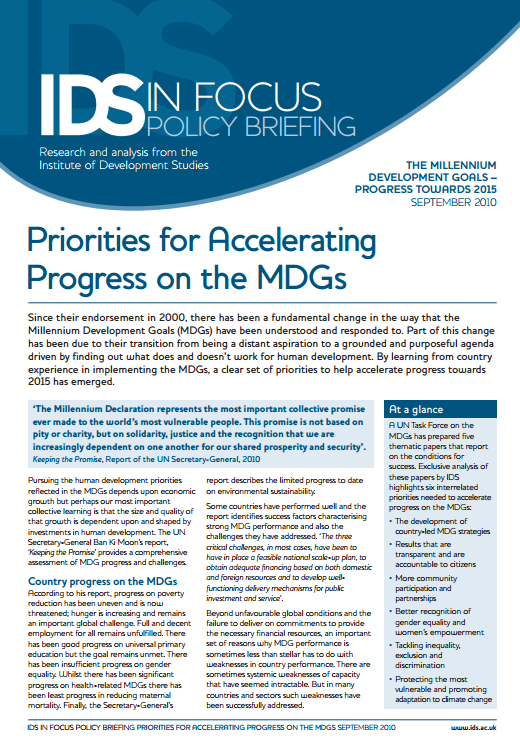

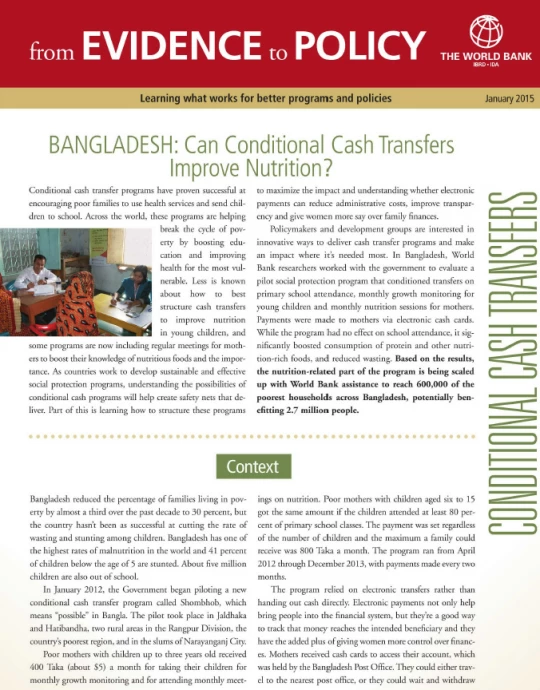

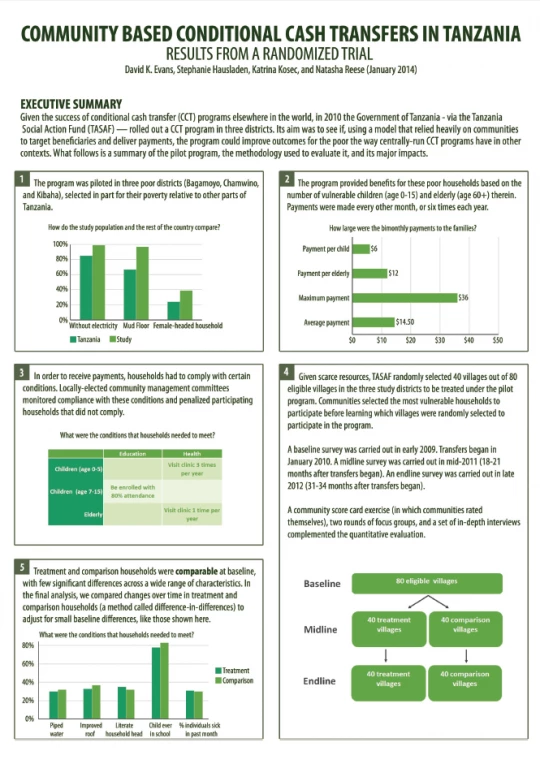

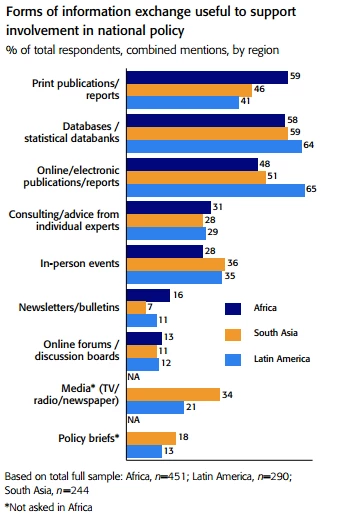

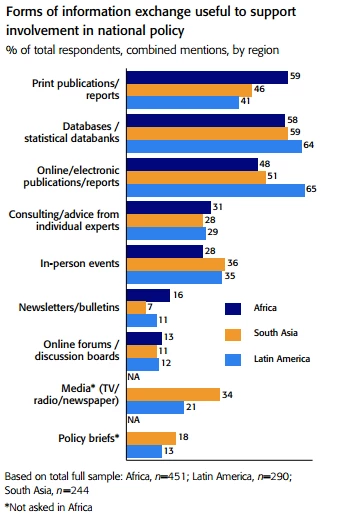

One of the principal goals of all this research is to influence policy. (As Langou & Weyrauch (2013) put it, this has been one of the goals of social science for just “the last couple of centuries.”) There are lots of instruments for achieving this influence, but a very common one is the “policy note.” A policy note has figured into the dissemination plan for almost every impact evaluation proposal I have either written or reviewed. The intuition is straightforward: Policymakers don’t have time to read a full academic paper, much less a sheaf of them, so we’ll write a brief, non-technical document characterizing the main results with implications for policy.

Do they make any difference?

Having made and read all these promises to produce policy notes, I was excited to encounter an experiment to test whether these notes have an impact (published study; earlier, ungated report). Masset et al. (2013) emailed 75,000 people from development agency mailing lists to invite them to participate. About one percent responded by filling out the baseline survey. So from an external validity perspective, this is an extremely select group of individuals, potentially highly interested in the topic or with a low value of time. Still, I think the experiment provokes some interesting ideas about policy communication of impact evaluation results.

807 participants (the one percent) were emailed one of the following: (1) a policy brief, (2) a policy brief with an opinion piece from the director of a development organization (appeal to authority), (3) a policy brief with the same opinion piece, this time credited to a research fellow (pure opinion), and (4) a placebo policy brief (i.e., a brief about a totally unrelated topic and so unlikely to affect beliefs on the topic under study). They were also emailed a link to fill out a follow-up survey within seven days. Two subsequent emails (over the course of three months) invited participants to fill in additional follow-up surveys.

The five-page brief in question summarized a systematic review on “the effectiveness of agricultural interventions that aim to improve nutritional status of children.” The review had three findings, as summarized in Masset et al.’s experiment:

- “Food-based agricultural interventions effectively increase the production and consumption of the food they promote, and there is some evidence that this leads to higher vitamin A intake.”

- “There is no evidence of effect of these interventions on nutritional status of children (anthropometry).”

- “Studies assessing this evidence are few and often relying on weak methodologies.”

[ LINK]

Placebo Policy Brief

[ LINK]

At the baseline and again at follow-up, participants were asked whether they believed certain interventions were effective at improving the nutritional status of children and also how strong they believed the evidence was for that intervention. (“I don’t know” was an option.) They were asked these questions for the four interventions included in the policy brief (e.g., biofortification and home gardens) as well as four unrelated interventions (e.g., cash transfers and deworming).

Attrition was about 50% for the first follow-up and about 70% for the final follow-up (three months later). Again, this makes interpretation of results challenging, moving them from the convincing category to the interesting category. That said, the authors show that there isn’t differential attrition across treatment groups. It looks like non-attritors are a little more likely to have opinions about the interventions covered than attritors (e.g., 49% of non-attritors versus 43% of attritors had an opinion about the strength of evidence on biofortification), as well as more likely to believe the interventions are effective (among those who have an opinion at baseline), although these differences are never significant.
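For readers who want to see what a differential attrition check can look like, here is a minimal sketch. It compares attrition rates across arms and computes a Pearson chi-square statistic by hand. The arm names and counts are invented for illustration (four arms of 200 people, not the study's actual numbers), and I am not claiming this is the test the authors ran.

```python
# Hypothetical counts, invented for illustration only.
assigned = {"brief": 200, "authority": 200, "opinion": 200, "placebo": 200}
completed = {"brief": 100, "authority": 104, "opinion": 98, "placebo": 98}

# 5% critical value of the chi-square distribution with 3 degrees of freedom
# (number of arms minus one).
CRITICAL_5PCT_DF3 = 7.815


def attrition_rates(assigned, completed):
    """Share of each arm that did not complete the follow-up survey."""
    return {arm: 1 - completed[arm] / assigned[arm] for arm in assigned}


def chi_square_statistic(assigned, completed):
    """Pearson chi-square for an (arms x {stayed, left}) contingency table."""
    overall_stay = sum(completed.values()) / sum(assigned.values())
    stat = 0.0
    for arm, n in assigned.items():
        expected_stay = n * overall_stay
        expected_leave = n * (1 - overall_stay)
        observed_stay = completed[arm]
        observed_leave = n - observed_stay
        stat += (observed_stay - expected_stay) ** 2 / expected_stay
        stat += (observed_leave - expected_leave) ** 2 / expected_leave
    return stat


rates = attrition_rates(assigned, completed)
stat = chi_square_statistic(assigned, completed)
for arm, rate in rates.items():
    print(f"{arm}: attrition {rate:.0%}")
print(f"chi-square = {stat:.2f}, differential attrition at 5%: {stat > CRITICAL_5PCT_DF3}")
```

With these made-up counts the statistic is 0.48, far below 7.815, so the sketch would not flag differential attrition. Note that a check like this says nothing about whether attritors differ from non-attritors overall, which is the separate concern raised above.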

Using a difference-in-differences regression analysis, the authors find that receiving the brief significantly increased participants’ likelihood of having any opinion at all about the strength of the evidence on the topics under review and, for biofortification, the likelihood of having an opinion about the actual effectiveness of the intervention. (There was no impact on the unrelated interventions, like deworming.) There are no significant differences across the treatment groups.
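The logic of difference-in-differences can be sketched with a raw two-by-two comparison: the change in the treated group minus the change in the placebo group. The authors use a regression, which this sketch does not reproduce, and the 0/1 responses below are invented toy data (1 means the respondent holds an opinion, 0 means “I don’t know”).

```python
from statistics import mean


def diff_in_diff(treat_pre, treat_post, control_pre, control_post):
    """Return (treated change) minus (control change) in the mean outcome."""
    treated_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Invented toy responses, not data from Masset et al.
brief_baseline = [1, 0, 0, 1, 0, 0, 1, 0, 0, 1]    # 40% hold an opinion
brief_followup = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1]    # 70% hold an opinion
placebo_baseline = [1, 0, 0, 1, 0, 1, 0, 0, 1, 0]  # 40% hold an opinion
placebo_followup = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]  # 50% hold an opinion

effect = diff_in_diff(
    brief_baseline, brief_followup, placebo_baseline, placebo_followup
)
print(f"Difference-in-differences estimate: {effect:.2f}")
```

In this toy example the share with an opinion rises by 30 percentage points in the brief group and by 10 points in the placebo group, so the estimate is 20 points. Subtracting the placebo group's change nets out anything that would have shifted opinions even without the brief, such as simply being surveyed twice.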

In terms of how the brief actually affected beliefs (rather than just encouraging people to have them), there is only an impact for biofortification: Participants slightly downgraded their view on the strength of evidence, and – in two of three treatment groups – they revised downward their view on the actual effectiveness of biofortification. It’s unsurprising that the effect would be concentrated on this topic: Biofortification is the only intervention highlighted in the policy implications on the first page of the policy brief: “Bio-fortification can help but is not yet a proven solution.” This (limited) impact is driven by participants who had no expressed opinion on the topics before reading the brief.

So in short, the authors find that the brief helped people who didn’t have an opinion on the topic to form one, specifically one in line with the findings of the brief.

Not all policy notes are created equal

I’d love to see this kind of experiment done (with a lower attrition rate) with different kinds of briefs and other communication tools. I briefly reviewed a few policy note series: the World Bank’s From Evidence to Policy, Finance & Private Sector Development Impact, and DIME Briefs; the Poverty Action Lab’s Policy Briefcase series; the joint 3ie and Institute of Development Studies Evidence Matters series; and one stand-alone note from my own work. As you can see from the examples below, all between 2 and 5 pages, there are a lot of similarities (attractive photo on half of them, lots of text on most of them). But how the information is presented is pretty diverse: A couple present the results through graphs or figures, others highlight key findings, others do neither (for the truly dedicated reader). Some policy notes have weak findings (“We reviewed the evidence and there isn’t much,” as in the subject of the experiment above); others have a “sharp” finding (such as “Cash transfers improve nutrition” or “Cash transfers have impacts that persist after the program”).

Which design makes people more likely to read or share or understand or believe the results? We don’t really know.

[ LINK]

[ LINK]

[ LINK]

[ LINK]

[ LINK]

[ LINK]

On the other hand, maybe “Which of these models works?” is the wrong question. A survey of about 500 policy stakeholders (government, non-government organization, private sector, media, etc.) conducted between 2009 and 2011 in Latin America and South Asia placed policy briefs near the bottom of the list of forms of information exchange useful for supporting policy.

So as long as we (as a development research community) are going to keep writing these briefs about our rigorous impact evaluations, it would be valuable to provide some rigorous research on the effectiveness of the resulting products as well. It would also be wise to take the policy brief with you to your next in-person event or when providing in-person advice.
