Every year, a child lives 8,760 hours (that’s 24 hours times 365 days). Let’s say she sleeps 9 hours a night. That leaves 5,475 hours awake. How many of those does she spend in school? Official compulsory instructional time for primary school ranges from under 600 hours (Russia) to nearly 1,200 hours (Costa Rica) in the OECD database. Actual hours may be significantly fewer given school closures and teacher absenteeism. In many low- and middle-income countries, instructional time sits at the low end of that range because school days are short. That means that only between 10 and 20 percent of children’s waking hours are spent in school [1].
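For readers who want to check the arithmetic, here is a minimal sketch of the calculation. The 9 hours of sleep and the 600 and 1,200 hour bounds come from the paragraph above; the function names are just illustrative.

```python
HOURS_PER_YEAR = 24 * 365        # 8,760 hours in a year
SLEEP_HOURS_PER_NIGHT = 9        # the assumption used in the text


def waking_hours(sleep_per_night=SLEEP_HOURS_PER_NIGHT):
    """Hours awake per year, given nightly sleep."""
    return HOURS_PER_YEAR - sleep_per_night * 365


def share_in_school(instruction_hours, sleep_per_night=SLEEP_HOURS_PER_NIGHT):
    """Fraction of waking hours spent in official instructional time."""
    return instruction_hours / waking_hours(sleep_per_night)


# Rounded bounds from the text: under 600 hours to nearly 1,200 hours.
for label, hours in [("low end", 600), ("high end", 1200)]:
    print(f"{label}: {share_in_school(hours):.0%} of waking hours")
# prints "low end: 11% of waking hours" and "high end: 22% of waking hours"
```

Using the rounded bounds gives roughly 11 to 22 percent, which is in line with the "between 10 and 20 percent" figure once you account for the actual values being a bit under 600 and 1,200.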

At the same time, the World Development Report 2018 argues convincingly that there is a global learning crisis, with too many children failing to learn foundational skills – like functional reading – in school [2]. Clearly there is a need to improve the quality of schooling. But at the same time, why not leverage those other 80 to 90 percent of waking hours?

One way that parents try to use those extra hours is by paying for out-of-school tutoring. This is prevalent in many countries. But because parents pay for private tutoring, it creates a particular burden on the poorest children and their families. And some evidence suggests that it may create disincentives to teach during the school day (since teachers themselves are often tutors), as in Jayachandran (2014).

Save the Children has developed an approach – “life-wide learning” – which seeks to leverage those extra hours with out-of-school literacy activities. The program is called Literacy Boost. Friedlander and Goldenberg (2016) recently published the results of a randomized controlled trial (RCT) of Literacy Boost in Rwanda, focusing on the early grades of primary education. In the past, Dowd et al. have published non-experimental analysis showing sizeable gains for Literacy Boost participants relative to comparators in various countries.

Literacy Boost has two main components: (1) teacher training, including training in formative assessment (let’s improve the quality of the time that children are in school) and (2) community action (let’s make literacy activities accessible outside of school). The RCT assigns some students to just the teacher training (TT), others to teacher training plus community action (TT+CA), and others continue with business-as-usual schooling.

What do you mean by teacher training?

The teacher training consisted of 6-9 sessions over the course of the school year, carried out on Saturdays or Sundays. The sessions covered topics like “Vocabulary” and “Effective use and management of storybooks in the classroom.” Teachers received a travel allowance for participating, since the training took place at one school within a cluster. Literacy Boost staff would visit teachers in their schools between sessions to help them implement what they had learned, and each training session began with a period of reflection in which teachers brought up challenges they had faced since the last session. Training that combines repeated sessions with coaching has the best chance of success, according to synthesis work that I’ve done in the past (with Popova and Arancibia).

What are these community activities?

Paid community facilitators working with a local NGO (Umuhuza) provided families of students with a 7-session training on “reading awareness,” which includes encouragement and guidance on reading together as well as stimulating oral language skills. They invited volunteers to run Reading Clubs, where children came together to read storybooks aloud and play word games. They also organized Reading Buddies, pairing up a competent reader with a struggling reader and letting them borrow books to read together. (This didn’t work so well when organized through schools, since buddies might not live near each other, so they later revised it to be organized through the Reading Clubs.) The facilitators also put together Reading Festivals, where Reading Clubs would practice reading storybooks and then compete. A donor-funded project provided age-appropriate storybooks.

The right level of randomization and statistical power

The authors argue for randomizing at the level of existing administrative structures. In Rwanda, that’s the “sector,” which is a unit that has a Sector Education Officer who supervises schools. Ben Piper at RTI has made the argument that implementing pilots in the context of existing administrative structures makes for more straightforward scale-up (cited in this report, although I can’t find the presentation). This seems sensible.

But here’s the challenge: there were only 21 sectors in the pilot district. So with 7 sectors in each treatment group and 7 in the control group, the study has very limited statistical power. In some of the analysis (teacher attitudes), the authors explicitly discuss that they’ve clustered the standard errors at the level of randomization; in others (student results), it isn’t discussed explicitly.
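To get a feel for what 7 sectors per arm implies, here is a rough sketch of a minimum detectable effect calculation for a cluster-randomized comparison of two arms. It uses the standard design-effect approximation; the intra-cluster correlation (0.10) and the number of students per sector (100) are hypothetical values chosen for illustration, not figures from the study.

```python
from math import sqrt
from statistics import NormalDist


def min_detectable_effect(clusters_per_arm, students_per_cluster, icc,
                          alpha=0.05, power=0.80):
    """Approximate minimum detectable effect, in standard deviations,
    for a two-arm comparison randomized at the cluster level.

    Uses a normal approximation, which is optimistic with this few clusters.
    """
    z = NormalDist()
    multiplier = z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)
    # Variance of the difference in arm means for a standardized outcome.
    variance = 2 * (icc + (1 - icc) / students_per_cluster) / clusters_per_arm
    return multiplier * sqrt(variance)


# Hypothetical inputs: 100 students per sector, intra-cluster correlation 0.10.
print(round(min_detectable_effect(7, 100, 0.10), 2))    # 7 sectors per arm
print(round(min_detectable_effect(7, 1000, 0.10), 2))   # ten times the students
print(round(min_detectable_effect(70, 100, 0.10), 2))   # ten times the sectors
```

Under these assumed inputs, adding students within the same sectors barely moves the detectable effect, while adding sectors shrinks it substantially. That is why the number of randomized units, not the number of students, is the binding constraint here.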

If you’re going to be underpowered, can you make up for it with rich data?

The program evaluated student learning ability, of course. It assessed a wide range of reading skills (from letter recognition to reading comprehension) along with basic data about students. The program also surveyed teachers about their teaching and assessment strategies. They observed teachers, and they took pictures of the classroom (to see the “print environment” of the classroom). They surveyed the “literacy ecology” of students’ homes and communities: reading habits at home, attitudes toward reading, reading materials availability, etc. An ethnographer followed a small sub-set of students over the course of several days. Finally, the program collected extensive data on the implementation of the activities and how much people participated in them.

I don’t know the reasons why the pilot was implemented in just one district. From a pure cost perspective, I might have traded off some of the richness of the data collection for a larger sample size. That said, it’s not always possible to affect the sample size, and conditional on being underpowered, it’s a rich data set.

So, what did Literacy Boost do?

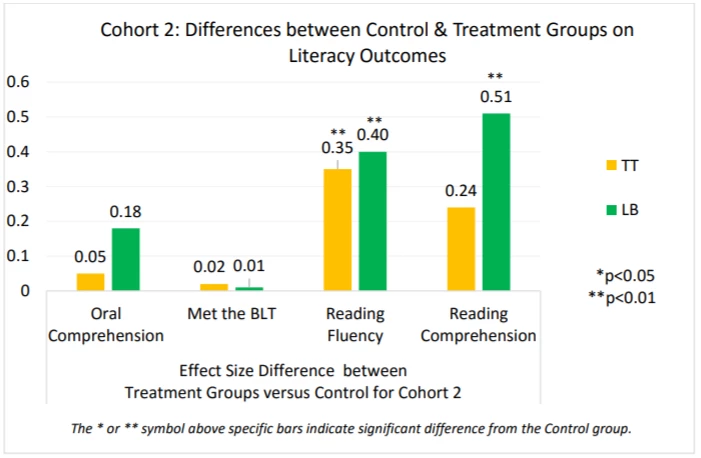

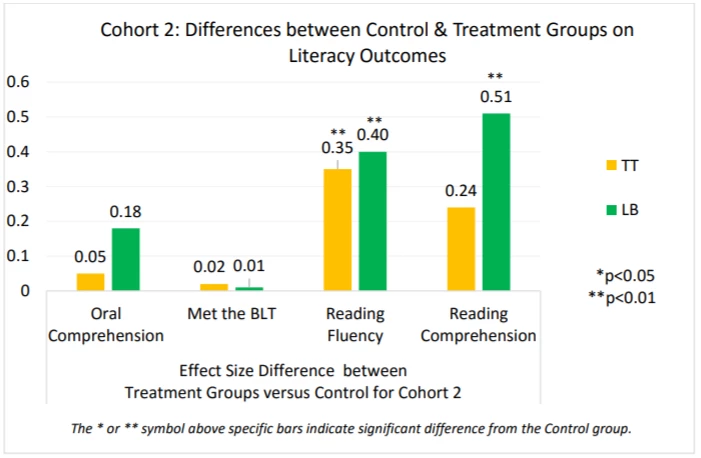

Both the TT and the TT+CA improved reading skills, and TT+CA improved reading by more than TT alone.

Source: Friedlander and Goldenberg (2016)

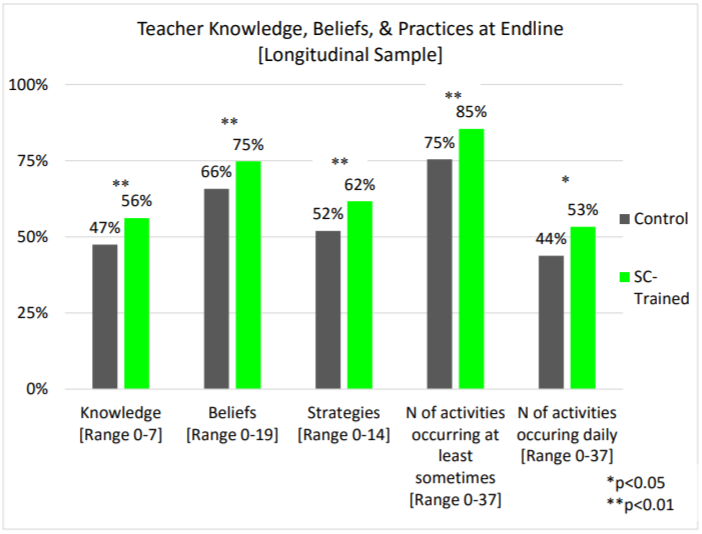

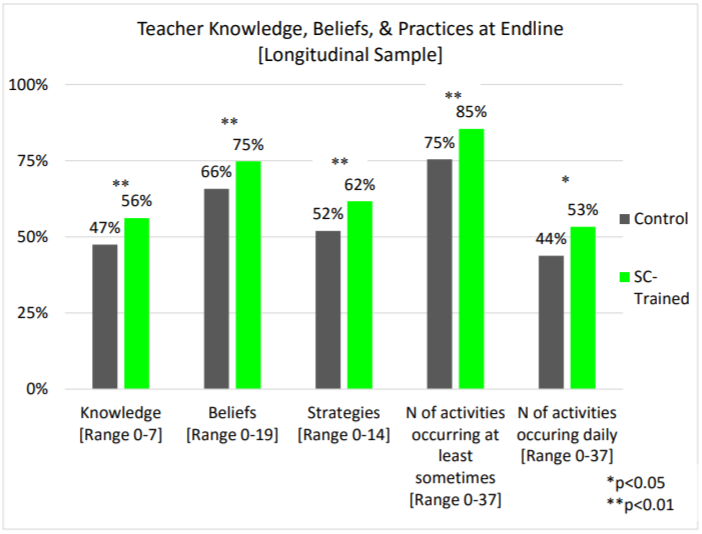

But it’s still not enough: by the end of the study, more than 30 percent of participating students still didn’t pass a basic literacy threshold. This may not be surprising given that just 20 percent of households participated in the workshops (and only one-third reported being aware of the workshops). Classrooms in both TT and TT+CA sectors had more print materials on their walls. Teachers in those schools scored higher on evaluations of their knowledge, belief, and practices about reading instruction.

Source: Friedlander and Goldenberg (2016)

Students in the TT+CA group had more reading habits and interactions, more reading materials, and more child interest and engagement in reading.

How much does all this cost?

Unfortunately, the study (all 200+ pages) has no analysis or systematic reporting of costs. Most impact evaluations don’t, but an increasing number are reporting costs or analyzing relative cost-effectiveness. Piper et al. have a nice example of this with a series of education technology interventions in Kenya.

Take away

Literacy Boost hits on the crucial point that children spend relatively little of their time in school, and so if we really care about literacy, it makes sense to leverage those additional hours. The program improves children’s literacy. Whether it’s a cost-effective package relative to other interventions, however, remains to be researched.

Further reading

- Dowd et al.: “Lifewide learning for Early Reading Development” (2017): “Using longitudinal reading scores of 6,874 students in 424 schools in 12 sites across Africa and Asia, there was 1) a modest but consistent relationship between students’ home literacy environments and reading scores, and 2) a strong relationship between reading gains and participation in community reading activities, suggesting that interventions should consider both home and community learning environments and their differential influences on interventions across different low-resource settings.”

- Dowd et al.: “Literacy Boost: Cross Country Analysis Results” (2013): Do at-risk groups benefit more from Literacy Boost? (Regression evidence suggests “yes.”)

[1] This calculation is adapted from Friedlander and Goldenberg (2016).

[2] The World Development Report is not the first report to highlight the learning crisis, but it's a good collection of the latest evidence on it. (I'm biased; I'm a co-author.)
