About a year ago, I blogged on a paper that had tried to replicate the results of 61 papers in economics and found that in 51% of the cases, the authors couldn’t get the same result. In the meantime, someone brought to my attention a paper that takes a wider sample and also makes us think about what “replication” is, so I thought it would be worth looking at its results.

The paper in question is by Maren Duvendack, Richard Palmer-Jones and Robert Reed and appeared last year in Econ Journal Watch. The paper starts with an interesting history of replication in economics. It turns out that replication goes pretty far back. Duvendack and co. cite the introductory editorial to Econometrica, where Frisch wrote “In statistical and other numerical work presented in Econometrica the original raw data will, as a rule, be published, unless their volume is excessive. This is important to stimulate criticism, control and further studies.” That was in 1933.

Various journals have made similar affirmations of the need for replication over the years. The Journal of Human Resources put it in its policy statement in 1990 – explicitly saying that it welcomed the submission of studies that replicated studies that had appeared in the JHR in the last five years. But this is missing from the current policy, which focuses more on making data and code available with published papers. The Journal of Political Economy took a different approach, and had a “confirmations and contradictions” section from 1976-1999. These explicit publication opportunities may have declined in recent times, but there has been a sharp surge in a different path to replication – the requirement that authors submit their code and dataset for a given paper. Duvendack and co. find 27 journals that regularly publish data and code – and many of these are top journals. The only development field journal that makes this list is the World Bank Economic Review. In addition, many funders now require that, after a decent interval, the data they funded be made publicly available in its entirety.

Before we look at Duvendack and co.’s review of replication trends, it’s worth taking a short detour as to what exactly replication means. Unfortunately, as it’s used in many conversations, it’s imprecise. Michael Clemens has a very nice (and very precise) paper where he lays out a number of distinctions. In this case, precision requires some verbosity, so hang on. Clemens lays out four different types (in two groups):

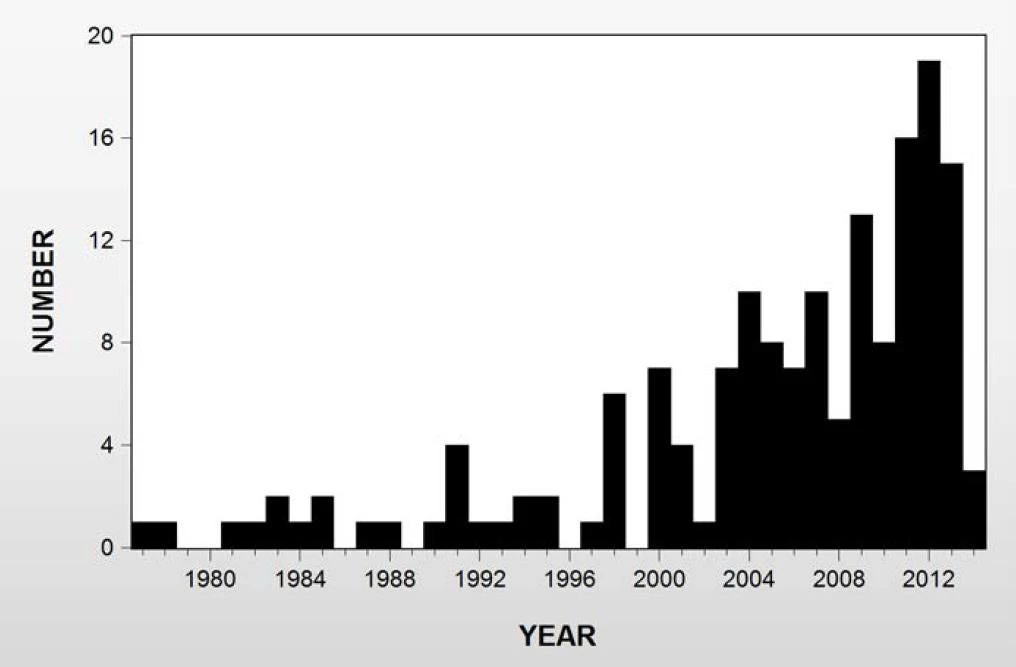

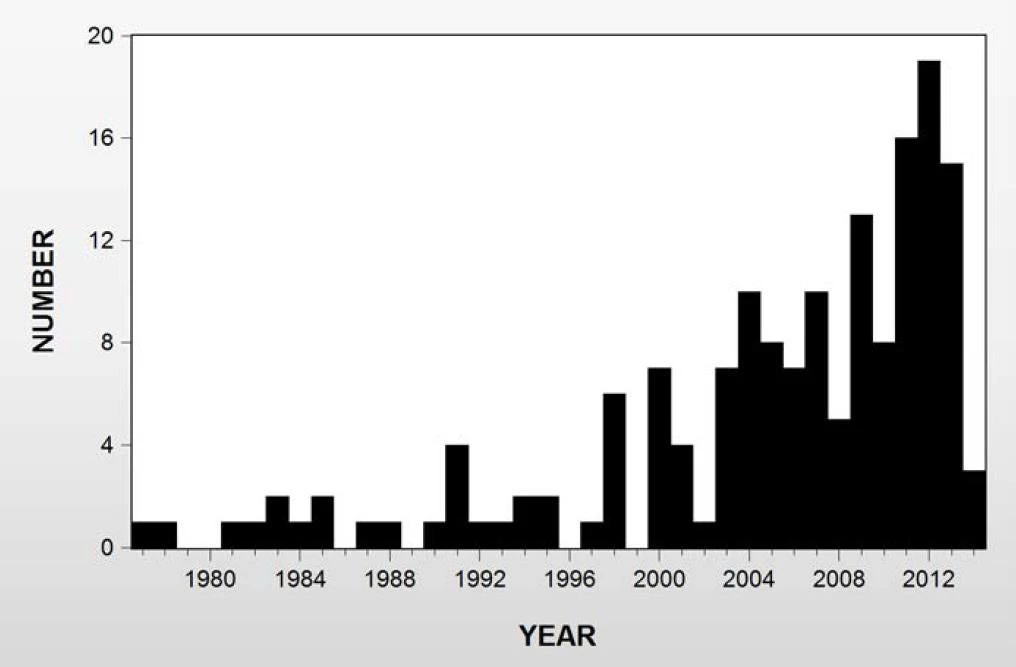

Duvendack and co. are using a broader definition of replication (especially when compared to the paper I blogged on last year): they’re including what Clemens calls robustness. They go out, casting a wide net to look for replication studies (they include not only Google Scholar and the Web of Science, and the Replication in Economics wiki, but also suggestions from journal editors, their own collections and a systematic search of the top 50 economics journals). This search gives them 162 published studies. The time trend is interesting, as the figure below (reproduced from their paper) shows what could be an upwards trend:

One development journal that contributed significantly to these 162 studies was the Journal of Development Studies. We can’t tell how many other development papers are in the 162 since the rest of the main contributors are more general interest journals. The Journal of Applied Econometrics (JAE) is the main overall contributor to this body of work – they clock in with 31 replications – in large part because they have a dedicated replication section which can consist of pretty short summaries.

Duvendack and co. then look at the characteristics of these replications. I am going to focus on the non-JAE, non-experimental (as in experimental economics, not field experiments) studies which number 119. About 61 percent of these studies are an “exact” replication or, to use Clemens’ taxonomy, a verification study. 55 percent of studies extend the original findings. As might be expected, the majority of the 119 studies (73 percent) find a significant difference with the original result. About 17 percent confirm the previous study and 10 percent are mixed. And 26 percent of studies have a reply by the original study authors.

As Duvendack and co. point out, we shouldn’t think of the published studies as a random sample. Which brings us to incentives. Clearly getting confirmatory studies published in major journals is going to be hard, and particularly hard for simple verification studies. Turning to results that disagree with the original study, Duvendack and co. speculate that some journals might be reluctant to publish contradictions of influential authors. I can also imagine that younger researchers may be averse to taking on this particular challenge, given that influential senior researchers may show up in their career futures. Returning to the journal side of the equation, it also doesn’t look particularly good for the journal to take down one of their own papers. On another level, for journals the citation per page count is likely to be significantly lower for a replication than for an original paper (although Duvendack and co. suggest this could be alleviated by publishing very short replication papers with the longer paper as an online appendix). Finally, the incentives for authors of the original study to make replication easy are pretty weak – if someone confirms your study it’s not really a big deal, but it’s a big deal if they don’t.

So all of these factors point towards the lower likelihood of replications. There are a couple of factors that might make replications more likely. The first is there are somewhat more communities to support this than there used to be. Beyond the wiki mentioned above, Duvendack and co. have a list inside economics (e.g. 3ie’s work), but also for other disciplines. In addition, the growth in online storage, the growth in computer processing power, plus the increasing number of journals requiring the posting of code and data lower the costs of replication dramatically. And the trend is positive, so maybe there is some social support. It will be interesting to see what the future brings.

The paper in question is by Maren Duvendack, Richard Palmer-Jones and Robert Reed and appeared last year in Econ Journal Watch. It opens with an interesting history of replication in economics, which turns out to go back a long way. Duvendack and co. cite Ragnar Frisch’s introductory editorial to Econometrica: “In statistical and other numerical work presented in Econometrica the original raw data will, as a rule, be published, unless their volume is excessive. This is important to stimulate criticism, control and further studies.” That was in 1933.

Various journals have affirmed the need for replication over the years. In 1990 the Journal of Human Resources added it to its policy statement, explicitly welcoming submissions that replicated studies published in the JHR within the previous five years. That language is missing from the current policy, which focuses instead on making data and code available alongside published papers. The Journal of Political Economy took a different approach, running a “confirmations and contradictions” section from 1976 to 1999. These explicit publication outlets may have declined in recent years, but there has been a sharp surge in a different route to replication: the requirement that authors submit the code and dataset for a given paper. Duvendack and co. find 27 journals that regularly publish data and code, many of them top journals. The only development field journal on that list is the World Bank Economic Review. In addition, many funders now require that, after a reasonable interval, the data they funded be made publicly available in its entirety.

Before we look at Duvendack and co.’s review of replication trends, it’s worth taking a short detour into what exactly replication means. Unfortunately, as the term is used in many conversations, it’s imprecise. Michael Clemens has a very nice (and very precise) paper that lays out a number of distinctions. Here precision requires some verbosity, so bear with me. Clemens defines four types of study, in two groups:

- Replication (both sub-types use the same sampling distribution of parameter estimates and look for discrepancies arising from random chance, error, or fraud):
  - Verification: same specification, same population and same sample.
  - Reproduction: same specification and same population, but not the same sample.
- Robustness (uses a different sampling distribution of parameter estimates and looks for discrepancies arising from changes in that distribution; as Clemens notes, these studies need not give identical results in expectation):
  - Reanalysis: different specification, same population, and not necessarily the same sample.
  - Extension: same specification, but a different population and a different sample.
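Because the four types differ only in whether the specification, population and sample match the original, the taxonomy can be read as a small decision rule. The Python sketch below is a hypothetical illustration, not anything taken from Clemens’ paper: it assumes a follow-up study can be summarized by three yes/no comparisons with the original, and the names `Study`, `clemens_type` and `clemens_group` are invented here. Combinations the four types don’t cover (such as a new specification applied to a new population) return `None`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Study:
    """Hypothetical summary of a follow-up study relative to the original."""
    same_specification: bool
    same_population: bool
    same_sample: bool


def clemens_type(s: Study) -> Optional[str]:
    """Map a study to one of the four types listed above, or None if uncovered."""
    if s.same_population:
        if s.same_specification:
            # Same specification and population: replication proper.
            return "verification" if s.same_sample else "reproduction"
        # Different specification, same population, any sample.
        return "reanalysis"
    if s.same_specification and not s.same_sample:
        # Same specification applied to a new population (hence a new sample).
        return "extension"
    # e.g. a new specification on a new population: none of the four types.
    return None


def clemens_group(kind: Optional[str]) -> Optional[str]:
    """Return the group ("replication" or "robustness") a type belongs to."""
    if kind in ("verification", "reproduction"):
        return "replication"
    if kind in ("reanalysis", "extension"):
        return "robustness"
    return None


if __name__ == "__main__":
    reanalysis = Study(same_specification=False, same_population=True, same_sample=True)
    print(clemens_type(reanalysis), clemens_group(clemens_type(reanalysis)))
```

Run as a script, this prints `reanalysis robustness`. Writing it out this way makes one point from the list explicit: only the replication group holds the specification and population fixed, which is why its discrepancies are attributed to chance, error or fraud rather than to a changed sampling distribution.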

Duvendack and co. use a broader definition of replication than the paper I blogged on last year: they include what Clemens calls robustness studies. They cast a wide net for replication studies, drawing not only on Google Scholar, the Web of Science and the Replication in Economics wiki, but also on suggestions from journal editors, their own collections and a systematic search of the top 50 economics journals. This search yields 162 published studies. The time trend is interesting: the figure reproduced from their paper shows what could be an upward trend in published replications.

One development journal that contributed significantly to these 162 studies is the Journal of Development Studies. We can’t tell how many other development papers are among the 162, since the remaining main contributors are general-interest journals. The Journal of Applied Econometrics (JAE) is the largest single contributor, with 31 replications, in large part because it has a dedicated replication section whose entries can be quite short summaries.

Duvendack and co. then look at the characteristics of these replications. I am going to focus on the 119 studies that are neither from the JAE nor experimental (as in experimental economics, not field experiments). About 61 percent of these are “exact” replications or, in Clemens’ taxonomy, verification studies, and 55 percent extend the original findings; since these two shares sum to more than 100 percent, some studies must do both. As might be expected, the majority of the 119 studies (73 percent) find a significant difference from the original result, about 17 percent confirm it, and 10 percent are mixed. And 26 percent of the studies are accompanied by a reply from the original authors.
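Since these shares all refer to the same 119 studies, it can help to turn them back into rough counts. The Python sketch below does that arithmetic using only the figures quoted in this post. It assumes the 31 JAE replications are counted within the 162, and it rounds each percentage to the nearest whole study, so the counts are approximate; the variable names are illustrative.

```python
import math

TOTAL_STUDIES = 162  # published replication studies found in the search
JAE_STUDIES = 31     # replications from the Journal of Applied Econometrics
FOCUS_N = 119        # non-JAE, non-experimental studies discussed above

# Assuming the JAE studies are part of the 162, the non-JAE
# experimental-economics studies make up the remaining gap.
non_jae = TOTAL_STUDIES - JAE_STUDIES      # 131
experimental_non_jae = non_jae - FOCUS_N   # 12

# Shares of the 119 focus studies, as quoted in the post.
shares = {
    "verification": 0.61,  # "exact" replications
    "extension": 0.55,     # extend the original findings
    "different": 0.73,     # significant difference from the original
    "confirms": 0.17,
    "mixed": 0.10,
    "reply": 0.26,         # reply from the original authors
}

# Approximate head counts implied by each rounded percentage.
approx_counts = {name: round(p * FOCUS_N) for name, p in shares.items()}

# The three outcome categories should partition the sample (sum to 100%).
assert math.isclose(shares["different"] + shares["confirms"] + shares["mixed"], 1.0)

# Verification and extension shares exceed 100% combined, so by
# inclusion-exclusion at least this share of studies does both.
min_both = shares["verification"] + shares["extension"] - 1.0

if __name__ == "__main__":
    for name, count in approx_counts.items():
        print(f"{name:>12}: ~{count} of {FOCUS_N}")
    print(f"at least ~{min_both:.0%} of studies both verify and extend")
```

On these numbers, roughly 87 studies find a significant difference, 20 confirm and 12 are mixed, and at least about 16 percent (around 19 studies) must be both verifications and extensions.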

As Duvendack and co. point out, we shouldn’t think of the published studies as a random sample. Which brings us to incentives. Clearly, getting confirmatory studies published in major journals is going to be hard, and particularly hard for simple verification studies. Turning to results that disagree with the original study, Duvendack and co. speculate that some journals might be reluctant to publish contradictions of influential authors. I can also imagine that younger researchers may be averse to taking on this particular challenge, given that those influential senior researchers may later have a say in their careers. Returning to the journal side of the equation, it also doesn’t look particularly good for a journal to publish a takedown of one of its own papers. On another level, journals are likely to get significantly fewer citations per page from a replication than from an original paper (although Duvendack and co. suggest this could be alleviated by publishing very short replication papers, with the longer paper as an online appendix). Finally, the incentives for authors of the original study to make replication easy are pretty weak: if someone confirms your study it’s not really a big deal, but it’s a big deal if they don’t.

So all of these factors make replications less likely. There are, however, a couple of factors that might make them more likely. The first is that there are more communities supporting replication than there used to be. Beyond the wiki mentioned above, Duvendack and co. list such initiatives both within economics (e.g. 3ie’s work) and in other disciplines. Second, the growth in online storage and computer processing power, together with the increasing number of journals that require authors to post code and data, dramatically lowers the cost of replication. And the publication trend is upward, which may suggest some growing social support. It will be interesting to see what the future brings.
