We are learning more about interventions that work in education. Back in October, I wrote about Patrick McEwan’s meta-analysis of 76 randomized trials on student learning in primary education alone. That was bookended by a meta-analysis of 75 randomized and quasi-experimental evaluations and a systematic review on the same topic in Science. So we know something (not everything!) about helping children learn.

Contrast that with our knowledge of “what works” in adult education in low-income environments: __________. Yep, that’s about it. (To be more precise, here’s a study on an adult literacy program in Venezuela that probably had no impact; and here’s a study from a program in Kenya with some suggestive positive results but with massive attrition. So THAT’S what we know.)

Two years ago Aker et al. added to that with a study from Niger showing that a mobile phone-based component added to a standard adult literacy program improved math and literacy scores for participants, and those results persisted, at least in math. So simple mobile phones can make standard adult literacy programs better.

Fast forward to last month, when Ksoll et al. put out a working paper on a new, purely mobile phone-based adult literacy intervention in Los Angeles, California. So this is a literacy program with no teachers. Not as resource-poor an environment as Venezuela, Kenya, or Niger, to be sure, but when we think about the quality of education and the penetration of cell phones in many low-income environments, the model seems relevant…and bold!

How the intervention – Cell-Ed – worked: Participants call a certain phone number from their simple cell phone – no smartphone required – and thereby activate the next lesson (called a “micromodule”). Each lesson consists of (a) an audio lesson that lasts one to three minutes, (b) a text message reinforcing the lesson sent to the participant, and (c) an additional text message asking the participant to text back a micro-assignment, which the participant then receives feedback on. If they pass, they move on to the next micromodule. If they fail, they repeat the micromodule. There are 437 of these micromodules in total, adapted from 43 lessons in a more traditional adult literacy program, Leamos. One more element: participants received pre-recorded audio messages encouraging them along the way.
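To make the pass-or-repeat logic concrete, here is a minimal sketch in Python. It is only an illustration of the progression rule described above, not Cell-Ed's actual system: the function `run_course` and the callback `passes_assignment` are hypothetical names, and the callback simply stands in for the grading of the texted-back micro-assignment.

```python
def run_course(num_modules, passes_assignment):
    """Walk a learner through the micromodules in order.

    passes_assignment(module, attempt) -> bool is a hypothetical callback
    standing in for the grading of the texted-back micro-assignment.
    Returns a list with the number of attempts made on each module.
    Note: this loops forever if the callback never returns True.
    """
    attempts = []
    for module in range(1, num_modules + 1):
        attempt = 1
        # A failed micro-assignment means repeating the same micromodule.
        while not passes_assignment(module, attempt):
            attempt += 1
        attempts.append(attempt)
    return attempts


def fails_every_third_once(module, attempt):
    """Made-up learner: fails every third module on the first try only."""
    return attempt > 1 or module % 3 != 0


# 437 micromodules, as reported in the post.
attempts = run_course(437, fails_every_third_once)
total_calls = sum(attempts)
print(total_calls)
```

The point of the sketch is that a learner's path is just a sequence of short calls, with repetition built in wherever a micro-assignment is failed.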

How the study was designed: The researchers worked with community centers and schools in Los Angeles to find 250 potential participants. Initial screening eliminated individuals who could read sentences, were over 80 years old, or needed eyeglasses but didn’t own them. (Maybe we need the Gansu Vision Intervention Project, evaluated to be highly effective by Glewwe et al., transplanted to L.A.) The remaining 124 individuals were invited to a baseline evaluation: 89 showed up. After a reading evaluation, people who scored lower than “basic” in two out of three reading sub-tests were considered eligible, leaving a sample of 70 participants. Stratified random assignment to the program or the control group took place before the evaluation, but participants only found out after evaluation. (Literacy evaluators were unaware of assignment.) Participants received some small monetary compensation in the form of a gift card for each round of data collection and for completing the program within 4.5 months.
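The recruitment funnel is easier to see as arithmetic. The short Python sketch below uses only the four counts given above; the stage labels and the helper `funnel` are my own shorthand, not terms from the paper.

```python
# Counts reported in the post, in order of the recruitment stages.
stages = [
    ("found via community centers and schools", 250),
    ("remaining after initial screening", 124),
    ("showed up for baseline evaluation", 89),
    ("eligible after reading evaluation", 70),
]


def funnel(stages):
    """Return (label, count, dropped_from_previous, share_of_initial) rows."""
    initial = stages[0][1]
    previous = initial
    rows = []
    for label, count in stages:
        rows.append((label, count, previous - count, count / initial))
        previous = count
    return rows


rows = funnel(stages)
for label, count, dropped, share in rows:
    print(f"{label}: {count} (dropped {dropped}, {share:.0%} of initial pool)")
```

So the final sample of 70 is a bit over a quarter of the people initially identified, which is worth keeping in mind when thinking about who these results apply to.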

To be clear on the literacy levels, baseline assessments revealed that participants (and comparators) had reading scores equivalent to an average American child age six years and six months, i.e., about that of a first-grader.

The data: They administered a battery of literacy tests at baseline and again 3 to 4.5 months later. (They used the Woodcock-Muñoz III Language Survey, if you’re into those kinds of details.) They also gathered data on socio-demographic characteristics, self-esteem (Rosenberg scale), and self-efficacy (General Self Efficacy scale), at baseline and follow-up. They administered both of these again to the control group 4.5 months after the first follow-up. They also used Cell-Ed usage data and brief phone calls.

Balance across the treatment and control groups looks pretty good overall with one BIG exception: Treatment individuals were more likely to have a cell phone at baseline (61% versus 41%!). Participants who didn’t have a cell phone at baseline received a basic phone from Cell-Ed. The authors control for baseline values of this and the one other (small) significant difference, but still, it gives pause. Everyone assigned to treatment at least started the program (and no one in the control group did), so the intent-to-treat and the average-treatment-on-the-treated are the same.

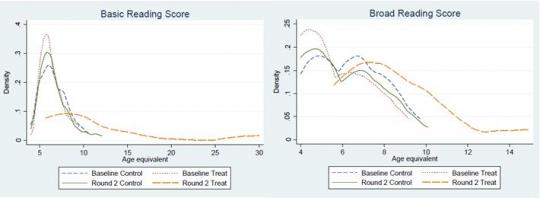

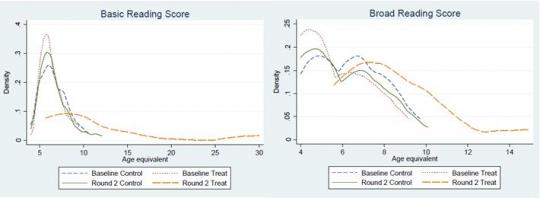

Basic results: Basic reading scores (letter-word identification and word attack) went up about five years (i.e., from the level of an average 6.5 year old to an 11.5 year old). Broad reading scores (letter-word identification, reading fluency, and passage comprehension) went up about two and a half years. In about four months. Without a teacher.

Here’s the distribution of basic and broad reading scores from the paper:

These results barely move when the authors control for baseline differences in cell phone ownership and self-esteem, include fixed effects, or try a few other specifications. So, wow.

But wait, before you pull your children out of school, there’s unbalanced attrition! [Cue the ominous music.] 25% of people in the treatment group didn’t participate in the follow-up survey, versus about 9% in the control group. That 25% is half the dropout rate of about 50% reported elsewhere for adult literacy programs, so from a program perspective, it’s a win. From an evaluation perspective, not so much.

What to do? First, the authors implement standard Lee bounds (and provide a pretty intuitive exposition of how the bounds work). The difference in attrition between treatment and control is 16 percentage points: For the lower bound, they drop the 16% “worst” (in outcomes) observations from the control group. For the upper bound, they drop the 16% “best.” The lower bounds for both broad and basic reading are positive. They then tighten those bounds a little bit by ordering the control group based on how often they responded to the three surveys, an approach they call the “adjusted Lee bound.” The results are similar. Finally, they test a couple of other assumptions and construct another set of lower bounds, finding smaller but still positive effects.
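For readers who want to see the trimming in action, here is a rough Python sketch of the basic idea as described above: sort the control group's observed outcomes, drop a share from the bottom for the lower bound and from the top for the upper bound, and compare means. This is not the authors' code. The function name `lee_bounds`, the rounding of the number of trimmed observations, and the scores are all my own assumptions, and the trimming share is left as a parameter (Lee's original procedure derives it from the two groups' response rates).

```python
from math import floor
from statistics import mean


def lee_bounds(treated, control, trim_share):
    """Return (lower, upper) bounds on the treatment effect.

    treated, control: outcomes observed at follow-up in each group.
    trim_share: fraction of observed control outcomes to drop.
    Assumes the control group is the one with less attrition.
    """
    ordered = sorted(control)
    k = floor(trim_share * len(ordered))  # observations to trim (rounded down)
    # Lower bound: drop the k worst control outcomes, which raises the
    # control mean and shrinks the estimated effect.
    lower = mean(treated) - mean(ordered[k:])
    # Upper bound: drop the k best control outcomes instead.
    upper = mean(treated) - mean(ordered[:len(ordered) - k])
    return lower, upper


# Made-up scores, purely for illustration (not data from the paper).
treated_scores = [9.0, 10.0, 11.0, 12.0]
control_scores = [4.0, 5.0, 6.0, 7.0, 8.0, 6.0, 5.0, 7.0, 6.0, 6.0]

lower, upper = lee_bounds(treated_scores, control_scores, trim_share=0.16)
print(f"lower bound: {lower:.2f}, upper bound: {upper:.2f}")
```

If even the lower bound stays above zero, as the authors report for both reading measures, differential attrition alone is unlikely to explain away the effect.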

So the learning results look pretty robust. The self-esteem and self-efficacy results look positive but are less robust to different specifications. Interestingly, weekly self-esteem assessments seem to track performance in the program: If you’re failing your micromodules, you’re feeling worse about yourself.

The sample is pretty small, there is some baseline imbalance (although the results are robust to controlling for it), and there is unbalanced attrition (although the results are robust to an array of bounds). This intervention is exciting and the results are promising: It’s time to test it rigorously at a larger scale.

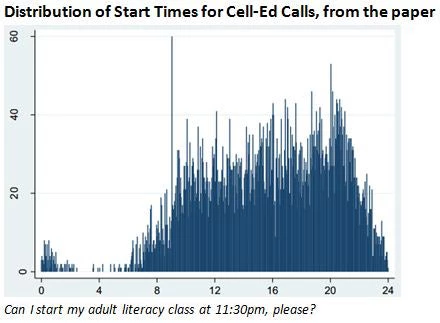

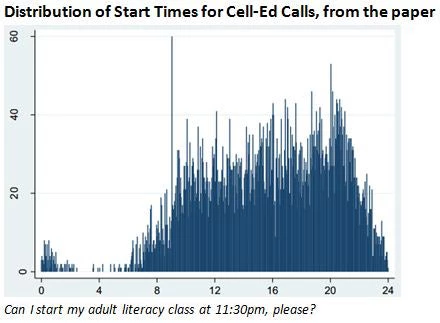

Why did this program seem to work so well? The authors put forth the hypothesis that the flexibility of this program, relative to standard adult education classes, is crucial to its success. Of course, there was no “standard adult education” treatment arm, so they can’t test that rigorously. But they do report detailed data on how participants access the program, and it is certainly suggestive. Participants call into the program at all times of the day and night, mostly between 8am and midnight.

Participants call in on all days of the week, and most interactions with the system last about ten minutes. This is unlike any adult education class I’ve seen. It’s consistent with what Mullainathan and Shafir’s book, Scarcity, calls making programs to help the poor more fault-tolerant, to borrow a term from engineering. In other words, make it so that low-income individuals don’t have to drop out of a training or literacy program just because they missed a class due to an extra shift at work or a daycare failure. The cell phone platform achieves exactly that.

Going mobile: Mobile phones clearly aren’t a silver bullet for all of our development problems: We’ll probably be waiting a while for the iPhone app that physically vaccinates children. (Anyone?) Aker herself, one of the authors on this paper, has an interview from 2011 trying to separate the hope from the hype.

But they are at the center of some promising interventions: increasing adherence to rapid diagnostic tests among malaria patients or to antiretroviral therapy among HIV-positive individuals. And in some cases, like this one, the cell phone program manages not just to complement what we think of as a crucial input, but actually to replace it.
