On Friday I linked to a description of the survey procedures for an opinion poll in Cuba. It included the statement “At least three attempts were made to reach the selected individual, after which interviewers moved to the next house”. This recommendation of three callbacks seems to be standard in a lot of market research, and it is also taken as given in much of the survey documentation I come across. For example, Gero Carletto of the LSMS team (*) informs me that they recommend a minimum of three callbacks at the most appropriate time; see also examples from RAND, from USAID, and from the DHS in India. While many of these specify a minimum of three callbacks, this minimum often becomes the maximum for a contracted survey firm, given the cost of going back again.

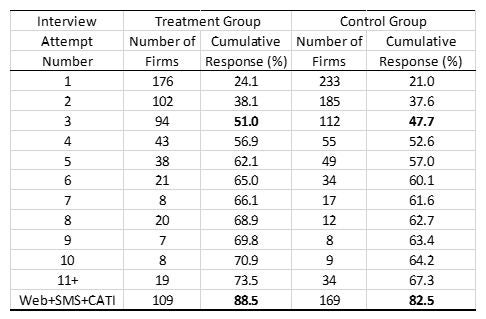

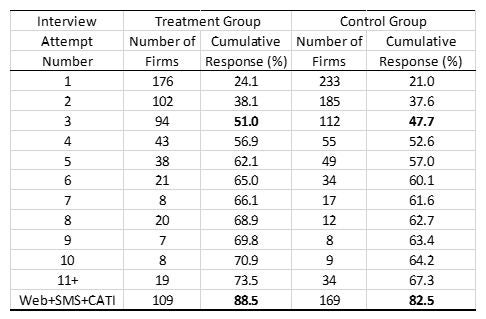

I have also just finished collecting follow-up data for a business plan competition I am evaluating in Nigeria. This is a panel survey in which the experimental group consists of just over 1,800 individuals located all around the country. Motivated by a paper I had previously blogged about, on using call attempts to form bounds in the presence of attrition, I made sure the survey company (TNS RMS Nigeria Ltd.) carefully recorded each in-person visit attempt. Then, after all the visits had been conducted, we ran a final phase to get more responses, administering a much shorter version of the questionnaire by email and by computer-assisted telephone interviewing (CATI), and sending SMS messages directing people to the web survey. This table summarizes the results:

If I had contracted the survey firm for up to three callbacks, the response rate would have been 51% for the treatment group and 48% for the control group. Ultimately we managed to raise this to 89% for the treatment group and 83% for the control group. A few points stand out:

- Persistence really pays off. Even leaving aside the Web+SMS+CATI category, another 25% of the sample responded after more than three attempts had already been made to reach them on different days and at different times.

- Using the short survey to collect data on a few key outcomes, even when we couldn’t administer the full survey, also did a lot to boost overall response. It yielded another 15% of the sample at a point when we might have thought we had obtained all the data we could.

- The treatment-control gap in response rates is actually larger at the end of all this effort (6 percentage points) than it would have been after three callbacks (3.3 percentage points). Still, I feel much better about the accuracy of the results and of the bounding exercises with response rates above 80% than I would with a small treatment-control difference in response rates at 50% attrition.

- An extreme version of the callback is going back in another survey wave. In this round, 72 of the respondents were individuals from whom I had obtained no data a year ago, while 196 of this round’s attritors were people I had managed to get data from last year. This is another reason to run multiple follow-up rounds.

- Business surveys of this type can be much tougher than household surveys. Businesses can be mobile or close for periods, and there are very few respondents in any particular geographic location, so more attempts may be needed.
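Recording every visit attempt makes two calculations straightforward. The following is a minimal sketch of both, under stated assumptions. First, the "what if we had stopped at three callbacks" counterfactual is simply the share of each arm reached on or before a given visit. Second, one simple way to see why 83 to 89% response is reassuring even though the treatment-control gap widened is to look at worst-case bounds (in the spirit of Horowitz and Manski) for an outcome bounded between 0 and 1. Each arm's mean is then known only to within its nonresponse rate, so the bounds on the treatment effect are as wide as the two nonresponse rates combined. This is an illustration only, not necessarily the bounding method used for this evaluation. The `Respondent` record layout, its field names, both function names, and the toy data are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Respondent:
    """Hypothetical per-person record built from a visit-attempt log."""
    arm: str                      # "treatment" or "control"
    success_visit: Optional[int]  # visit number at which the interview was completed; None if never reached


def response_rate_with_cap(people: List[Respondent], arm: str, max_visits: int) -> float:
    """Share of one arm that would have responded had fieldwork stopped after max_visits visits.

    Whether "three callbacks" means three visits in total or three after the
    first is a contract detail (an assumption here); the caller picks max_visits.
    """
    group = [p for p in people if p.arm == arm]
    if not group:
        raise ValueError(f"no respondents in arm {arm!r}")
    reached = [p for p in group
               if p.success_visit is not None and p.success_visit <= max_visits]
    return len(reached) / len(group)


def worst_case_bounds_width(r_treat: float, r_control: float) -> float:
    """Width of worst-case bounds on a treatment-control difference in means
    for an outcome bounded in [0, 1].

    Missing outcomes are set to 0 or to 1, so each arm's mean is known only up to
    an interval whose length equals that arm's nonresponse rate; the bounds on
    the difference are as wide as the two intervals combined.
    """
    return (1 - r_treat) + (1 - r_control)


if __name__ == "__main__":
    # Toy attempt log (made-up data, not the Nigeria survey).
    people = [
        Respondent("treatment", 1), Respondent("treatment", 2),
        Respondent("treatment", 5), Respondent("treatment", None),
        Respondent("control", 3), Respondent("control", 4),
        Respondent("control", None), Respondent("control", None),
    ]
    for cap in (3, 10):
        print(cap,
              response_rate_with_cap(people, "treatment", cap),
              response_rate_with_cap(people, "control", cap))

    # Response rates quoted in the post: after three callbacks vs. after all follow-up.
    print(round(worst_case_bounds_width(0.51, 0.48), 2))
    print(round(worst_case_bounds_width(0.89, 0.83), 2))
```

With the response rates quoted above, worst-case bounds on a 0/1 outcome would span about 1.01 after three callbacks but only about 0.28 after all the follow-up. This is one way to see why response rates above 80% are more comforting than a small gap at 50% attrition. Trimming-style bounds, such as those based on call attempts, behave differently because they depend mainly on the gap between arms.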

(*) I should also note that Gero emphasized the importance of when callbacks are made, not just how many are made. He stressed the need to try at times such as early morning or evening, or other times when people are more likely to be at home, and noted that the number of callback attempts then depends on the number of days the team is working in an enumeration area. The callback issue is perhaps less of a concern for an initial cross-sectional survey than for panel surveys.

Thanks to DFID for funding the survey used here.
