When I was a graduate student setting off on my first data collection project, my advisors pointed me to the ‘Blue Books’ for advice on how to make survey design choices. The Glewwe and Grosh volumes are still an incredibly useful resource on multi-topic household survey design. Since the publication of these volumes, the rise of panel data collection, increasingly in the form of randomized control trials, has prompted a discussion about survey design in academic papers in development economics and on this blog (for example here and here). Given the multiple survey design choices that are possible, and that these choices may be specific to a survey module, how do we know which survey design choices affect data quality? Or put a different way, if one were to update the Glewwe and Grosh volumes, what is the survey methodology ‘methodology’ that would permit us to causally estimate the effect of survey design choices?

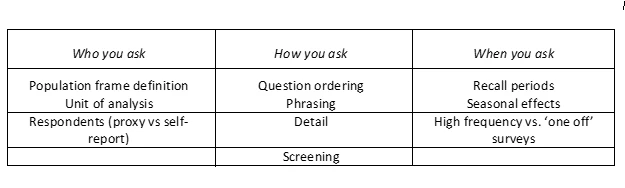

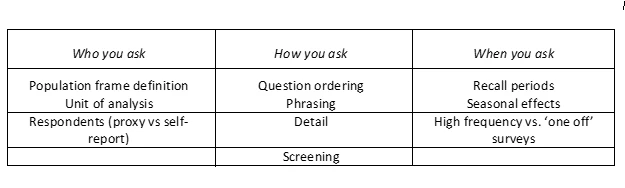

There are many survey design choices that one might make in designing a questionnaire, including who you ask, how you ask, and when you ask your survey questions. Any one of these choices could affect data quality.

One potential survey methodology ‘methodology’ would be to compare between surveys. It is important to remember, though, that multi-topic surveys are complex instruments. Comparisons between surveys do not necessarily allow researchers to attribute differences in data to survey design choices, because any two surveys differ in multiple ways that might affect data quality. Sampling variation, seasonality, or enumerator effects could affect data quality apart from variation in the who, how, and when choices above. It is also likely that several survey design choices vary between surveys at once, confounding the estimated effect of any one choice on data quality.

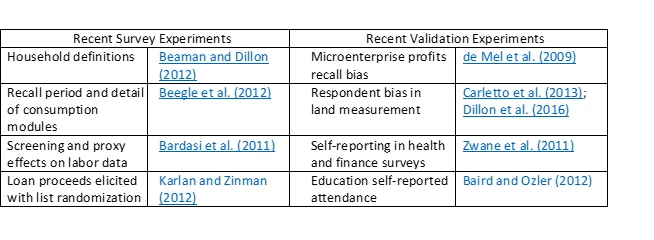

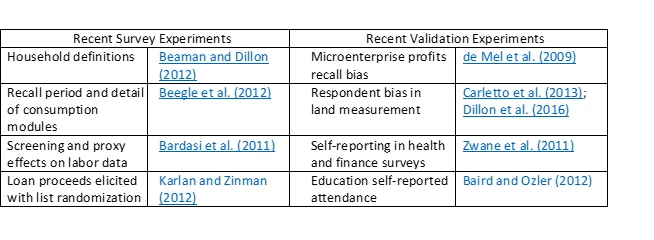

Two survey methodology ‘methodologies’ commonly used in the recent measurement literature seem promising for moving us past descriptive between-survey comparisons: survey experiments and validation studies. Each of these methodologies identifies a causal effect of a survey design choice on data quality.

1. Survey Experiments

Survey experiments randomly assign survey design choices to assess their relative causal effect. That the effect is relative is a key observation about survey experiments. Random assignment provides strong internal validity, which is important because a multi-topic questionnaire contains multiple design choices. However, the strength of this approach is also a potential limitation. In particular, the measurement error associated with each choice is not measured against ‘objective truth’. We can learn the tradeoffs between design choices and their magnitude, but both designs could still measure the outcome with error.
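To make the idea of a relative effect concrete, here is a minimal sketch of a survey experiment in Python. Everything in it is an assumption for illustration: the design labels `A` and `B`, the helper names `assign_designs` and `difference_in_means`, and the simulated reporting biases are hypothetical and do not come from any study.

```python
import random
import statistics

def assign_designs(household_ids, designs=("A", "B"), seed=0):
    """Randomly assign each household to one questionnaire design."""
    rng = random.Random(seed)
    ids = list(household_ids)
    rng.shuffle(ids)
    # Cycle through the shuffled list so the arms are (nearly) equal in size.
    return {hh: designs[i % len(designs)] for i, hh in enumerate(ids)}

def difference_in_means(responses, assignment, treat="B", control="A"):
    """Effect of design `treat` relative to `control` on the mean reported value."""
    treated = [responses[hh] for hh, d in assignment.items() if d == treat]
    controls = [responses[hh] for hh, d in assignment.items() if d == control]
    diff = statistics.mean(treated) - statistics.mean(controls)
    # Unequal-variance standard error of the difference in means.
    se = (statistics.variance(treated) / len(treated)
          + statistics.variance(controls) / len(controls)) ** 0.5
    return diff, se

# Simulated illustration only: made-up numbers, not results from any survey.
rng = random.Random(1)
households = range(2000)
assignment = assign_designs(households)
true_value = {hh: rng.gauss(100, 20) for hh in households}
# Hypothetical reporting biases: both designs under-report, A by more than B.
bias = {"A": -10.0, "B": -4.0}
responses = {
    hh: true_value[hh] + bias[assignment[hh]] + rng.gauss(0, 5)
    for hh in households
}

diff, se = difference_in_means(responses, assignment)
print(f"B - A = {diff:.2f} (SE {se:.2f})")
```

In this simulation the difference in means estimates the gap between the two designs' biases, but nothing in the experimental data reveals that both designs under-report the true value. That is the sense in which the estimated effect is only relative.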

2. Validation Studies

Validation studies rely on comparing alternative designs to an objective truth, such as administrative data, a gold standard, or repeated observations over time. The advantage of this survey methodology ‘methodology’ is that the causal effect of a survey design choice is estimated relative to this benchmark, quantifying the measurement error associated with each choice. The limitation is that the benchmark might not capture ‘objective truth’: administrative data may itself be reported with error, and the gold standard may not have been validated. When validation studies rely on repeated observations to assess the consistency of responses, assuming that convergence reflects the true response, the effect of repeated interviewing may bias estimates.
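A validation study's core calculation can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed method: the function name `measurement_error`, the design labels `A` and `B`, and the simulated benchmark and biases are all hypothetical, and the benchmark is treated as error-free, which the caveats above suggest may not hold in practice.

```python
import math
import random
from statistics import mean

def measurement_error(reported, benchmark):
    """Summarise the error in reported values against a benchmark for the same units."""
    errors = [reported[u] - benchmark[u] for u in reported]
    return {
        "mean_error": mean(errors),                      # systematic bias vs. benchmark
        "rmse": math.sqrt(mean(e * e for e in errors)),  # overall size of the error
    }

# Simulated illustration only: made-up numbers, not results from any survey.
rng = random.Random(2)
units = range(1000)
# Assumption: the benchmark (e.g. an administrative record) is measured without error.
benchmark = {u: rng.gauss(100, 20) for u in units}
# Hypothetical reporting biases for two questionnaire designs.
bias = {"A": -10.0, "B": -4.0}

reported = {design: {} for design in bias}
for u in units:
    design = rng.choice(["A", "B"])  # each unit is interviewed under one design
    reported[design][u] = benchmark[u] + bias[design] + rng.gauss(0, 5)

summary = {d: measurement_error(reported[d], benchmark) for d in reported}
for design, stats in summary.items():
    print(design, {name: round(value, 2) for name, value in stats.items()})
```

Unlike the comparison between two randomly assigned designs, each design here gets its own error estimate relative to the benchmark. Those estimates are only as good as the benchmark itself.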

As development economists become more interested in survey design, it is important to understand how to make progress on measurement issues, to be able to assess what we know, and to use this knowledge to tackle gaps in the emerging measurement literature. Survey experiments and validation studies provide a survey methodology ‘methodology’ that can move us further towards causal estimates of the effects of survey design choices, confirming or refuting the observations we collect in the field during piloting and fieldwork.

