- Sure, that intervention delivered great results in a well-managed pilot. But it doesn’t tell us anything about whether it would work at a larger scale.

- Does this result really surprise you? (With both positive results and null results, I often hear, “Didn’t we already know that intuitively?”)

A recent paper by Dillon et al., “Cognitive science in the field: A preschool intervention durably enhances intuitive but not formal mathematics,” provides answers to both of these and gives new insights into the design of effective early child education.

The background. Preschool has positive impacts. Experts say that quality really matters. But efforts to improve quality in Malawi and Chile have not shown impacts on child development when tested with randomized controlled trials. There is an association between child development outcomes and preschool quality (as in Brazil), but that could easily be driven by – say – richer households living in areas with higher quality preschools and also providing lots of other investments in their children. So how do we make preschool better?

Children begin to develop basic numeric abilities from very early ages: judging more versus less, for example (“Hey, my sister got more than me!”). These are largely “nonsymbolic” (guessing which shape doesn’t belong or comparing sizes). Later, they learn “symbolic” math (such as that 5 is bigger than 3, even if they’re the same font size). Researchers believe that (a) children can get better at nonsymbolic math through exercises, and (b) getting better at nonsymbolic math will help them get better at symbolic math. There is some evidence on the latter (here and here, for example), but it tends to be short term (with the final measurement taking place less than a month after the end of training).

The experiment. The team worked with the Indian organization Pratham in 214 preschools in Delhi, India. Preschools were divided into three groups. The Math Games group played math games for three 1-hour sessions per week over 4 months. The math games focused on nonsymbolic abilities, like the one in the image below.

Source: Dillon et al. 2017

The Social Games group played similarly engaging games for a similar amount of time, but the games focused on “emotion reading and gaze following.” The Control schools (and the treatment schools when not playing the games) used a preschool curriculum designed by Pratham. So comparing the Social Games to the Control tells us whether just playing games improves math ability, and comparing the Math Games to the Social Games tells us whether the content of the nonsymbolic math games improves math ability. Children were evaluated before the intervention, and then three times afterward (from 0-3 months after the intervention up to 12-15 months after the intervention). The paper describes the games in more detail, and the supplementary materials describe the games and testing in MUCH more detail.
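
The logic of those two comparisons can be sketched in a few lines of Python. This is only an illustration: the scores below are invented, the names `scores` and `mean_difference` are my own, and the paper's actual analysis is more careful than a simple difference in means (for one thing, whole preschools, not individual children, were assigned to groups).

```python
from statistics import mean

# Hypothetical post-test math scores, invented purely for illustration.
# These are NOT data from Dillon et al.
scores = {
    "control": [10, 12, 11, 13],
    "social_games": [11, 13, 12, 14],
    "math_games": [13, 15, 14, 16],
}


def mean_difference(treated, comparison):
    """Difference in average scores between two groups."""
    return mean(treated) - mean(comparison)


# Social Games vs. Control: does just playing engaging games help?
games_effect = mean_difference(scores["social_games"], scores["control"])

# Math Games vs. Social Games: does the nonsymbolic math content help,
# over and above the effect of playing games at all?
content_effect = mean_difference(scores["math_games"], scores["social_games"])

print(f"Effect of playing games: {games_effect}")
print(f"Effect of math content:  {content_effect}")
```

The Social Games arm is what lets you separate "kids got extra attention and fun activities" from "kids practiced nonsymbolic math."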

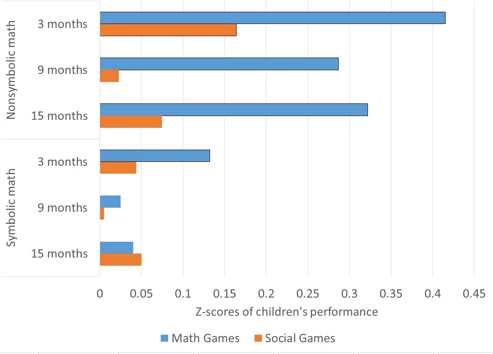

The findings. First, kids played the games. That’s an implementation win in and of itself. Second, in the short run (0-3 months after the intervention), the Math Games improved both nonsymbolic math performance (i.e., the kids got better at the games) and symbolic math performance (yay, it translates). This backs up what had been found elsewhere. The Social Games also improved nonsymbolic math performance in the short run, but not as much as the Math Games.

In the subsequent follow-ups, two things changed. The impact of the Social Games on any math ability disappeared. But also, the impact of the Math Games on symbolic math ability disappeared. So kids were still better at the kinds of activities that were in the Math Games, but that no longer translated into the kind of math that you want kids to learn in school.

Impact of Math Games and Social Games on Children's Math Performance

Source: My construction, based on Dillon et al. 2017

So, what is the verdict on the two hypotheses from a field experiment with a longer follow-up?

- Can children get better at nonsymbolic math through exercises? Yes!

- Does getting better at nonsymbolic math help children get better at symbolic math? No. (Okay, yes in the very short run, but not in a way that persists in this context.)

Why didn’t the gains carry over? The authors offer two possibilities:

- “A math treatment might be more effective at fostering school readiness if the games were presented in a way that connects their nonsymbolic mathematical content directly to the mathematical language and symbols used in school.”

- “Nonsymbolic math games training might be more effective if training coincided with children’s learning of formal mathematics rather than preceding that learning.”

What this tells us about impact evaluation. Let’s go back to those two earlier responses to impact evaluation results.

- Sure, that intervention delivered great results in a well-managed pilot. But it doesn’t tell us anything about whether it would work at a larger scale.

- Does this result really surprise you?

Not every impact evaluation result is surprising, but we have to evaluate a wide range of interventions – using theory to guide which interventions within that range – to figure out which expectations will be overturned. Surprising results, whether negative (Training high schools in active teaching pedagogies reduced student learning!) or positive (Providing bicycles improves test scores!), come together with unsurprising results (Throwing laptops into schools doesn’t improve student learning!). And one policy maker’s surprising result is another’s yawn-inducing “duh!” moment, depending on their prior beliefs.

I’ll give the last word to the authors: “Our findings underscore both the promise and the necessity of rigorous testing of reforms to school curricula inspired by basic science, using scalable programs over extended time frames in the environments in which those curricula will be implemented.”

Miscellany

- You can hear three of the authors -- Spelke, Duflo, and Dillon -- talking about their experiment here.

- I noticed that the authors use a “headline” title: “A preschool intervention durably enhances intuitive but not formal mathematics.” Such titles are not very common in economics papers, but this one certainly gets the point across. I wish someone would replicate Weinberger et al.’s analysis of more than one million abstracts, but with titles. Patrick Dunleavy has a nice think piece on improving article titles in social science.
