A new working paper by Blattman, Chaskel, Jamison, and Sheridan evaluates the long-term (10-year) effects of providing cognitive behavioral therapy (CBT), cross-randomized with lump-sum cash transfers at the end of the eight-week intervention, on antisocial behaviors among high-risk men in Liberia. There has been some discussion of the findings in the media, including this opinion piece by one of the authors: the general takeaway seems to have been that low-cost CBT combined with lump-sum economic assistance sustainably reduces antisocial behaviors, such as selling drugs, carrying weapons, disputes and arrests, aggressive behaviors, IPV, etc.

The question I want to take up here is the one that is more interesting, at least to me: whether CBT+cash outperformed CBT alone (we will ignore the cash arm as it pretty much had no discernible effects in the short or the longer run). This is important from a policy perspective because the cash increases the program cost by about 60%, so it’s important to know if it is justified. Furthermore, while one could take the position that “in a country with a robust social safety net, many of these people would be receiving cash anyway, so the effect of CBT alone is less pertinent,” it is not clear (a) that the study participants would be covered by regular safety net programs, and (b) that such programs would provide large one-time lump-sum transfers rather than small monthly payments. As the study is designed to test this question explicitly, as we have more evidence that CBT is promising (as presented by the authors), and as the authors themselves explicitly hypothesize that “…Therapy+cash would be more durable than Therapy Only,” it’s good to focus on that question.

The truth is that neither in the main results nor in the heterogeneity analysis is there any daylight between CBT+cash and CBT Only: as can be seen in Table 3 and Figure 1, at the 10-year follow-up, the index of antisocial behaviors (ASB) is reduced by 0.251 standard deviations (SD) in the former and 0.204 SD in the latter. The difference between these is nowhere near significant (p-value=0.6). Looking at the components of the index, some of them favor CBT+cash while some favor CBT Only. The heterogeneity analysis in Table 4 is even more striking: the authors show that both programs are very effective for people in the top quartile of the ASB index at baseline, i.e., those who were displaying the most antisocial behaviors at baseline. The effects for the remaining groups are negligible in comparison, which is useful for targeting this program in the future. Even when we look at the very nice Table A.12, which presents different ways of creating the index, including a pooled composite, pooled individual questions, and an inverse-covariance weighted index, the results are robust: with pooled individual questions, e.g., the effect sizes are -0.20 SD in both groups. [It is so nice that the authors have this table – I always ask for it in referee reports whenever a paper is using indices for outcomes, which can influence the takeaways depending on how they are constructed.]

So, it is clear that – at least in the main analysis, heterogeneity, and some robustness checks to index construction – CBT Only also had lasting impacts, with effect sizes that are at most slightly smaller than those for CBT+cash and a difference between the two that is never statistically significant. So, where is the takeaway that CBT+cash had lasting effects and CBT alone did not (or might not have) coming from? It’s the attrition…

Table 2 presents attrition from the study sample after four years. The mean lost-to-follow-up rate in the control group is about 17%. However, this is up by about 4 percentage points (pp) in CBT Only and down by roughly the same amount in CBT+cash. These results are not statistically significant, but I have a suspicion that a test of the 8.3-pp difference in average attrition between CBT+cash vs. CBT Only would have a low p-value. In any case, it is a big difference between these two groups…
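To make that suspicion concrete, here is a rough back-of-the-envelope check, not the authors' test. It assumes about 250 people per arm and attrition rates of roughly 21.4% in CBT Only and 13.1% in CBT+cash (my reading of the numbers above: about 17% in control, plus 4.4 pp and minus 3.9 pp). A simple pooled two-proportion z-test ignores the randomization blocks, covariates, and standard-error adjustments that a regression-based test would use, so treat the output as indicative only.

```python
from math import sqrt, erfc

def two_proportion_z_test(p1, n1, p2, n2):
    """Two-sided z-test for a difference in proportions, using the pooled variance."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail probability of a standard normal
    return z, p_value

# Assumed inputs (approximations read off the post, not taken from the paper's data):
# CBT Only attrition ~21.4%, CBT+cash attrition ~13.1%, ~250 people per arm.
z, p = two_proportion_z_test(0.214, 250, 0.131, 250)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Under these assumed inputs the p-value comes out well below 0.05, which is in line with the hunch that the attrition gap between the two treatment arms is not noise.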

The authors do not interact the treatment indicators with baseline characteristics, so it is not easy to know what types of people left each group (we just see this overall for the entire study population). But column (3) in Table 2, as well as Tables 5 and 6, gives us clues that there may be some differences in the attrition between these two groups: while the differences are never statistically significant, there are some more deaths in CBT Only, and more of those deaths seem to be violent. The absolute numbers behind these differences are really in the teens (as each group has about 250 people in it, with a study population at baseline of 999), but this matters, as the robustness checks will be manipulating exactly such small numbers of people.

Just as a side note here, since the authors will be doing extensive Kling-Liebman type of attrition bounds next (discussed below), it is less important that they convince us there are no issues with attrition in the study. In fact, they probably want to tell us that there are issues, as this is exactly what leads to the relatively brighter highlight on CBT+cash than CBT Only. This is always a good takeaway for attrition analysis in RCTs: if you will do pretty much assumption-free bounds (as Kling-Liebman bounds more or less are), the attrition table does not have to convince the reader that differences (in levels and characteristics) are balanced across study arms. However, it is still useful to know these differences, as sometimes they become important – as they do here.

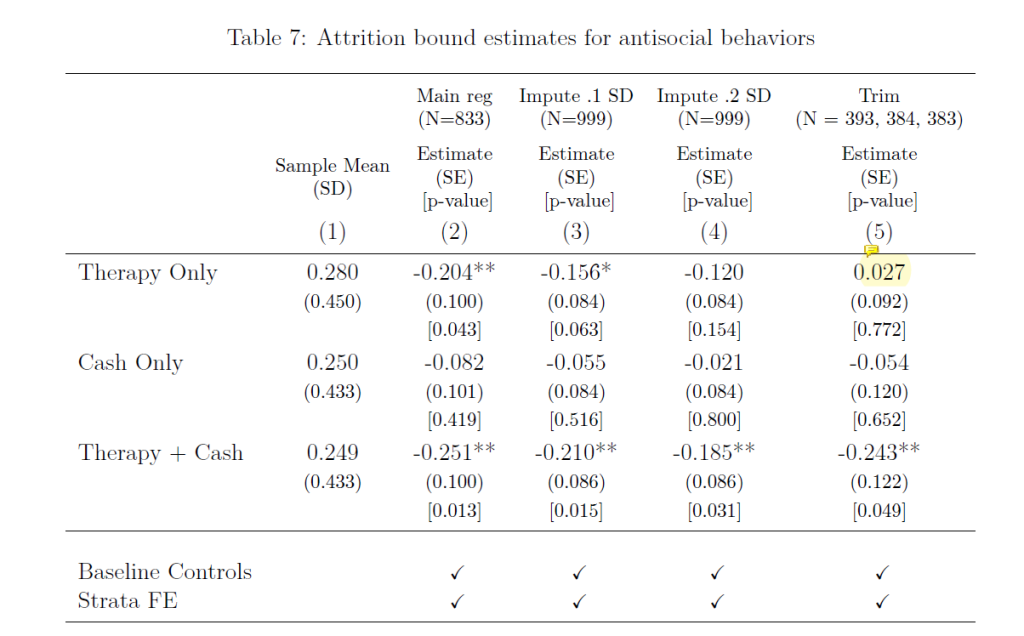

Table 7 presents the bounding exercise. To remind everyone, what Kling-Liebman bounds do is fill in the missing outcome values for those lost to follow-up in a way that tries to make the impacts (if any) go away: what if the people missing in the control group had better outcomes, while those missing in the treatment group had worse? When you do this by, say, as the authors do (column 3), adding 0.1 SD to control outcomes and subtracting the same from treatment outcomes, you open up a 0.2 SD gap between attritors in these groups. You can then do 0.2 SD (column 4), opening up a 0.4 SD gap, etc. The only assumption you’re making is that the gaps would not be larger than the ones you tried. This seems reasonable, but you can keep going and let the reader decide.
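For readers who have not run this kind of exercise, here is a minimal sketch of the imputation logic on simulated data. Everything in it is hypothetical: the data, the sample sizes, and the function name `bounded_effect` are mine, and the real exercise is regression-based rather than a raw difference in means. The sketch is written for an index where lower is better (like ASB), so "worse" for treated attritors means a higher imputed value and "better" for control attritors means a lower one.

```python
import random
from statistics import mean, pstdev

def bounded_effect(treat_found, ctrl_found, n_treat_missing, n_ctrl_missing, delta_sd):
    """Treatment-minus-control difference in means (in control SDs) after imputation.

    Each attritor gets the found mean of their own arm, shifted by delta_sd
    control-group SDs in the direction that works against a beneficial
    (negative) effect on a lower-is-better index.
    """
    sd = pstdev(ctrl_found)
    treat_fill = mean(treat_found) + delta_sd * sd  # treated attritors assumed worse off
    ctrl_fill = mean(ctrl_found) - delta_sd * sd    # control attritors assumed better off
    treat_all = list(treat_found) + [treat_fill] * n_treat_missing
    ctrl_all = list(ctrl_found) + [ctrl_fill] * n_ctrl_missing
    return (mean(treat_all) - mean(ctrl_all)) / sd

# Simulated, hypothetical outcomes: 250 people per arm, with 35 and 43 lost to follow-up.
random.seed(0)
ctrl_found = [random.gauss(0.0, 1.0) for _ in range(207)]
treat_found = [random.gauss(-0.25, 1.0) for _ in range(215)]

for delta in (0.0, 0.1, 0.2):
    es = bounded_effect(treat_found, ctrl_found, 35, 43, delta)
    print(f"delta = {delta:.1f} SD -> effect = {es:+.3f} SD")
```

In this simplified version, each 0.1 SD step moves the estimate toward zero by 0.1 times the sum of the two arms' attrition shares, so an arm with more attrition loses its effect faster as the assumed gap widens.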

What happens when the authors do this is that, as expected, the effect sizes (ES) start getting smaller: -0.21 vs. -0.16 (col. 3) and -0.185 vs. -0.120 (and no longer significant at the 10% level; col. 4) for CBT+cash and CBT Only, respectively. There is a bit more daylight between the ES of the two groups now, but the difference is still nowhere near statistical significance. Still no sign of the smoking gun that led to the main takeaway…

[Before we get to that, however, remember that one could do this another way: since the worrying difference in attrition is between the two treatment groups, one could do the same exercise with the two of them: maybe the young men who died violent deaths in the CBT Only group were getting their lives together while those in CBT+cash were not. Or, vice versa. That exercise would likely produce statistically significant differences between the two groups – going both ways. It is not super interesting from an evidence standpoint here, but important to keep in mind from a methods perspective that you can do this a bunch of ways with more than one treatment arm…]

Well, the whole thing, IMHO, hinges on column 5 of Table 7. Here, what the authors do is a trimming exercise: for CBT+cash, attrition is lower than in the control group by 3.9 pp. So, what if we make attrition the same by dropping the CBT+cash observations with the lowest ASB index scores, such that the attrition rate is equalized in the two groups? These are the so-called Lee bounds (I have written about them here). When the authors do this, the point estimate for CBT+cash declines very little (from -0.25 to -0.24). But, when they do this for CBT Only, the ES crashes (from the original -0.201) to +0.027! What happened?

There are two important things to notice here. First, since the attrition rate in CBT Only is larger than in the control group, we are no longer removing observations with the lowest ASB scores from treatment: we are, rather, removing observations with the highest ASB scores from the control group. Notice how this is asymmetrical to the process for CBT+cash. Second, and perhaps as importantly, the huge change in the ES for CBT Only comes from dropping nine (or at most 10, due to unknown rounding) observations from the control group: that is a big shake-up from such a small change. The implication must be that while the lowest scores are not very low in CBT+cash (such that dropping them did not alter the ES), the top-ten highest scores must be very high in the control group, such that its mean ASB index declines hugely (by 0.23 SD or so, if my calculations are correct).
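How high is "very high"? A quick piece of arithmetic gives a sense of scale. If the control group has N found observations and dropping the k highest lowers its mean by Δ, then the dropped observations must sit, on average, Δ × (N − k) / k above the original mean. The sketch below assumes about 207 found control observations (roughly 250 × 0.83, my approximation) and treats the ES as a simple difference in means, which the paper's regression-adjusted estimates are not.

```python
def implied_excess_of_dropped(n_found, k_dropped, mean_shift):
    """Average distance above the original mean of the k dropped observations.

    Follows from: new_mean = (n * m - k * m_dropped) / (n - k), with
    m - new_mean = mean_shift, which gives m_dropped - m = mean_shift * (n - k) / k.
    """
    return mean_shift * (n_found - k_dropped) / k_dropped

# Assumed inputs: ~207 found control observations, a 0.23 SD drop in the control mean.
for k in (9, 10):
    excess = implied_excess_of_dropped(207, k, 0.23)
    print(f"k = {k}: dropped observations average {excess:.2f} SD above the control mean")
```

Under these assumptions the nine or 10 trimmed control observations would have to average roughly 4.5 to 5 SD above the control mean, which is the kind of tail that deserves a closer look.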

This is where I think some forensics would have been nice and why a more detailed Table 2 on attrition would have been useful: what are the characteristics of the people who are dropping out in each group? Are they meaningfully different from each other, as seems plausible given the numbers of deceased and the causes of death in each group? More importantly, here is the best column 6 the authors could add: what happens to the ES for CBT+cash if the same nine or 10 control observations with the highest ASB scores are dropped? It has to be that the effect is now close to 0 and insignificant, just as it is in the CBT Only group.
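The logic behind that hypothetical column 6 can be sketched on simulated data. The data, arm sizes, and helper names below are all made up for illustration, and the ES is a plain difference in means scaled by the untrimmed control SD, which is a simplification of what the paper estimates. The point is only mechanical: if both treatment arms are compared with the same trimmed control group, both estimates move by the same amount.

```python
import random
from statistics import mean, pstdev

def trim_highest(values, k):
    """Drop the k largest values, as in Lee-style trimming of the control group."""
    return sorted(values)[: len(values) - k]

def effect_size(treat, ctrl, sd):
    """Treatment-minus-control difference in means, in units of sd."""
    return (mean(treat) - mean(ctrl)) / sd

# Simulated, hypothetical outcomes for the found samples in each arm.
random.seed(1)
control = [random.gauss(0.0, 1.0) for _ in range(207)]
cbt_cash = [random.gauss(-0.25, 1.0) for _ in range(215)]
cbt_only = [random.gauss(-0.20, 1.0) for _ in range(196)]

sd = pstdev(control)                        # scale fixed at the untrimmed control SD
control_trimmed = trim_highest(control, 9)  # drop the nine highest control scores

for name, arm in (("CBT+cash", cbt_cash), ("CBT Only", cbt_only)):
    before = effect_size(arm, control, sd)
    after = effect_size(arm, control_trimmed, sd)
    print(f"{name}: {before:+.3f} -> {after:+.3f} (shift {after - before:+.3f})")
```

With a common trimmed control group, the shift is identical across arms by construction, so any remaining gap between CBT+cash and CBT Only is just the small gap we started with.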

The takeaway from this really nice and important paper that CBT+cash had lasting effects is technically correct. But it feels like it leaves the story incomplete. From a policy perspective, it’s important to know whether it outperformed CBT Only – in an experiment that was designed to test this. When that hinges primarily on one specification in one robustness-check table, it feels like some nuance about the value of CBT for the long-term welfare of individuals who were at high risk at baseline is lost…
