When people ask about “development impact” they are often referring to the impact of some set of development policies and projects; let’s call it a “development portfolio.” The portfolio of interest may be various things that are (ostensibly) financed by the domestic resources of developing countries. Or it might be a set of externally-financed projects spanning multiple countries—a portfolio held by a donor country or international organization, such as the World Bank.

Yet the bulk of the new evaluative work going on today is assessing the impact of specific projects, one at a time. Each such project is only one component of the portfolio. (And each project may itself have multiple elements.) As evaluators we worry mainly about whether we are drawing valid conclusions about the impact of that project in its specific setting, including its policy environment. The fact that each project happens to be in some development portfolio gets surprisingly little attention.

So an important question is begging: How useful will all this evaluative effort be for assessing development portfolios?

The (possibly big) biases we don’t much talk about

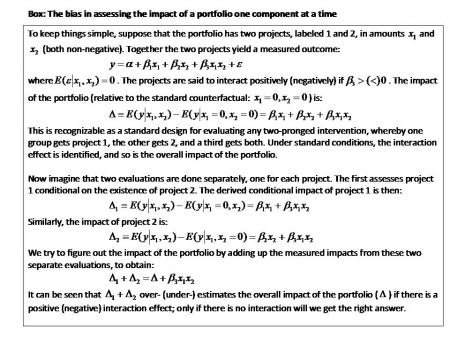

When you think about it, assessing a development portfolio by evaluating its components one-by-one and adding up the results requires some assumptions that are pretty hard to accept. For one thing, it assumes that there are negligible interaction effects amongst the components. Yet the success of an education or health project (say) may depend crucially on whether infrastructure or public sector reform projects (say) within the same portfolio have also worked. Indeed, the bundling of (often multi-sectoral) components in one portfolio is often justified by claimed interaction effects. But evaluating each bit separately and adding up the results will not (in general) give us an unbiased estimate of the portfolio’s impact. If the components interact positively (more of one yields higher impact of the other) then we will overestimate the portfolio’s impact; negative interactions yield the opposite bias (see Box).

For another thing, it assumes that we can either evaluate everything in the portfolio or we can draw a representative sample, such that there is no selection bias in which components we choose to assess. If the components are in fact roughly independent (as implicitly assumed) then ideally we should be evaluating a random sample of all the things in the portfolio, so as to make a valid overall assessment. (When interaction effects are also present one might need to sample differently.) But I have never heard of any development project being randomly sampled for an evaluation.

(Here is a larger version of the box if you can't read the above easily)

I can suggest a number of reasons why our present approach to impact evaluation risks giving us a biased assessment of the impact of a development portfolio. There is both a demand side and a supply side to evaluations. On the demand side, the choices about what gets put up for an evaluation appear to be heavily decentralized and largely un-coordinated. Interaction effects are sometimes studied, but for the most part the components are looked at in isolation. Then we will have little option but to add up the results across multiple evaluations and hope that interaction effects are minimal.

On the supply side, the currently favored tool kit for evaluation relies heavily on methods that are tailored to assigned programs, meaning that some units get the intervention and some do not, and it is assumed that there are negligible spillover effects to mess up the evaluation. Within this class of tools, the current fashion is for randomized control trials (RCTs) and social experiments more generally. These are thought to be very good at removing one of the potential biases, namely that stemming from purposive assignment to the treatment and comparison groups. (Although the claim that even this particular bias is removed by an RCT requires some rather strong behavioral assumptions, as Jim Heckman, Michael Keene and others have emphasized—let alone all the other, generic, concerns, including external validity.)

The problem is that the interventions for which currently favored methods are feasible constitute a non-random subset of the things that are done by donors and governments in the name of development. It is unlikely that we will be able to randomize road building to any reasonable scale, or dam construction, or poor-area development programs, or public-sector reforms, or trade and industrial policies—all of which are commonly found in development portfolios. One often hears these days that opportunities for evaluation are being turned down by analysts when they find that randomization is not feasible. Similarly, we appear to invest too little in evaluating projects that are likely to have longer-term impacts; standard methods are not well suited to such projects, and (just like everyone else) evaluators discount the future.

Another source of bias is the heterogeneity in the political acceptability of evaluations. The (potentially serious) ethical and political concerns about social experiments will have greater salience in some settings than others. The menu of feasible experiments will then look very different in different places, and the menu will probably change over time.

Our knowledge needs to improve in areas where there are both significant knowledge gaps and an a priori case for government intervention. However, it is not clear that we are focusing enough on evaluating the sorts of things that governments need to be doing. As Jishnu Das, Shantayana Devarajan and Jeffrey Hammer have pointed out, it is not clear that RCTs are well suited to programs involving the types of commodities for which market failures are a concern, such as those with large spillover effects in production or consumption. (See their 2009 article, “Lost in Translation,” in the Boston Review.)

For these reasons, I would contend that our evaluative efforts are progressively switching toward things for which certain methods are feasible, whether or not those things are important sources of the knowledge gaps relevant to assessing development impact at the portfolio level. There is a positive “output effect” of the current enthusiasm for impact evaluation, but there is also likely to be a negative “substitution effect.” We appear to be building up our knowledge in a worryingly selective way that is unlikely to compensate for the distortions generating existing, policy-relevant, knowledge gaps—stemming from the combination of decentralized decision making about project evaluation with the inevitable externalities in knowledge generation. (You can read more about this in my article “Evaluation in the Practice of Development.”)

What do we need to do?

There are two sorts of things we need to do to address this problem, though both are likely to meet resistance. The first requires some “central planning” in terms of what gets evaluated. Nobody much likes central planning, but we often need some form of it in public goods provision, and knowledge is a public good. A degree of planning, with judicious use of incentives, could create a compensating mechanism to assure that decentralized decision making about evaluation can better address policy-relevant knowledge gaps to assure that we really do get at development impact. For example, in the early years of the Bank’s Development Impact Monitoring and Evaluation (DIME) initiative, a successful effort was made to promote evaluations of conditional cash transfer programs, which led to the (very good) 2009 Policy Research Report on this topic. Maybe this was just the low-lying fruit, since CCTs are often amenable to currently favored methods, at least at the pilot stage. Nonetheless, this example demonstrates that a degree of planning is possible to assure that pre-identified and policy-relevant knowledge gaps are addressed. The challenge is to apply this idea to development portfolios, typically involving multiple sectors and diverse projects.

Second, we need to think creatively about how best to go about evaluating the portfolio as a whole, allowing for interaction effects amongst its components, as well as amongst economic agents. This is not going to be easy. Standard tools of impact evaluation will have to be complemented by other tools, such as structural modeling and cross-country and cross-jurisdictional comparative work. We will need to look at public finance issues such as fungibility and flypaper effects. And we will almost certainly be looking at general equilibrium effects—big time in some cases!—which can readily overturn the partial equilibrium picture that emerges from standard impact evaluations. There is scope for more eclectic approaches, combining multiple methods, spanning both “macro” and “micro” tools for economic analysis. The tools needed may not be the favored ones by today’s evaluators. But the principle of evaluation is the same, including the key idea of assessing impact against an explicit counterfactual.

If we are serious about assessing “development impact” then we will have to be more interventionist about what gets evaluated and more pragmatic and eclectic in how that is done.

Martin Ravallion is Director of the World Bank’s Development Research Group.

Join the Conversation