Last week the “State of Economics, State of the World” conference was held at the World Bank. I had the pleasure of discussing (along with Martin Ravallion) Esther Duflo’s talk on “The Influence of Randomized Controlled Trials on Development Economics Research and on Development Policy”. The conference website should have links to the papers and a replay of the video stream up (if not already, then soon).

The first part of Esther’s talk traced out the growth in RCTs in development economics. She pointed out that in 2000 the top-5 journals published 21 articles in development, of which 0 were RCTs, while in 2015 they published 32, of which 10 were RCTs. So 10 of the 11 additional development papers were RCTs: pretty much all the growth in development papers in top journals comes from RCTs. She also showed that the more recently BREAD members had received their PhD, the more likely they were to have done at least one RCT.

In my discussion I expanded on these facts to put them in context, and to argue against what I see as two straw man arguments: 1) that top journals only publish RCTs, and that RCTs have taken over development research; and 2) that young researchers have a “randomize or bust” attitude and refuse to do anything but RCTs. I thought I’d summarize what I said on both here.

Have RCTs taken over development research?

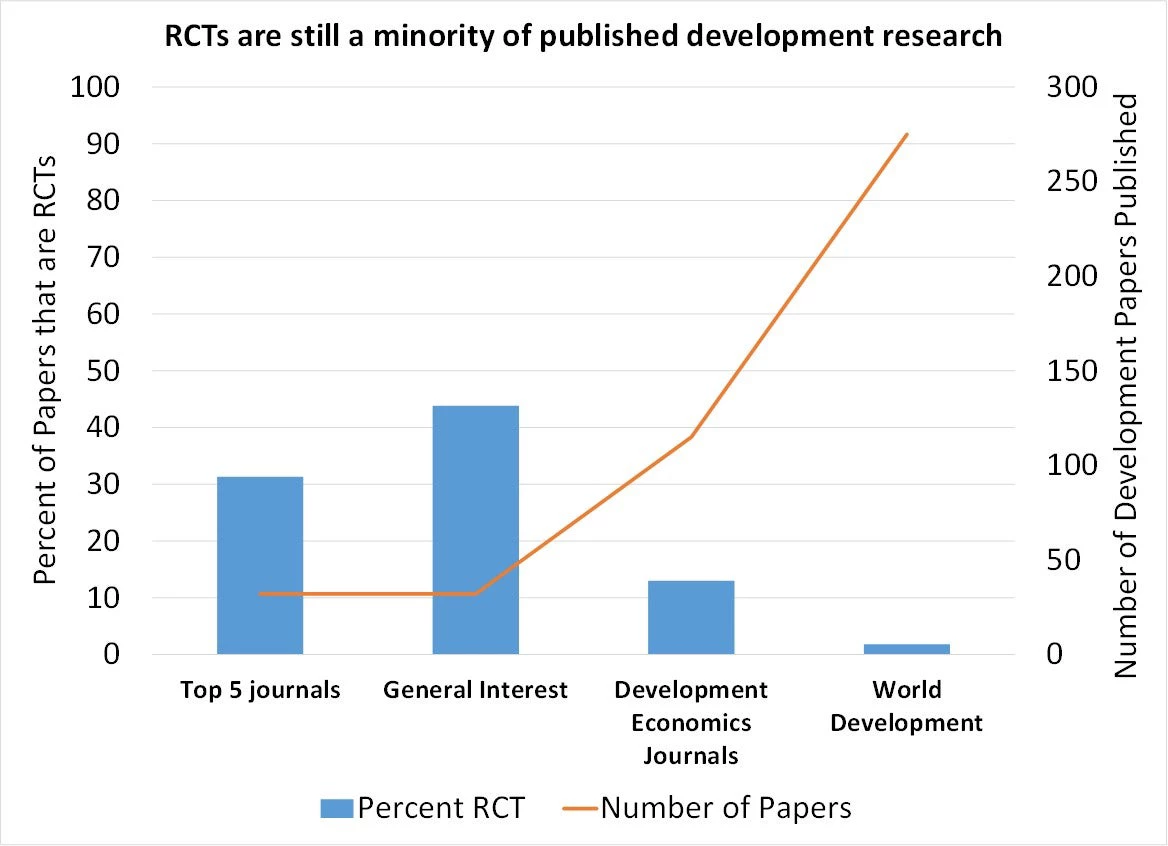

I also looked at development articles published in the next tier of good general interest journals (AEJ Applied, EJ, and ReStat), at the three leading development economics journals (JDE, EDCC and WBER), and at World Development. This resulted in the graph shown here, which gives, for each group of journals, the proportion of development articles that were RCTs in 2015, as well as the total number of development papers published in 2015.

We see that RCTs make up a much higher proportion of the development papers published in general interest journals than of those published in development journals. However, even in the general interest journals RCTs are a minority: non-RCT development papers outnumber RCTs there too. Moreover, since most development papers are published in field journals, RCTs are a small percentage of all development research: of the 454 development papers published in these 14 journals in 2015, only 44 were RCTs (and this count includes a couple of lab-in-the-field experiments). As a result, policymakers looking for non-RCT evidence have no shortage of research to choose from.
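The shares behind this comparison are simple ratios of the counts quoted in this post. The short Python sketch below computes them for the top-5 journals and for all the journals examined. The function and dictionary names are just illustrative; the counts are the 2015 figures reported above.

```python
def rct_share(n_dev_papers, n_rcts):
    """Fraction of development papers in a group of journals that are RCTs."""
    return n_rcts / n_dev_papers


# 2015 counts as reported in the post: (development papers, of which RCTs).
COUNTS_2015 = {
    "top-5 general interest journals": (32, 10),
    "all 14 journals examined": (454, 44),
}

for group, (n_dev, n_rct) in COUNTS_2015.items():
    share = rct_share(n_dev, n_rct)
    print(f"{group}: {n_rct} of {n_dev} development papers are RCTs ({share:.1%})")
```

Roughly three in ten development papers in the top-5 journals were RCTs, but fewer than one in ten across the full set of journals.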

Randomize or bust?

Another claim is that the “best and brightest talent of a generation of development economists [has] been devoted to producing rigorous impact evaluations” about topics that are easy to randomize, and that these researchers take a “randomize or bust” attitude, turning down many interesting research questions if they can’t randomize.

To explore this, I examined the publication records of the 65 BREAD affiliates (this is the group of more junior members), restricting attention to the 53 researchers who received their PhD in 2011 or earlier (to give them time to have published). The median researcher had published 9 papers, and the median share of their papers that were RCTs was 13 percent. Focusing on the subset who have published at least one RCT, the mean (median) percent of their published papers that are RCTs is 35 percent (30 percent), and the 10th to 90th percentile range is 11 to 60 percent. So young researchers who publish RCTs also write and publish papers that are not RCTs. Indeed, this is also true of Esther and her co-authors on this paper (Abhijit Banerjee and Michael Kremer): although they are known as the leaders of the “randomista” movement, none of the three has an RCT among their top-cited papers.
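To make these per-researcher statistics concrete, here is a minimal Python sketch of how they could be computed from publication records. It is an illustration under stated assumptions, not the procedure actually used for the BREAD numbers: the `Researcher` record and its field names are hypothetical, the six example researchers are made up (they are not BREAD data), researchers with no publications are dropped so that shares are defined, and the 10th and 90th percentiles use the "inclusive" method of Python's `statistics.quantiles`, which is only one of several percentile conventions.

```python
from dataclasses import dataclass
from statistics import mean, median, quantiles


@dataclass
class Researcher:
    # Hypothetical record layout: PhD year plus counts of published papers.
    phd_year: int
    n_papers: int
    n_rcts: int


def rct_share_summary(researchers, latest_phd_year=2011):
    """Summarize how much of each researcher's published output is RCTs.

    Keeps researchers with a PhD in or before `latest_phd_year` and at
    least one published paper (assumption, so every share is defined).
    """
    sample = [r for r in researchers
              if r.phd_year <= latest_phd_year and r.n_papers > 0]
    # Share of each researcher's papers that are RCTs.
    shares = [r.n_rcts / r.n_papers for r in sample]
    # Same share, restricted to researchers with at least one published RCT.
    rct_shares = [r.n_rcts / r.n_papers for r in sample if r.n_rcts > 0]
    # Deciles; 'inclusive' interpolates within the observed range.
    # Python versions before 3.13 require at least two data points here.
    deciles = quantiles(rct_shares, n=10, method="inclusive")
    return {
        "n_researchers": len(sample),
        "median_papers": median(r.n_papers for r in sample),
        "median_rct_share": median(shares),
        "mean_share_if_any_rct": mean(rct_shares),
        "median_share_if_any_rct": median(rct_shares),
        "p10_p90_if_any_rct": (deciles[0], deciles[-1]),
    }


# Made-up example records, for illustration only.
EXAMPLE = [
    Researcher(2008, 9, 1),
    Researcher(2010, 10, 0),
    Researcher(2009, 8, 2),
    Researcher(2011, 12, 6),
    Researcher(2007, 5, 0),
    Researcher(2014, 3, 3),  # dropped: PhD after the 2011 cutoff
]

print(rct_share_summary(EXAMPLE))
```

Reporting both the mean and the median among RCT publishers, along with the 10th to 90th percentile range, shows that even researchers who have published RCTs typically devote most of their output to other kinds of work.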

--------------------------------------------

The rest of Esther’s talk is also well worth watching or reading. She discusses how RCTs have influenced the practice of development research (arguing, among other things, that they have raised the standards of non-experimental work and led to innovations in measurement), and how they have influenced development policy (using USAID’s Development Innovation Ventures as an example).
