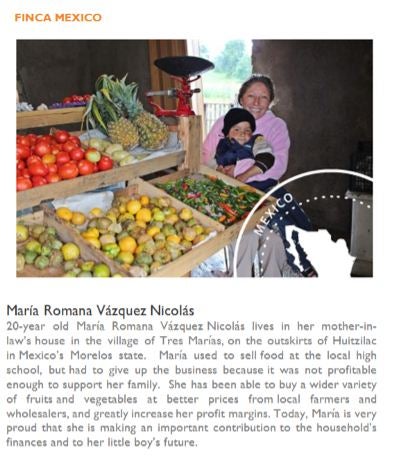

There is arguably little that makes development economists sharpen their fangs like the use of tear-jerking, heart-warming, credit-card-mobilizing anecdotes by development NGOs to support impact claims. In an informal conversation about NGO websites at a recent conference, Paul Niehaus half-jokingly suggested that a good use of graduate research assistant time might be a compilation of the top 25 most egregious “impact” webpages, based purely on narratives of outliers by (well-meaning) non-profits. If nothing else, it would serve as an excellent tool for teaching undergraduates about causal effects while massaging the egos of development economists by reminding us there is at least ONE reason we have value to the rest of society.

But why is narrative so often used in place of good data in impact claims? There are at least two reasons. The first is well-known: a lack of understanding of causal effects, or in some cases, an unwillingness to submit a program to rigorous evaluation. The second is more interesting: a good narrative soundly beats even the best data. Economists and scientists of every ilk need to digest what for many is an unpleasant fact: In the battle for hearts and minds of human beings, narrative will consistently outperform data in its ability to influence human thinking and motivate human action. And if we fail to grasp this fact, even the best impact evaluations that generate the best counterfactuals, with the most statistically efficient estimations, and the most thoughtfully crafted standard errors are likely to inspire less real change in policy and behavior…than someone else’s really good story.

The reason is that the human brain has difficulty connecting emotionally with data, even an expert analysis of data. And it is emotion that typically produces the motivation necessary to elicit active response. This is unfortunate news for development economists, but it has been demonstrated by psychologist Paul Slovic in a series of papers (2007, 2008, 2010), in which he uses the term “psychic numbing” for the way in which people behaviorally ignore data overload. In these studies he and co-authors demonstrate that people generate sympathy toward an identifiable victim of poverty or war, with whom they can more readily identify, but fail to generate sympathy toward statistical victims. As a result, even the most convincing analysis of data often fails to create change.

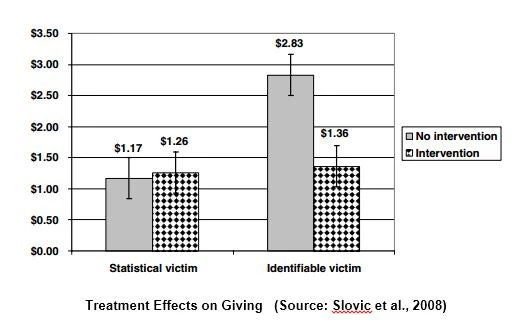

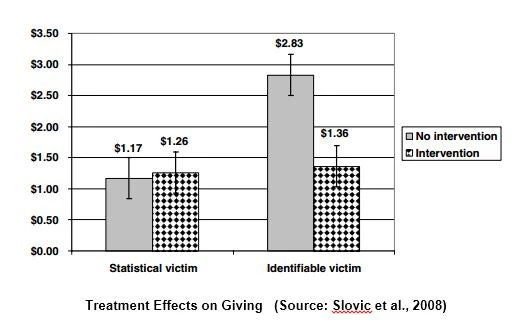

They discovered this in an experiment in which subjects were offered the opportunity to contribute up to $5 to Save the Children. Half the subjects received a message with factual information taken from the Save the Children website describing poverty conditions for millions of affected individuals in Sub-Saharan Africa (the statistical victim). The other half received the story of one impoverished girl in Mali, along with her picture (an identifiable victim). Subjects shown the identifiable victim gave $2.83 on average, while subjects shown the statistical victim gave $1.17. The researchers then embedded a cross treatment, in which they presented half the subjects in each of the previous two treatments with the following text:

“We’d like to tell you about some research conducted by social scientists. This research shows that people typically react more strongly to specific people who have problems than to statistics about people with problems. For example, when ‘‘Baby Jessica’’ fell into a well in Texas in 1989, people sent over $700,000 for her rescue effort. Statistics—e.g., the thousands of children who will almost surely die in automobile accidents this coming year—seldom evoke such strong reactions.”

The result, as seen in the figure, was that subjects dramatically decreased giving to the identifiable victim, but unfortunately gave little more to the statistical victim.

Narrative, and the personalization of truth in the broader sense, appears to influence behavior more strongly than even very convincing data. Consider the foot-dragging by many in instituting change in the face of mountains of data supporting human impact on global climate change. In contrast, the “Crying Indian” public service announcements radically changed American behavior regarding public litter and pollution. Frankly, climate change needs a Crying Indian, because narrative represents a tremendously powerful force for collective action, for good or for ill.

Narrative has displayed considerable power to create vigorous movements in microeconomic development. The number of clients served by microfinance grew from 13 million to over 200 million between 1997 and 2012--not because researchers had carefully demonstrated positive impacts--but largely due to the wide appeal of a compelling narrative of entrepreneurialism among the poor, buoyed by thousands of inspiring anecdotes. Everyone embraced this narrative, on the left, the right, the center. Recently RCT impact data has contributed to a waning enthusiasm for microfinance, but arguably no more so than narratives of over-indebtedness and abusive threats by microfinance debt collectors.

Narrative is also a powerful vehicle for communicating esoteric concepts. Some of the most influential economic models--Nobel Prize-winning models--such as Akerlof’s use of the “market for lemons” to explain the consequences of information asymmetries, and Diamond’s “coconut model” explaining multiple equilibria in the unemployment rate, have been presented in the context of narrative or parable. Indeed the tremendous impact of these models on the way we think about economic life may stem from their ability to harness story to convey abstract truth. Both theory and empirics benefit from narrative.

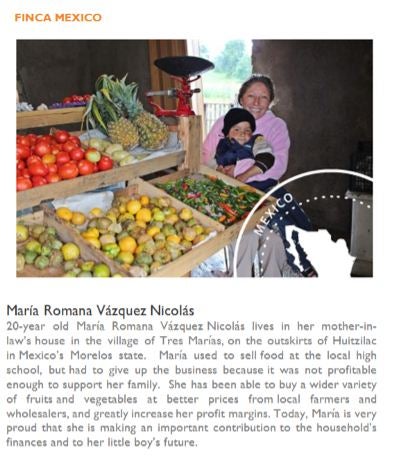

Development economists need to become more skillful at weaving narrative, story, and parable in and around their empirical work. But how can we incorporate the power of narrative into our impact research papers? Our impact studies will themselves have a bigger impact if we learn to incorporate narrative into the presentation of our research. The issue, then, is not narrative vs. data, but the distinction between “biased narratives,” which promote a misleading view of an average treatment effect on the treated (ATT), and “unbiased narratives,” which help a consumer of our research better grasp what we present as an unbiased estimate of the ATT.

I want to suggest one particular tool that I will call the “median impact narrative,” which (though not precisely the average--because the average typically does not factually exist) recounts the narrative of one or a few of the middle-impact subjects in a study. So instead of highlighting the outlier, Juana, who has built a small textile empire from a few microloans, we conclude with a paragraph describing Eduardo, who after two years of microfinance borrowing has dedicated more hours to growing his carpentry business and used microloans to weather two modest-size economic shocks to his household: his wife’s illness and the theft of some tools. If one were to choose the subject for the median impact narrative rigorously, it could involve choosing the treated subject whose realized impacts lie closest to the estimated ATTs, where distance is Euclidean distance after weighting the impact variables by the inverse of the variance-covariance matrix (in effect, a Mahalanobis distance).
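The selection rule just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the two outcome variables, the impact numbers, and the ATT values are all made up, and each row is assumed to hold some per-subject impact measure (for instance, a subject's change from baseline net of the control group's average change), since an individual's true treatment effect is never observed directly. The covariance-weighted distance used here is what statisticians usually call the Mahalanobis distance.

```python
import math

def covariance_matrix(rows):
    """Sample variance-covariance matrix of the columns of `rows`."""
    n, k = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(k)]
    return [[sum((r[a] - means[a]) * (r[b] - means[b]) for r in rows) / (n - 1)
             for b in range(k)] for a in range(k)]

def solve(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    k = len(rhs)
    aug = [list(matrix[i]) + [rhs[i]] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("variance-covariance matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, k):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, k + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * k
    for i in reversed(range(k)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, k))
        x[i] = (aug[i][k] - tail) / aug[i][i]
    return x

def median_impact_subject(impacts, att):
    """Return (index, distance) of the subject closest to the ATT vector.

    Distance is sqrt(diff' * inverse(cov) * diff), where cov is the
    variance-covariance matrix of the impact variables.
    """
    cov = covariance_matrix(impacts)
    best_index, best_dist = None, math.inf
    for i, row in enumerate(impacts):
        diff = [y - t for y, t in zip(row, att)]
        weighted = solve(cov, diff)  # equals inverse(cov) @ diff
        dist = math.sqrt(sum(d * w for d, w in zip(diff, weighted)))
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index, best_dist

# Hypothetical per-subject impacts: [extra hours worked per week,
# change in monthly income]. The last row is an outlier like "Juana".
impacts = [
    [1.0, 5.0],
    [2.0, 1.0],
    [3.0, 4.0],
    [4.0, 2.0],
    [5.0, 6.0],
    [12.0, 30.0],
]
att = [3.2, 4.5]  # hypothetical estimated ATTs for the two outcomes

index, distance = median_impact_subject(impacts, att)
print(f"median-impact subject: row {index}, distance {distance:.3f}")
```

With these invented numbers the rule picks the third subject (row index 2), whose impacts sit almost exactly at the estimated ATTs, while the outlier in the last row lies farthest away. In practice one would probably use a numerical library for the covariance and linear solve; plain Python is used here only to keep the sketch self-contained.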

Consider, for example, the “median impact narrative” of the outstanding 2013 Haushofer and Shapiro study of GiveDirectly, a study finding an array of substantial impacts from unconditional cash transfers in Kenya. The median impact narrative might recount the experience of Joseph, a goat herder with a family of six who received $1,100 in five electronic cash transfers. Joseph and his wife both have only two years of formal schooling and have always struggled to make ends meet with their four children. At baseline, Joseph’s children went to bed hungry an average of three days a week. Eighteen months after receiving the transfers, his goat herd had increased by 51%, bringing added economic stability to his household. He also reported a 30% reduction in his children going to bed hungry in the period before the follow-up survey, and a 42% reduction in the number of days his children went completely without food. Tests of his cortisol indicated that Joseph experienced a reduction in stress of about 0.14 standard deviations relative to the same difference in the control group. This kind of narrative about the median subject from this particular study cements a truthful image of impact in the mind of a reader.

A false dichotomy has emerged between the use of narrative and data analysis; either can be misleading or helpful in conveying truth about causal effects. As researchers begin to incorporate narrative into their scientific work, this practice will begin to create a standard for the appropriate use of narrative by non-profits, making it easier to insist that narratives present an unbiased picture that represents a truthful image of average impacts.

Bruce Wydick is Professor of Economics at the University of San Francisco and author of the new development economics novel, The Taste of Many Mountains (Thomas Nelson/HarperCollins, 2014), a fiction narrative based on fieldwork related to the recent study of de Janvry, McIntosh, and Sadoulet on the impact of fair trade coffee.
