Today, I cover two papers from two ends of the long publication spectrum – a paper forthcoming in the Review of Economic Studies on the effect of decriminalizing indoor prostitution on rape and sexually transmitted infections (STIs), and a working paper that came out a few days ago on the effect of police wearing cameras on use of force and civilian complaints. While both papers are from the U.S.A., each has something to teach us about methods and policies in development economics. I devote space to each paper proportional to the time it has been around…

The first paper is by Scott Cunningham and Manisha Shah, who are proud editors of the Oxford Handbook of the Economics of Prostitution (full disclosure: I have a chapter in this handbook, co-authored with Sarah Baird). Cunningham and Shah (forthcoming) is a brilliant paper that cleverly takes advantage of an unanticipated law change on prostitution, cobbles together a huge amount of data on the composition of the indoor sex markets, rape statistics, and gonorrhea incidence using traditional and novel data sources (think Yelp for sex workers and massage parlors), and uses state-of-the-art causal inference techniques to tell a convincing story.

The event that creates the natural experiment in the decriminalization of indoor prostitution is the discovery that a 1980 amendment to Rhode Island’s law governing (prohibiting) sex work had actually left a gaping loophole for indoor prostitution while attempting to crack down on street prostitution, a loophole that went unnoticed for more than two decades. The details are hazy, but in my mind the scene plays like this: John Turturro is a public defender, who takes the case of a massage parlor whose employees are arrested and charged with loitering with the purpose of street prostitution. In the made-for-TV climax in the courtroom, a friend/legal aide (played by Danny DeVito) runs in at the last minute with the text of the amended law and whispers to Turturro re: the long-forgotten language in § 11-34 of the General Laws of Rhode Island. When Turturro asks the judge to dismiss all charges because the law does not apply to massage parlors, the judge has no choice but to agree. The case gets a fair amount of media attention and indoor prostitution is de facto legal in Rhode Island for the next six-something years until, that is, it is recriminalized – roll credits...

What is nice about this for Cunningham and Shah, other than its being a fascinating story, is that it satisfies all you want in a shock: it is unanticipated; it is unlikely to be correlated with any other policy changes; and it has bite (as I will discuss in a minute). Hence, the exogenous change in policy in 2003 (23 years after the amendment!)…

Before talking methods and findings, what would we expect to see from such a policy shift? The authors argue that the theory is clear that decriminalization would increase the size of the indoor sex market (perhaps by shifting some activity from the still-illegal street market) and change its composition. The effects on rape and STIs, however, are ambiguous: more sex work may increase both, but composition effects may actually reduce them if safer workers and clients enter the market and there is more safe sex on the intensive margin (and more reporting of violence now that police can extract fewer rents from sex workers).

But… Rhode Island (RI) is just one state with no within-variation in policy: so, what is the counterfactual for Rhode Island? The authors start with all other states of the U.S. and run a difference-in-differences (DD) regression (pre- vs. post-2003 X RI vs. rest of the US). Since the asymptotic assumptions for DD required for proper inference are not satisfied (because there is only one treated unit), the authors have to conduct inference using a variant of Fisher’s (1935) permutation, or randomization, test. I find that many people ask me how one can do a DD regression when there are only one or a few units treated (and many untreated): this is how. David has written about doing randomization inference in Stata here. Figure 1 in the paper shows you how it works: this is a demanding test because if RI is not in the top or bottom two among the 50 states of the US, the two-tailed test is not going to be significant at the 10% level (ranking second from a tail implies a p-value of 4/50 = 0.08, while ranking third already implies 6/50 = 0.12). In other words, among all the placebo treatments, RI pretty much needs to be standing by itself in the relevant tail of the distribution. And, nicely for everyone, it does exactly that for the outcomes under examination here: Table 2 shows that “massage” provision increases and prices come down…

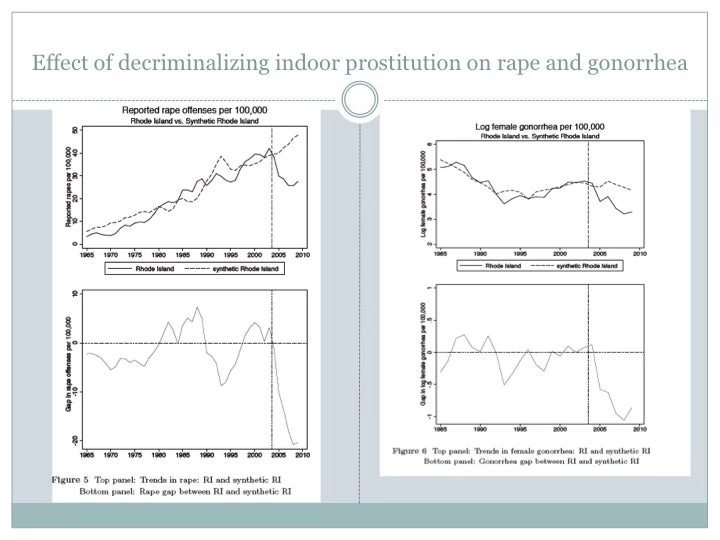

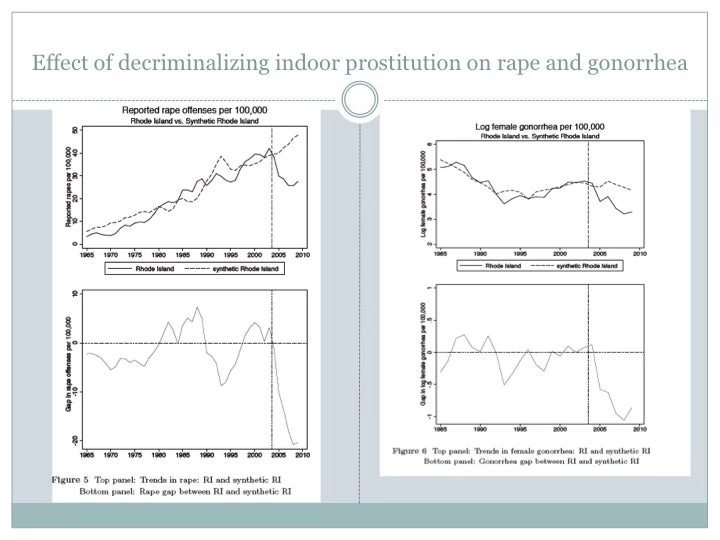
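To make the placebo logic above concrete, here is a minimal sketch in Python. It is not the authors' code: the panel is simulated, the function names are my own, and the DD estimate is a simple difference in pre/post means rather than their regression. The idea is just to assign the "treatment" to each state in turn, collect the placebo estimates, and see where the truly treated unit ranks.

```python
import random

def pre_post_change(series, cutoff):
    """Mean outcome from `cutoff` onward minus mean outcome before it."""
    pre = [y for year, y in series if year < cutoff]
    post = [y for year, y in series if year >= cutoff]
    return sum(post) / len(post) - sum(pre) / len(pre)

def dd_estimate(panel, unit, cutoff):
    """DD with one 'treated' unit: its change minus the average change elsewhere."""
    own = pre_post_change(panel[unit], cutoff)
    rest = [pre_post_change(s, cutoff) for u, s in panel.items() if u != unit]
    return own - sum(rest) / len(rest)

def rank_p_value(rank_from_nearest_tail, n_units):
    """Two-tailed p-value implied by a unit's rank counted from the closer tail."""
    return min(1.0, 2 * rank_from_nearest_tail / n_units)

def placebo_test(panel, treated, cutoff):
    """Assign the treatment to every unit in turn and locate the real one."""
    estimates = {u: dd_estimate(panel, u, cutoff) for u in panel}
    actual = estimates[treated]
    rank_top = sum(e >= actual for e in estimates.values())
    rank_bottom = sum(e <= actual for e in estimates.values())
    return actual, rank_p_value(min(rank_top, rank_bottom), len(panel))

def simulate_panel(n_units=50, effect=10.0, seed=0):
    """Hypothetical data: unit 'RI' gets `effect` added from 2003 on."""
    rng = random.Random(seed)
    panel = {}
    for i in range(n_units):
        name = "RI" if i == 0 else f"state_{i}"
        base = rng.gauss(50, 5)
        panel[name] = [
            (year, base + rng.gauss(0, 1)
             + (effect if name == "RI" and year >= 2003 else 0.0))
            for year in range(1999, 2009)
        ]
    return panel

panel = simulate_panel()
estimate, p = placebo_test(panel, "RI", 2003)
print(f"DD estimate: {estimate:.2f}, placebo p-value: {p:.2f}")
# The arithmetic behind "top or bottom two": compare ranks 2 and 3 out of 50.
print(rank_p_value(2, 50), rank_p_value(3, 50))
```

With only 50 units the smallest attainable two-tailed p-value is 2/50, which is why the treated unit has to sit essentially alone in the tail.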

An alternative method is synthetic control, which is a generalization of the DD framework. Instead of including all US states (and the District of Columbia), the counterfactual is now a convex combination of a subset of them, i.e. a weighted average with non-negative weights that sum to one. The method is more data intensive than DD, requiring repeated observations of the units in the pre-intervention period, which helps with the robustness of the method against unobserved heterogeneity. After extending their data to go back as far as 1985 (1965 for rape and STIs), the authors show us the synthetic control findings. The results speak for themselves*:

The authors find convincing evidence of an intervention that has a strong first stage, meaning that the law change bites: the indoor sex market grows and prices go down. This then has knock-on effects: there is a large decrease in reported rapes and gonorrhea infections in the general population (not just among sex workers and their clients). Given the costs of these outcomes to society and the public health implications of the findings that extend beyond just gonorrhea (such as HIV transmission – directly from the policy change and indirectly), the savings from decriminalization look quite large, indeed. These savings are of course balanced by the fact that prostitution is morally repugnant for some individuals. There is also the concern that it may increase human trafficking.

Some of the most interesting ideas in the paper, for which the authors can only provide suggestive evidence, have to do with the pathways. For example, rapes may have gone down partly because men who are on the margin between rape and prostitution may choose the latter when it becomes cheaper and more available, i.e. the legal sex market may be a substitute for some men who would otherwise commit acts of gender-based violence. Similarly, for gonorrhea, the authors provide suggestive evidence that the composition of the sex market became safer after decriminalization in RI: the percentage of transactions by Asian workers roughly doubles after 2003, almost catching up to the share of White workers. It turns out that Asians also have the lowest rates of gonorrhea in RI by a country mile. So, the pool of sex workers may have gotten safer. Other data suggest that massage parlors mainly provide manual stimulation, which is safer than vaginal or anal sex, both of which also decrease in Cunningham and Shah’s own data.

The story does not come with happy endings, which also happens to be the name of a documentary about the efforts to re-criminalize indoor sex work in RI that finally succeeded in 2009. The authors’ DD analysis does not find symmetric effects of re-criminalization in RI, which likely speaks to the fact that, this time, the policy change was completely anticipated.

* The only thing that gives me slight hesitation with this analysis is how the “synthetic RI” is composed of completely different states of the US for each outcome (see Table 10): for rapes, RI looks like the Dakotas and New Hampshire, while for gonorrhea, it looks like Louisiana and Montana. There is no rule that synthetic controls should be stable across outcomes, just that it would soothe me if they were…
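For readers who want to see what "a convex combination of donor states" means mechanically, and why the weights can differ by outcome, here is a small sketch. It is an illustration under loud assumptions, not the authors' procedure: the donor series are made-up numbers, the function names are mine, it matches only on pre-period outcomes (no covariates), and it finds the weights by brute force over subsets of donors instead of the quadratic programming used in practice, so it only works for a handful of donors.

```python
from itertools import combinations

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting; None if singular."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-12:
            return None
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def loss(target, donors, weights):
    """Sum of squared pre-period gaps between the target and the weighted donors."""
    return sum(
        (y - sum(w * donors[name][t] for name, w in weights.items())) ** 2
        for t, y in enumerate(target)
    )

def synthetic_weights(target, donors):
    """Best convex combination of donors, found by brute force over supports."""
    best, best_loss = None, float("inf")
    names = list(donors)
    for size in range(1, len(names) + 1):
        for support in combinations(names, size):
            *free, ref = support
            # Substitute w_ref = 1 - sum(free weights), then solve least squares.
            z = {j: [a - r for a, r in zip(donors[j], donors[ref])] for j in free}
            gap = [y - r for y, r in zip(target, donors[ref])]
            gram = [[sum(p * q for p, q in zip(z[i], z[j])) for j in free] for i in free]
            rhs = [sum(p * g for p, g in zip(z[i], gap)) for i in free]
            v = solve(gram, rhs)
            if v is None:
                continue
            weights = dict(zip(free, v))
            weights[ref] = 1.0 - sum(v)
            if min(weights.values()) < -1e-9:
                continue  # a negative weight means this is not a convex combination
            current = loss(target, donors, weights)
            if current < best_loss:
                best, best_loss = weights, current
    return {name: best.get(name, 0.0) for name in names}

# Hypothetical pre-period series for three donor states (made-up numbers).
donors = {
    "A": [10, 11, 12, 13, 14, 15],
    "B": [20, 18, 19, 17, 18, 16],
    "C": [40, 42, 39, 45, 41, 44],
}
# Two made-up outcomes for the treated state, built to resemble different donors.
outcome_1 = [0.7 * a + 0.3 * b for a, b in zip(donors["A"], donors["B"])]
outcome_2 = [0.5 * b + 0.5 * c for b, c in zip(donors["B"], donors["C"])]
for label, target in [("outcome 1", outcome_1), ("outcome 2", outcome_2)]:
    weights = synthetic_weights(target, donors)
    print(label, {name: round(w, 3) for name, w in weights.items()})
```

Because the weights are chosen to fit each outcome's own pre-period path, nothing ties the donor pool for one outcome to the donor pool for another, which is exactly what Table 10 shows.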

...

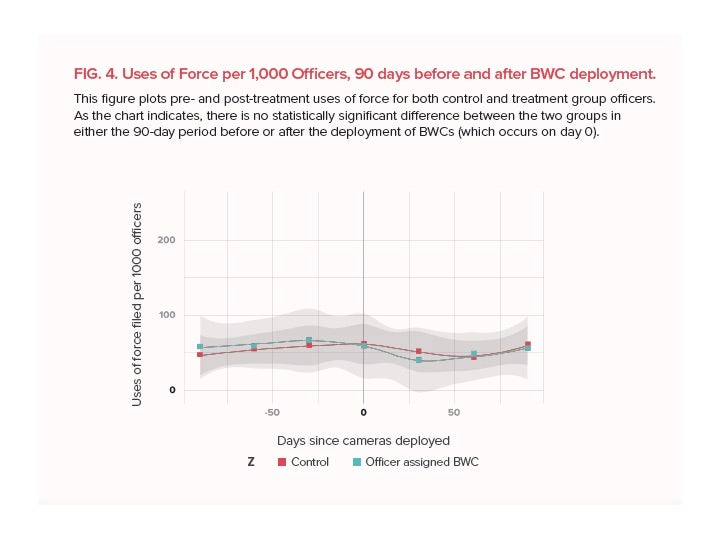

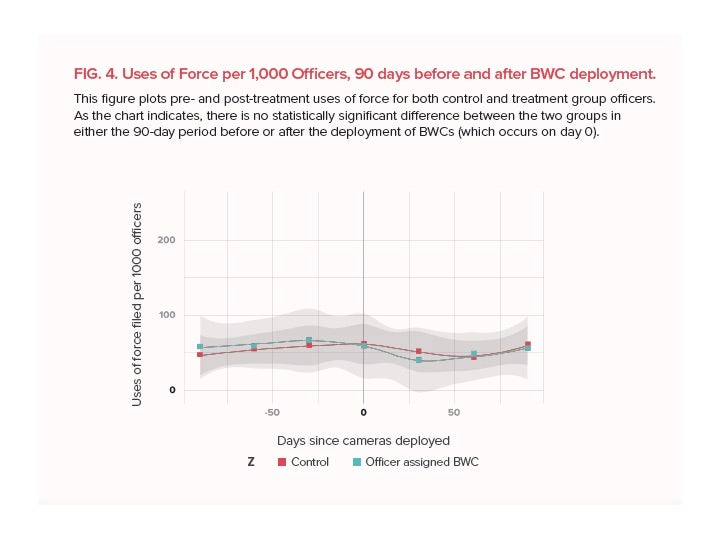

The other paper I want to briefly touch on is about the effect of body-worn cameras (BWCs) on police in Washington, DC, by Yokum, Ravishankar, and Coppock. The New York Times covered it here and you can find a write-up by the authors here. Officers from 7 districts of the DCPD were block randomized into wearing body cameras or not. The authors found no effects on any outcome of interest, such as complaints or use of force – despite the fact that the police in the treatment group used the cameras and made many more videos:

My quibble with the study is with its design, and not its findings. The time trend above suggests that the authors got lucky, in the sense that their interpretation of the finding of no effects seems supported by the fact that there is no change in outcomes in either group after the introduction of cameras.

But, what if we had seen a significant downtick in both groups? Would we have said “no effect” then or would we have been much more equivocal? This is a case, unlike the Rhode Island case discussed above, where (a) the introduction of the cameras was anticipated; (b) spillovers within blocks are more than likely (with the direction of resulting bias ambiguous); and (c) there was already a large discussion of policing practices across the country. So, even though the assignment is random and the sample size is large, worries about SUTVA violations are first order here. The authors may well have had no choice but to individually randomize in a real-world, high-stakes situation. And, this is a carefully conducted study with great authors, a pre-analysis plan, etc. I am inclined to believe “these” findings. But, some pure control clusters sure would have gone a long way to improve study design and, therefore, confidence… Plus, perhaps variation in intensity of treatment within clusters.

When I aired these concerns over Twitter, one of the authors engaged nicely and wrote a little thread of responses to FAQs that you can read here. Among his points is the very reasonable one that bystanders’ phone recordings may have already had such a large effect on the conduct of police that the BWCs did not have any marginal effect. A different kind of SUTVA violation…

Finally, Chris Blattman also chimed in on Twitter saying that people should judge papers by sample size first and sample size second. I understand the sentiment re: small sample sizes and the high probability of chance findings, but I disagree. Study design is equally important, if not more so, here: a pilot study that treated a much smaller number of officers could have shown different effects – perhaps closer to the estimand that we care about the most: the one without any spillovers.

If we think spillovers are important for policy at scale, then we need a cluster-randomized design, where treatment intensity is randomized (or fixed at …). See this paper for a formal treatment of these so-called “randomized saturation” designs.
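As a rough illustration of what such a design looks like in practice, here is a minimal two-stage assignment sketch in Python. Everything in it is hypothetical (the district and officer labels, the saturation levels, the function name): clusters are first randomized to a treatment saturation, including 0% for pure control clusters, and individuals are then randomized to treatment within each cluster at that rate.

```python
import random
from collections import Counter

def randomized_saturation(clusters, saturations, seed=0):
    """Two-stage assignment: clusters draw a saturation, then members are
    treated at that rate.

    clusters: dict mapping cluster id -> list of unit ids
    saturations: list of treatment shares; 0.0 gives pure control clusters
    """
    rng = random.Random(seed)
    ids = list(clusters)
    rng.shuffle(ids)  # stage 1: random order of clusters
    assignment = {}
    for k, cid in enumerate(ids):
        share = saturations[k % len(saturations)]  # balanced across arms
        members = list(clusters[cid])
        rng.shuffle(members)  # stage 2: random order within the cluster
        n_treated = round(share * len(members))
        for rank, unit in enumerate(members):
            assignment[unit] = {
                "cluster": cid,
                "saturation": share,
                "treated": rank < n_treated,
            }
    return assignment

# Hypothetical example: 6 districts of 10 officers, three saturation arms.
districts = {f"district_{i}": [f"d{i}_officer_{j}" for j in range(10)] for i in range(6)}
design = randomized_saturation(districts, [0.0, 0.5, 1.0], seed=1)
cells = Counter((row["saturation"], row["treated"]) for row in design.values())
for (share, treated), count in sorted(cells.items()):
    print(f"saturation={share:.1f} treated={treated}: {count} officers")
```

Comparing untreated officers in partially treated clusters with officers in pure control clusters is what lets one estimate spillovers, while variation in saturation across clusters speaks to how effects change with treatment intensity.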
