“Test and punish”?

There’s a debate raging in American schools today: how (and how much) should children be tested?

The No Child Left Behind (NCLB) Act created a system in which all children in all schools in grades 3 to 8 must be tested each year. Critics refer to this accountability architecture as “test and punish,” because stakes such as school funding (or closings!), teacher bonuses, and student grade promotion all ride on test performance. There is evidence that NCLB improved learning outcomes, but the improvements came at a high cost: in addition to teaching to the test, this approach can create a number of perverse incentives, such as keeping weaker students at home on test day, narrowing the curriculum, or outright cheating. Worse, some argue that such high-stakes tests can mask, and even contribute to, structural race and class inequalities in the United States.

What does this mean for education systems in low- and middle-income countries? These systems often face a combination of weak institutions, limited managerial capacity among school directors, high levels of poverty and inequality, and powerful teacher unions. In such contexts, high-stakes accountability interventions may be neither feasible nor desirable. Instead, a shared-responsibility approach that emphasizes the diagnostic use of standardized tests might be a more effective way to improve student learning.

But in the absence of testing, educators are flying blind

Few countries have gone as far down the test-based accountability path as some American states. In fact, in developing countries the opposite is more likely to be true: teachers, school directors, and education authorities lack timely data on how students are performing. This was the case in Argentina, which under the previous government was an outlier in how little it used student achievement data. As a result, provincial ministries of education were unaware of how bad things had become and uncertain whether learning levels were, on the whole, improving or worsening. At the school level, teachers most likely had their suspicions, but they lacked robust data on which parts of the curriculum students struggled with most and which students needed the most support.

Can communicating test results – by itself – improve learning?

In light of this situation, the education authorities in the Argentine province of La Rioja asked for technical support in developing and rolling out a provincial student assessment that would let schools get a sense of how students were performing over time. Capacity and budget constraints meant the test could be rolled out to only 105 schools. To test whether giving teachers, school directors, and education authorities information from test results can, in and of itself, improve student performance, we randomly assigned schools to one of three groups: (a) a first treatment group, in which we administered standardized tests in math and reading comprehension at baseline and at two follow-ups, then made the results available to schools four months later (“diagnostic feedback”); (b) a second treatment group, in which we did the same and also provided support to train principals and teachers (“capacity building”); and (c) a control group, in which we administered standardized tests only at the second follow-up. A detailed description of the intervention, including the instruments and datasets, is available on the project’s website.
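The random assignment step can be illustrated in a few lines of Python. This is a minimal sketch, not the study’s actual procedure: the function name `assign_arms`, the school IDs, the seed, and the equal 35/35/35 split are all hypothetical, and the real experiment may have used stratification or unequal group sizes.

```python
import random
from collections import Counter

def assign_arms(school_ids, arms, seed):
    """Randomly assign each school to one study arm.

    Schools are shuffled with a seeded RNG (so the draw is reproducible)
    and then dealt into the arms in rotation, which keeps group sizes as
    equal as possible.
    """
    rng = random.Random(seed)
    shuffled = list(school_ids)
    rng.shuffle(shuffled)
    return {school: arms[i % len(arms)] for i, school in enumerate(shuffled)}

# Hypothetical arm labels and school IDs; the equal three-way split and the
# seed are illustrative assumptions, not the study's actual allocation.
ARMS = ["diagnostic_feedback", "capacity_building", "control"]
schools = [f"school_{n:03d}" for n in range(1, 106)]  # 105 schools
assignment = assign_arms(schools, ARMS, seed=42)

print(sorted(Counter(assignment.values()).items()))
# [('capacity_building', 35), ('control', 35), ('diagnostic_feedback', 35)]
```

Fixing the seed makes the draw reproducible, so anyone can re-run it and confirm which schools landed in which group; because assignment depends only on chance, any later difference in scores between groups can be attributed to the intervention rather than to how schools were chosen.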

We saw large impacts in a short period of time.

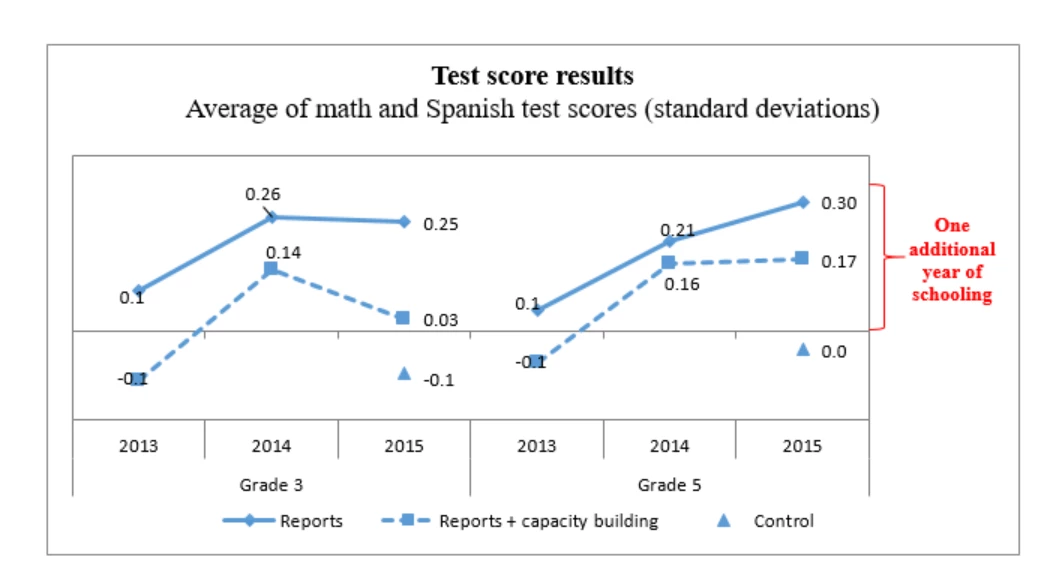

As shown in our working paper “Teaching with the test. Experimental evidence on diagnostic feedback and capacity-building for public schools in Argentina,” after two years schools in the diagnostic feedback group outperformed control schools by between 0.28 and 0.38 of a standard deviation, depending on the grade and subject tested. By some estimates, effects of this size mean that treatment schools are performing as much as one academic year ahead of control schools. Given that most of the gains appeared after the first year of the intervention, this is a large impact in a short time.
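To make “0.28 of a standard deviation” concrete: in its simplest form, an effect size in standard-deviation units is the gap between the treatment and control mean scores divided by the spread of control-group scores. The sketch below assumes this simple definition, using the control group’s sample standard deviation; the function name and the scores are hypothetical, and the paper’s own estimates come from its econometric specification, which this does not reproduce.

```python
from statistics import mean, stdev

def standardized_effect(treatment_scores, control_scores):
    """Difference in mean test scores, in control-group standard deviations.

    This is a raw comparison of means; it ignores the covariates and
    clustering adjustments a full impact evaluation would typically use.
    """
    control_mean = mean(control_scores)
    control_sd = stdev(control_scores)  # sample standard deviation
    return (mean(treatment_scores) - control_mean) / control_sd

# Toy scores for illustration only; these are not data from the study.
control = [40, 50, 60]    # mean 50, sample SD 10
treatment = [44, 53, 62]  # mean 53
print(standardized_effect(treatment, control))  # 0.3
```

With these toy numbers, a 3-point gap on a test whose control-group scores have a standard deviation of 10 points works out to 0.3 of a standard deviation. Expressing effects this way lets results be compared across grades, subjects, and tests with different scoring scales.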

Schools that received capacity building also outperformed control schools in fifth grade, but not in third, and performed on par with schools that received diagnostic feedback. On closer inspection, the weaker effect of capacity building is explained by lower participation in workshops and fewer school visits than planned. Notably, we did detect a positive learning effect in schools whose principals actually attended the sessions.

Why did student learning increase so quickly?

The paper explores two mechanisms. First, we hypothesize that more data improves school management. Principals in treatment schools used student assessment data to track their school’s performance and to compare it with that of other schools in the province.

Second, we hypothesize that diagnostic feedback could improve classroom management. Students in treatment schools reported that their teachers engaged in a number of activities (e.g., grading homework, asking questions, explaining a topic) more frequently than teachers in control schools. What is more, these teachers did not just do more; they also noticeably improved the quality of their interactions with students (being nice to students, giving them more time to explain their ideas, or pushing them to try their best) compared with teachers in control schools.

Perhaps knowing that they were being monitored also pushed principals and teachers to exert more effort. Most likely, a combination of these and other mechanisms explains the impressive learning gains in just one year. Equally important as what is driving the results, however, is what we know is not driving them: high stakes.

- Was the test data used to evaluate teachers? No.

- Was the test data used to rank schools? No.

- Was the test data used to close or otherwise punish poorly performing schools? No.

- Was the test data used to incentivize/reward high performing schools, teachers, or students? No.

In light of the experience in the United States, we also tested for “strategic behavior” on the part of schools. We found no evidence that teachers kept weaker students at home on test day, nor that familiarity with test items had a significant effect on results (which would have indicated “teaching to the test”).

In sum, the information from the tests was used for teaching (what we call “teaching with the test”), not for punishment. This information was all schools needed to help students achieve remarkable learning gains in just one year. What’s more, these gains are much larger than those found in similar interventions that attached stakes to testing. In this case, then, the optimal option, no-stakes testing, was also the most politically palatable: a rare example of technical and political solutions aligning for maximum impact.

Maria Cortelezzi and Maria Jose Vargas contributed to this blog.
