Anna Crespo is an Economist Senior Specialist at the Inter-American Development Bank’s Office of Evaluation and Oversight.

Development agencies are in a privileged position to encourage a better understanding of the results of programs and the channels through which those results are best achieved. This, in turn, improves their own ability to sponsor effective interventions. This is the rationale behind the increasing use of impact evaluations: they can isolate and measure the effect of an intervention. In addition, methodological advances and greater availability of high-quality data have broadened the types of questions these evaluations can answer. But could impact evaluations go beyond their well-known role in contributing to knowledge and accountability, to a much broader role of supporting policy makers in executing projects?

The Inter-American Development Bank (IDB) is no newcomer to the use of impact evaluations. In fact, its first step towards their use dates back 20 years, when the government of Mexico sought IDB’s support for the first evaluation of its conditional cash transfer program. After this experience, the IDB understood the power of this new tool and started to urge that similar programs in its borrowing countries include an impact evaluation in their monitoring and evaluation arrangements. As time passed, the organization gradually set up different instruments to promote the use of impact evaluations. This has resulted in hundreds of evaluations being proposed over the years.

What have we learned from conducting so many evaluations? The IDB’s Office of Evaluation and Oversight (OVE) decided to take stock of the organization’s experience so far (the full report is here). Rather than focusing on the results and the learning opportunities from each evaluation, OVE focused on the lessons that could be learned from conducting evaluations in organizations like the IDB. By reviewing all operations approved between 2006 and 2016, including technical assistance, OVE identified 531 proposed impact evaluations. Among them, fewer than 20% (94) had been completed and a little over half (286) were still ongoing and potentially concluding in the next five years. Among the completed and ongoing ones, a clear and steep learning curve could be identified; i.e., over time IDB has increased its capacity and engaged in better-quality evaluations, which are resulting in more robust knowledge pieces.
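The shares quoted above can be checked with a few lines of arithmetic. The sketch below uses only the counts reported in the text (531 proposed, 94 completed, 286 ongoing). The `other` category is an assumption made here for illustration: it is simply the remainder, which the text does not report as a single count.

```python
# Counts reported in the text above.
proposed = 531
completed = 94
ongoing = 286

# Assumption: everything that is neither completed nor ongoing is grouped
# as "other" (cancelled, on hold, etc.). The text gives no explicit count.
other = proposed - completed - ongoing


def share(count, total=proposed):
    """Return count as a percentage of total, rounded to one decimal."""
    return round(100 * count / total, 1)


shares = {
    "completed": share(completed),
    "ongoing": share(ongoing),
    "other": share(other),
}
print(shares)
```

The completed share comes out just under 18% and the ongoing share just under 54%, in line with "fewer than 20%" and "a little over half". The remainder is a little over 28% of all proposed evaluations.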

However, knowledge comes not only from successfully completed evaluations: the organization has also learned a lot from the large share of cancelled evaluations, which make up about 30% of the cases. When the loans and technical cooperations were not themselves cancelled, the leading causes for cancelling an evaluation appear to have been political challenges (30%) and implementation and design issues (34%). Political challenges go beyond the capacity of IDB to address and are usually related to changes in governments and the focus of policies. However, many lessons have been drawn from issues with implementation and design. For instance, we know that having a well-defined method ex ante is associated with a lower probability of cancellation. Currently, no operation with an impact evaluation is approved unless the proposed evaluation method meets minimum quality standards. In addition, IDB now more often seeks earlier engagement with authorities in the field, ensuring that they fully understand the costs and benefits of committing to the impact evaluation and agree with the necessary steps, thereby avoiding unnecessary midcourse adjustments.

Since IDB impact evaluations are heavily financed through loans, cost has been a real concern among borrowing countries, and a reason for cancellations as well. While 57% of the evaluations had budgets below US$250,000, some evaluations can be very costly: 9% of them had budgets above US$1 million. The main determinant of cost is the nature of the data used, rather than the methodology applied. In response, since 2011 more evaluations have used administrative data and the number of randomized controlled trials (RCTs) proposed has been declining, which has reduced costs. The average cost of an impact evaluation based only on administrative data is US$74,000, compared to US$468,000 for those that require data collection; these figures are similar to what is suggested in the literature and observed in other organizations. Other determinants of cost include the degree of dispersion of the beneficiaries and the country.

Going beyond knowledge: can impact evaluations support implementation?

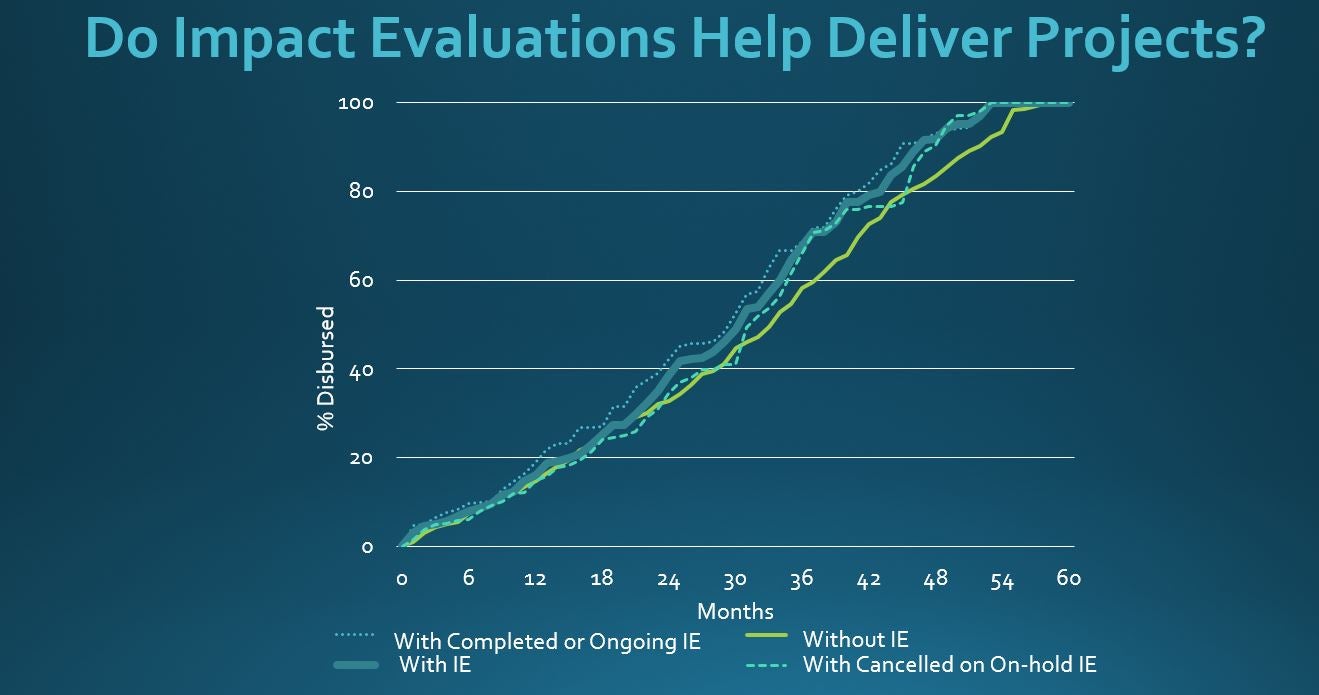

One would imagine that there would be less concern about costs and higher political commitment to impact evaluations if they were thought of not only as a tool for learning, but also as a tool for project implementation. A recent study from the World Bank’s Development Impact Evaluation (DIME) group argues that impact evaluations, when properly planned and implemented, can actually help in delivering projects. The authors of the study used information from World Bank projects to show that those with an accompanying impact evaluation disburse faster. They attribute this finding to several factors. First, impact evaluations could lead to better planning and evidence-based design. Second, they can increase implementation capacity through training and the support of the research team and field staff. Third, the process of preparing impact evaluations should increase the amount of data available for policy decisions. Finally, an “observer effect and motivation” may arise, as impact evaluations can generate expectations about the results of the interventions.

OVE’s study replicated some of the analyses done by DIME, using information from all IDB investment operations approved between 2009 and 2016. While OVE’s study is a much simpler version, due to data constraints, similar evidence was found when we measured the difference in disbursement rates between projects with and without impact evaluations. There are some drawbacks to this type of analysis, which are discussed in the paper, and no causality can be attributed, but it is intended to be informative about the potential of impact evaluations for speeding up projects.
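At its simplest, the comparison described above is a difference in average disbursement rates between two groups of projects. The sketch below illustrates that calculation only. The function name and the numbers are hypothetical placeholders, not IDB data and not OVE's actual method, and, as noted above, a raw gap of this kind says nothing about causality.

```python
from statistics import mean


def disbursement_gap(with_ie, without_ie):
    """Difference in mean disbursement rate (share of the loan disbursed)
    between projects with and without an impact evaluation."""
    return mean(with_ie) - mean(without_ie)


# Hypothetical disbursement rates at a fixed point in the project's life,
# expressed as fractions of the approved amount. Illustrative values only.
rates_with_ie = [0.40, 0.50, 0.60]
rates_without_ie = [0.30, 0.40, 0.50]

gap = disbursement_gap(rates_with_ie, rates_without_ie)
print(f"Gap in mean disbursement rate: {gap:.2f}")
```

A positive gap means that, in this made-up sample, projects with an impact evaluation had disbursed a larger share of their funds at the chosen point in time.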

OVE’s study found that while loans with and without impact evaluations show no significant differences in ex-ante expected duration, projects with an impact evaluation have faster disbursements after the second year and are completed three months earlier. One potential explanation for this result is that projects with impact evaluations are better designed, as the process of preparing such projects seems to be more involved and takes an average of 300 more IDB staff hours to complete. Also, projects with an impact evaluation seem to be more mature when they reach approval, taking less time to reach eligibility; i.e., to begin disbursing once the project has been approved.

In addition, by comparing projects with impact evaluations and those whose impact evaluations have been cancelled or are on hold, OVE found a significant difference. In fact, projects with cancelled or on-hold impact evaluations behave similarly to those that did not propose an impact evaluation to begin with. This may be an indication that impact evaluations play a role in the field while they are being conducted.

A better understanding of the channels through which impact evaluations contribute to project implementation could help establish them as a policymaking instrument in their own right.

Development agencies are in a privileged position to encourage a better understanding of the results of programs and the channels through which those results are better obtained. This, in turn, improves their own ability to sponsor effective interventions. This is the rationale behind the increasing use of impact evaluations: they can isolate and measure the effect of an intervention. In addition, methodological advances and greater availability of high-quality data have allowed for an increase in the types of questions these evaluations can answer. But could impact evaluations go beyond their well-known role in contributing to knowledge and accountability, to a much broader role of supporting policy makers in executing projects?

The Inter-American Development Bank (IDB) is no newbie on the use of impact evaluations. In fact, its first step towards their use dates back 20 years, when the government of Mexico sought IDB’s support for the first evaluation of its conditional cash transfer program. After this experience, the IDB understood the power of this new tool and started to urge that similar programs in its borrowing countries include an impact evaluation on their monitoring and evaluation arrangements. As time passed, the organization slowly set different instruments to promote the use of impact evaluations. This has resulted in hundreds of evaluations being proposed over the years.

What have we learned from conducting so many evaluations? The IDB’s Office of Evaluation and Oversight (OVE) decided to take stock of the organization’s experience so far ( full report is here). Rather than focusing on the results and the learning opportunities from each evaluation, OVE’s focus was on the lessons that could be learned from conducting evaluations in organizations like the IDB. By reviewing all operations approved between 2006 and 2016, including technical assistances, OVE identified 531 proposed impact evaluations. Among them, less than 20% (94) had been completed and a little over half (286) were still ongoing and potentially concluding in the next five years. Among the ones completed and ongoing, a clear - and steep - learning curve could be identified; i.e., over time IDB has increased its capacity and engaged in better quality evaluations, which are resulting in more robust knowledge pieces.

However, knowledge is not only obtained from the successfully completed evaluations: the organization has also learned a lot from the large share of cancelled evaluations, which comprise about 30% of the cases. Among the leading causes for cancelling an evaluation, when the loans and technical cooperations were not themselves cancelled, appear to have been political challenges (30%) and implementation and design issues (34%). Political challenges go beyond the capacity of IDB to address and are usually related to changes in governments and the focus of policies. However, many lessons have been drawn from issues with implementation and design. For instance, we know that having an ex-ante well-defined method is associated with a lower probability of cancellation. Currently no operation with an impact evaluation is approved unless the evaluation method proposed meets minimum quality standards. Also, more often IDB has sought an earlier engagement with authorities in the field, ensuring they have full understanding of the costs and benefits of committing to the impact evaluation and are in full agreement with the necessary steps, therefore avoiding unnecessary midcourse adjustments.

Since IDB impact evaluations are heavily financed through loans, cost has been a real concern among borrowing countries, and a reason for cancelations as well. While 57% of the evaluations had budgets below US$250,000, some evaluations can be very costly and 9% of them had budgets above US$1 million. The main determinant is the nature of the data used, rather than the methodology applied. In response, since 2011 more evaluations have used administrative data and the number of RCTs proposed have been declining, decreasing costs. The average cost of an impact evaluation based only on administrative data is US$74,000, compared to US$468,000 for those for which data are collected, which are similar to what is suggested in the literature and observed in other organizations. The main determinants of costs include the degree of dispersion of the beneficiaries and the country.

Going beyond knowledge: can impact evaluations support implementation?

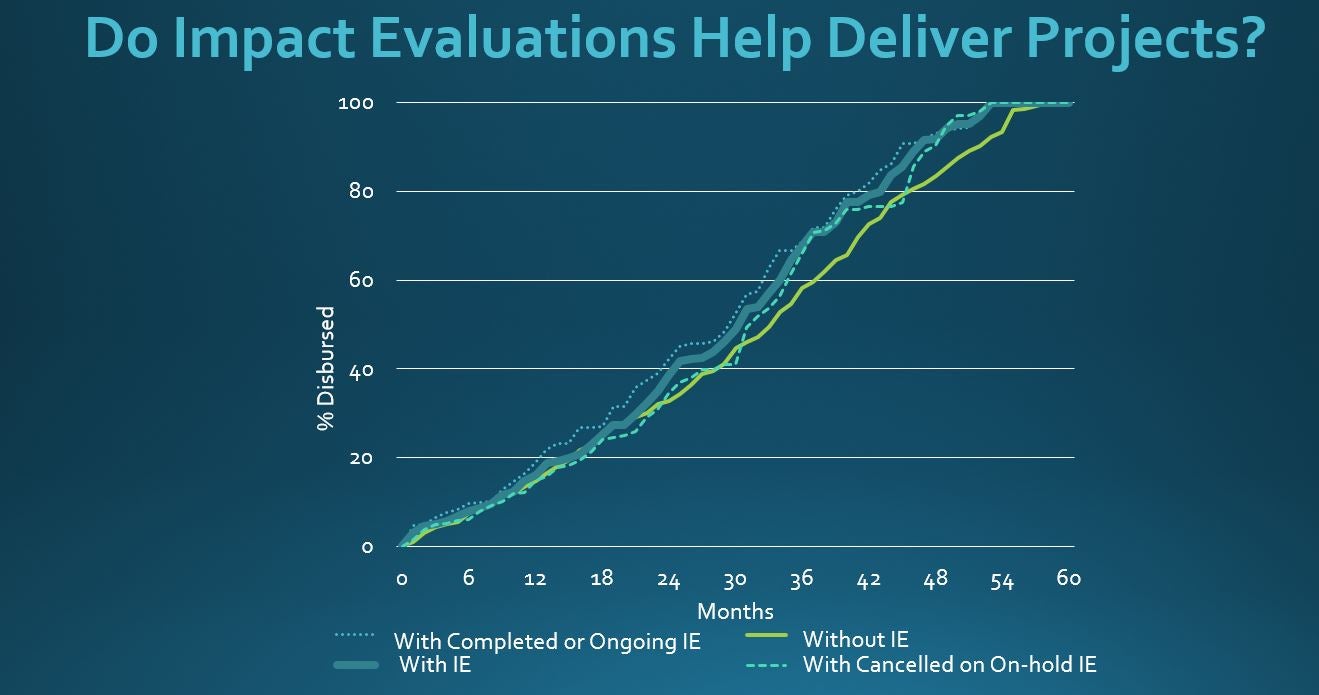

One would imagine that there would be less concern about costs and higher political commitment to the impact evaluations if they were not only thought of as a tool for learning, but rather as a tool for project implementation. A recent study from DIME argues that impact evaluations, when properly planned and implemented, can actually help in delivering projects. The authors of the study used information from World Bank projects to show that those with an accompanying impact evaluation disburse faster. They attribute this finding to several factors. First, impact evaluations could lead to better planning and evidence-based design. Second, they can increase implementation capacity through training and the support of the research team and field staff. Third, the process of preparing impact evaluations should increase the amount of data for policy decisions, and finally, “observer effect and motivation” may arise, as impact evaluations can generate expectations about the results of the interventions.

OVE’s study replicated some of the analyses done by DIME, using information from all IDB investment operations approved between 2009 and 2016. While OVE’s study is a much more simplified version, due to data constraints, similar evidence was found as we measure the difference in disbursement rates of projects with and without impact evaluations. There are some drawbacks to this type of analysis - discussed in the paper - and no causality can be attributed, but it is intended to be informative in relation to the potential of impact evaluations for speeding up projects.

OVE’s study found that while loans with and without impact evaluations have no significant differences in the ex-ante expected duration, projects with an impact evaluation have faster disbursements after the second year and are completed three months earlier. One potential explanation for this result is that projects with impact evaluations are better designed, as the process of preparing such projects seems to be more involved and takes on an average of 300 more IDB staff hours to be completed. Also, projects with an impact evaluation seem to reach approval in a more mature way, taking less time to reach eligibility; i.e. to begin disbursing once the project has been approved.

In addition, by comparing projects with impact evaluations and those whose impact evaluations have been cancelled or are on hold, OVE found a significant difference. In fact, projects with cancelled impact evaluations or impact evaluations that are on hold behave similarly to those that did not propose an impact evaluation to begin with. This may be an indication that the impact evaluations can play a role in the field as they are being conducted.

A better understanding of the channels through which impact evaluations contribute to project implementation could help establish them as a policy making instrument in their own right.
