Imagine that you receive a grant from the Bill & Melinda Gates Foundation to give money transfers to families living in poverty. However, the selected country does not have formal income records, so you decide to collect income information through a household survey. On your way to collect the information, you meet an economist who points out two problems: (1) income can be measured with a lot of noise; and (2) individuals may have incentives to under-report income in order to participate in the program.

The economist suggests that, instead of asking about income, you could ask about assets. The idea is simple: you can use information on assets to build an index that distinguishes poor from non-poor households. For example, a family that owns a car, a refrigerator, and a washing machine is likely to be less poor than a family that owns none of these items. More importantly, asset questions are thought to reduce under-reporting and measurement error. Or at least, this seems to be the consensus among those who use information on assets to identify families in poverty.

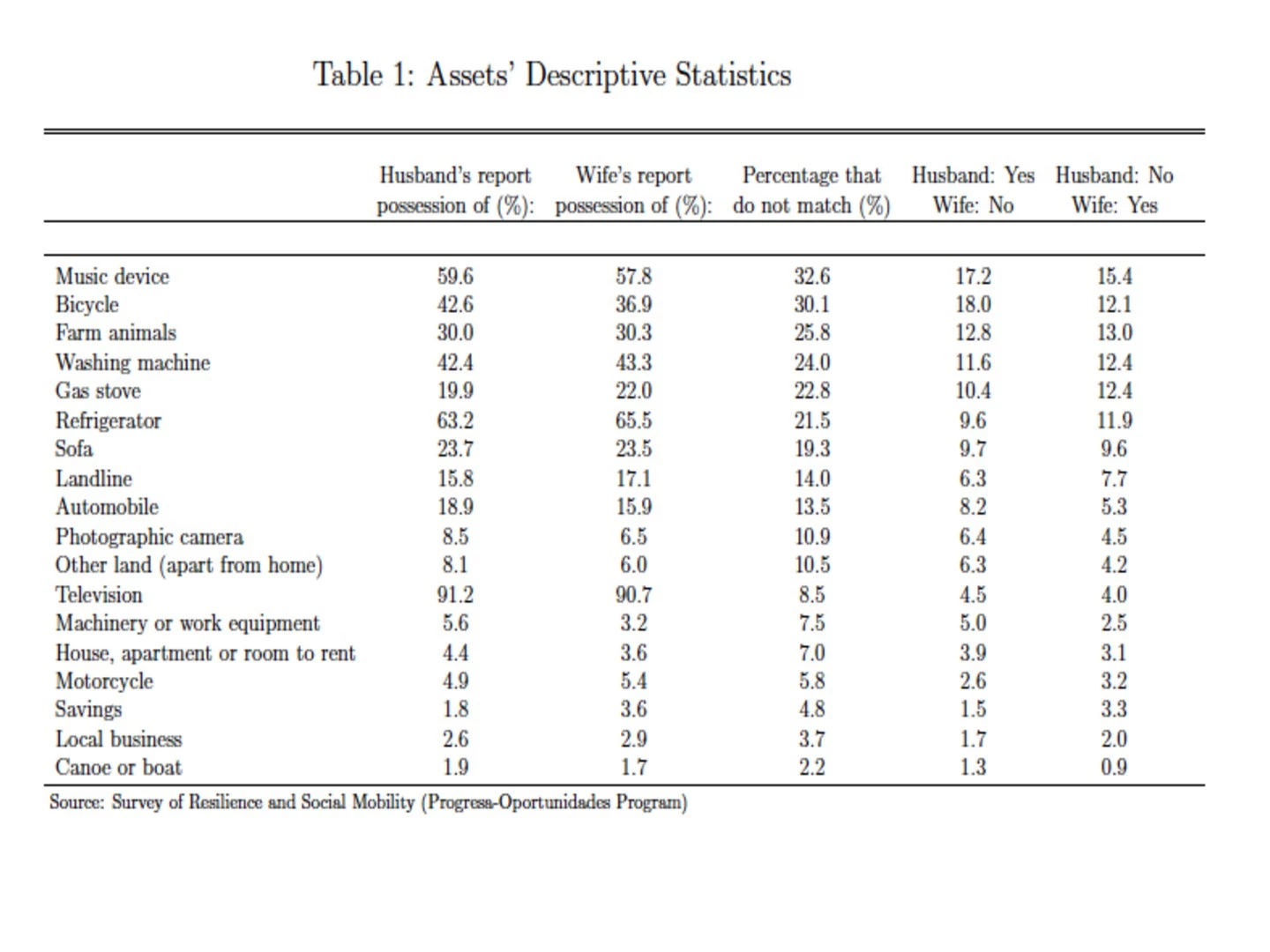

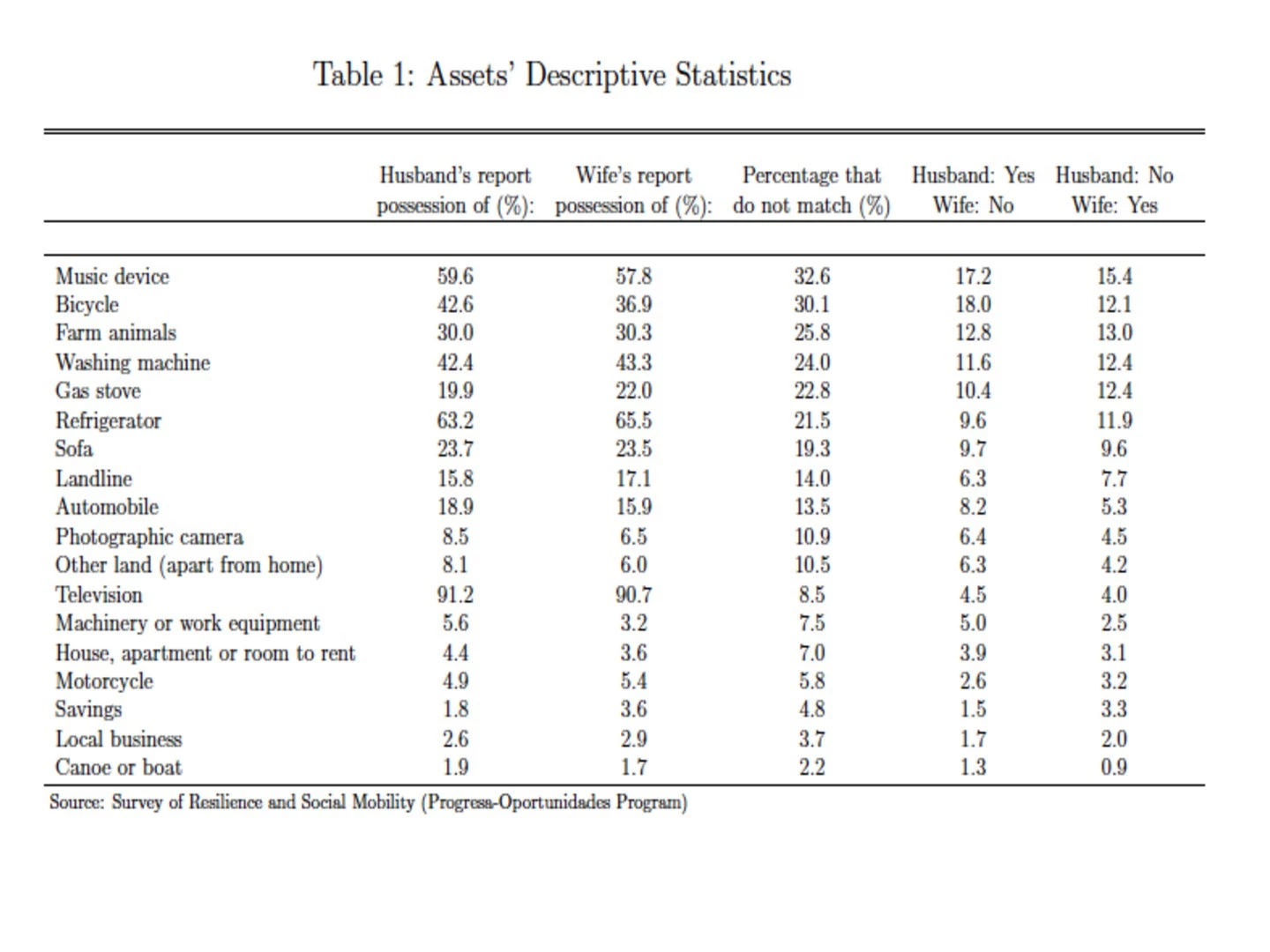

How reliable is the information on assets? My job market paper sheds light on this question. I use a survey administered to couples participating in Mexico’s PROGRESA program (currently PROSPERA). The survey has four modules, in the following order: (1) cognitive test; (2) psychological tests; (3) socioeconomic aspects; and (4) questions about childhood. The survey asks the wife and the husband, separately, whether the household owns each of 18 assets. The 18 assets are presented in Table 1. Columns 1 and 2 show the percentage of households that report owning each asset: Column 1 uses the husbands' responses and Column 2 the wives'. At first glance, the results are quite encouraging. There are no significant discrepancies between the aggregate shares reported by husbands and by wives. For example, 63.2% of husbands and 65.5% of wives reported having a refrigerator in the home.

Yet Column 3 tells a different story. It shows the percentage of couples in which the two spouses gave different answers about the same asset. Across the 18 assets, disagreement ranges from 2.2% to 32.6%. For example, spouses disagree about owning a refrigerator in 21.5% of couples. In 9.6% of households the husband reported having a refrigerator and the wife did not (Column 4). Conversely, in 11.9% of households the husband reported not having a refrigerator while the wife reported having one (Column 5).
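To see how similar aggregate shares can coexist with substantial couple-level disagreement, here is a minimal sketch that computes the quantities in Columns 1 to 5 from paired yes/no answers. The data are made up for illustration (they are not the PROGRESA survey), and the function name `disagreement_summary` is hypothetical.

```python
def disagreement_summary(husband, wife):
    """Summarise paired yes/no reports (1 = owns, 0 = does not) for one asset."""
    if len(husband) != len(wife) or not husband:
        raise ValueError("need two non-empty lists of equal length")
    n = len(husband)
    # Couples where only the husband reports owning the asset (Column 4 analogue).
    h_yes_w_no = sum(1 for h, w in zip(husband, wife) if h == 1 and w == 0)
    # Couples where only the wife reports owning the asset (Column 5 analogue).
    h_no_w_yes = sum(1 for h, w in zip(husband, wife) if h == 0 and w == 1)
    return {
        "husband_share": sum(husband) / n,               # Column 1 analogue
        "wife_share": sum(wife) / n,                     # Column 2 analogue
        "husband_yes_wife_no": h_yes_w_no / n,
        "husband_no_wife_yes": h_no_w_yes / n,
        "disagreement": (h_yes_w_no + h_no_w_yes) / n,   # Column 3 analogue
    }


# Illustrative answers for 10 couples (made-up data).
husband = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]
wife = [1, 1, 1, 1, 0, 0, 1, 1, 0, 0]

summary = disagreement_summary(husband, wife)
for name, value in summary.items():
    print(f"{name}: {value:.1%}")
```

In this toy example both spouses report the asset in 60% of households, yet 40% of couples disagree, because the two kinds of disagreement cancel out in the aggregate. The refrigerator figures above follow the same accounting: 9.6% + 11.9% = 21.5%, and the gap between the wives' and husbands' shares (65.5% versus 63.2%) matches the difference between the two kinds of disagreement (11.9% versus 9.6%).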

Is there a pattern that explains the disagreement in the information on assets reported by the spouses? There are many hypotheses about the causes of misreporting in household surveys. Misreporting can be related to cognitive processes, social desirability, survey design, intra-household strategic behavior, and incentives (Bound et al. 2001). In this paper, I pay particular attention to cognitive processes, since responding to a survey requires cognitive effort. To analyze this hypothesis, I use the number of unanswered questions in the first module of the survey (the cognitive test). The idea is that the number of questions left unanswered may predict the individual's accuracy throughout the rest of the survey. The results show that the number of non-responses in the cognitive test by men (but not by women) predicts disagreement in the information reported on assets. It is possible that this result is a consequence of omitted variables. However, applying the procedures proposed by Altonji, Elder, and Taber (2005) and Oster (2017), I find that the observed relation is unlikely to be driven by unobservables.

Does disagreement in the information reported on assets affect the identification of families living in poverty? To answer this question, I use the Progress Out of Poverty Index (PPI) managed by Innovations for Poverty Action. This index uses 11 socioeconomic variables to identify families living in poverty. Among these variables are questions about assets, such as having a washing machine or a car. The results show that who answers the survey matters for poverty measurement. For example, 10.1% of households would be classified as non-poor if the husband answered the survey, but as poor if the wife answered. Conversely, 8.1% of households would be classified as poor based on the husband's answers, but as non-poor based on the wife's.
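The mechanics behind this kind of reclassification can be sketched as follows, assuming a scorecard-style index that adds points for each answer and classifies households scoring below a cutoff as poor. The point values, the cutoff, the asset list, and the example households are all hypothetical; this is not the actual PPI scorecard or the survey data.

```python
# Hypothetical points per owned asset; NOT the actual PPI scorecard.
POINTS = {"washing_machine": 8, "car": 12, "refrigerator": 6}
CUTOFF = 15  # hypothetical: scores below the cutoff are classified as poor


def classify(report):
    """Classify a household from one respondent's asset report."""
    score = sum(POINTS[asset] for asset, owns in report.items() if owns)
    return "poor" if score < CUTOFF else "non-poor"


def cross_tab(couples):
    """Count households by (husband-based, wife-based) classification."""
    counts = {}
    for husband_report, wife_report in couples:
        key = (classify(husband_report), classify(wife_report))
        counts[key] = counts.get(key, 0) + 1
    return counts


def report(washing_machine, car, refrigerator):
    """Build one respondent's asset report (helper for the example)."""
    return {
        "washing_machine": washing_machine,
        "car": car,
        "refrigerator": refrigerator,
    }


# Made-up couples: (husband's report, wife's report).
couples = [
    (report(True, True, False), report(True, False, False)),  # spouses disagree on the car
    (report(False, False, True), report(True, True, True)),   # disagree on two assets
    (report(True, False, True), report(True, False, True)),   # agree, low score
    (report(True, True, True), report(True, True, True)),     # agree, high score
]

counts = cross_tab(couples)
for (by_husband, by_wife), n in sorted(counts.items()):
    print(f"husband: {by_husband:8s} wife: {by_wife:8s} households: {n}")
```

The off-diagonal cells of this cross-tabulation correspond to households whose poverty status depends on which spouse answers the survey.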

What can we learn from these results? First, information on assets is not free of misreporting. Second, non-responses carry valuable information: I find that unanswered questions in a cognitive test predict disagreement in the information reported later on assets. Finally, different people in the same household can provide significantly and meaningfully different answers. As pointed out by Philipson and Malani (1999), economists pay much more attention to the consumption of data than to the production of data. Rather than accepting information from household surveys as truth, practitioners should think about mechanisms to incentivize individuals to reveal accurate information, or about other ways to triangulate information.

Adan Silverio-Murillo is the Diversity Academic Post-doctoral Fellow in the School of Public Affairs at American University. He holds a PhD in Applied Economics from the University of Minnesota. You can read about his research here.

