The increased use of randomized experiments in development economics has its enthusiastic champions and its vociferous critics. However, much of the argument seems to be battling against straw men, with at times an unwillingness to concede that the other side has a point. During our surveys of assistant professors and Ph.D. students in development (see here for full details of the samples), we asked for views on some of the key methodological debates. The answers reveal, for the most part, a large amount of sanity and moderation:

Are experiments special?

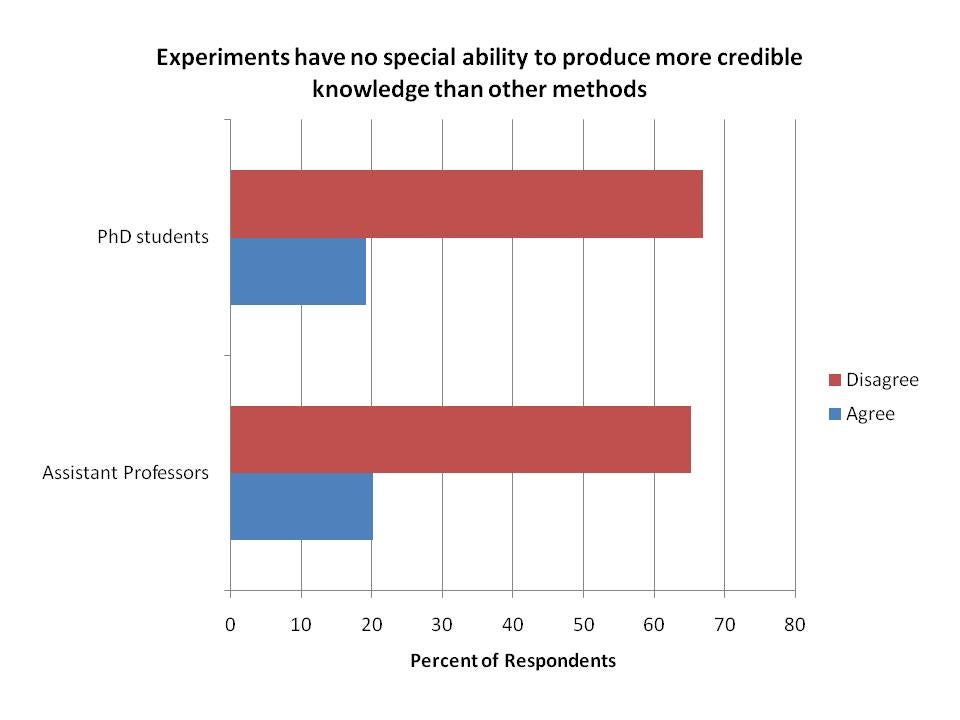

Angus Deaton argues that “experiments have no special ability to produce more credible knowledge than other methods”, a statement that seems pure hyperbole to us – as Guido Imbens notes in his reply to Deaton, it is hard to think of a situation where a researcher has the opportunity to randomize, but decides it will be more credible not to. Most respondents also disagree with Deaton:

Note: the category not shown is “neither agree nor disagree”.

But people do worry about methodology driving the questions being answered

Another critique of the increasing use of randomized experiments is a concern that “far too often it is not the question that is driving the research agenda but a preference for certain types of data or certain methods” (p 12 in this paper by Martin Ravallion). As the respondents show, it is possible both to believe that randomization has a special ability to provide credible evidence and to worry, like Martin, that methods are driving the questions researchers answer.

What about other methods?

Another concern sometimes expressed by researchers is that there is less appreciation for other methods that are not experimental. Propensity-score matching is one common non-experimental approach for impact evaluation that relies on selection on observables. Is this automatically a non-starter? Most people aren’t going to rule the paper out for the method alone.

Structural models haven’t made as many inroads into development economics as in some other fields. Assistant professors in particular seem dubious about whether the estimates from such methods are reliable.

Finally, even cross-country regressions get some love – few people agree that we’ve learnt nothing from them:

Most people aren’t ideologues

Our reading of this evidence is that most people aren’t ideologues – they appreciate the benefits of randomized experiments but also worry about methodology driving questions. They appreciate that we have learned and are continuing to learn something from other methods. So while arguing against straw men makes for nice rhetoric, it doesn’t reflect the reality of most people’s beliefs.
