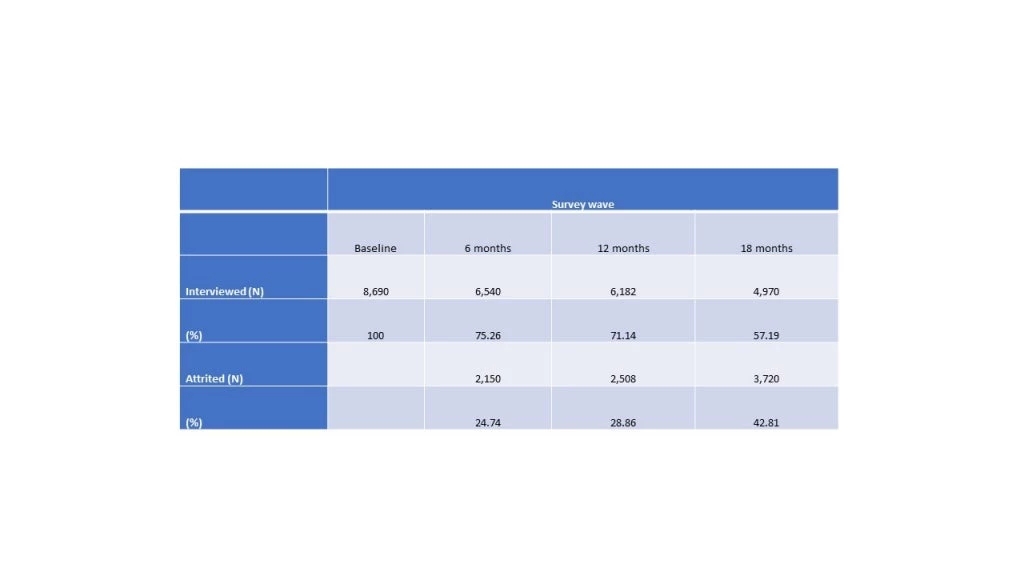

In one of my studies, where the primary outcome is binary, I was looking at one of our main impact tables: we were pleased to see that one of the treatments had a large effect, but puzzled by the wide confidence intervals. Then I remembered that the variance of an estimated proportion, p(1 − p)/n, is maximized at p = 0.5. An increase in the success rate from 0.10 in control to 0.35 in treatment was great, but it came with this little downside: by moving the outcome closer to 0.5, the treatment also made it noisier. This got me thinking: should we have assigned more units to the treatment arm?
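A quick worked check of the variance claim, using the two success rates from this study:

$$
\operatorname{Var}(\hat p) = \frac{p(1-p)}{n}, \qquad \frac{d}{dp}\,p(1-p) = 1 - 2p = 0 \;\Rightarrow\; p = 0.5
$$

$$
p_C = 0.10:\; p_C(1-p_C) = 0.09, \qquad p_T = 0.35:\; p_T(1-p_T) = 0.2275, \qquad p = 0.5:\; p(1-p) = 0.25
$$

So, per observation, the treatment-arm proportion is roughly 2.5 times as variable as the control-arm proportion.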

There is the obvious issue that, with a novel intervention, you might have had no idea that this would happen. So, under the null, it might make sense to assume equal variances and set the sample size ratio (N2/N1) equal to one. But let’s set that aside for a moment and return to it at the end. Suppose you did know ex ante and wanted to act on it. When the outcome is continuous and you want to power a test for the difference of two means, this is straightforward: you allocate units to the two arms in proportion to the standard deviations of the outcome in each group. Here is a quick example:
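The proportional-to-SD rule (often called Neyman allocation) follows from minimizing the variance of the difference in means for a fixed total sample size N, treating the arm sizes n_C and n_T as continuous:

$$
\min_{n_C,\,n_T}\; \frac{\sigma_C^2}{n_C} + \frac{\sigma_T^2}{n_T} \quad \text{s.t.} \quad n_C + n_T = N
$$

The first-order conditions give σ_C²/n_C² = σ_T²/n_T² (both equal to the Lagrange multiplier), so

$$
\frac{n_T}{n_C} = \frac{\sigma_T}{\sigma_C}, \qquad n_T = N\,\frac{\sigma_T}{\sigma_C + \sigma_T}, \qquad \operatorname{Var}_{\min} = \frac{(\sigma_C + \sigma_T)^2}{N}
$$

Equal allocation gives 2(σ_C² + σ_T²)/N instead, and since 2(σ_C² + σ_T²) − (σ_C + σ_T)² = (σ_C − σ_T)² ≥ 0, it is optimal only when the two SDs are equal.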

Suppose that you expect a (mean, SD) of (500, 750) in the control group. You’re confident that the SD in your treatment arm will be twice as high (1,500), and you want to detect an effect of 0.2 control SDs, or 150. With 2,000 observations divided equally, you will have 0.80 power with alpha = 0.05. But with N2/N1 = 2, i.e. by allocating 667 units to C and 1,333 to T, you could increase your power to 0.85. Alternatively, you could hold power constant and reduce the detectable difference or the sample size. Stata’s ‘power’ command shows what would happen for allocation ratios from 1 to 5 (see table below): you’re always better off with ratios above 1 and below 4:
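A rough check of these numbers is below. It is a sketch that uses a normal approximation to the two-sided test rather than whatever exact method Stata’s ‘power’ uses, so results may differ slightly in the third decimal; `power_two_means` is a hypothetical helper, not a Stata or library function.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def power_two_means(delta, sd_c, sd_t, n_total, ratio, alpha=0.05):
    """Approximate power of a two-sided z-test for a difference in means
    with unequal variances, where ratio = n_T / n_C (i.e. N2/N1).
    Arm sizes are treated as continuous (no rounding to whole units)."""
    n_c = n_total / (1 + ratio)
    n_t = n_total - n_c
    se = sqrt(sd_c**2 / n_c + sd_t**2 / n_t)  # unpooled SE of the difference
    z_crit = Z.inv_cdf(1 - alpha / 2)
    # Probability of rejecting in either tail under the alternative
    return Z.cdf(delta / se - z_crit) + Z.cdf(-delta / se - z_crit)

for r in range(1, 6):
    p = power_two_means(delta=150, sd_c=750, sd_t=1500, n_total=2000, ratio=r)
    print(f"N2/N1 = {r}: power = {p:.3f}")
```

With r = N2/N1, the variance of the difference is proportional to (1 + r)(σ_C² + σ_T²/r). For σ_T = 2σ_C this is smallest at r = σ_T/σ_C = 2 and takes the same value at r = 1 and r = 4, which is why ratios strictly between 1 and 4 beat equal allocation.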

Figure 1: power calculations with a continuous outcome and treatment changing the variance as well as the mean

This much is fine: Stata’s commands, along with another common software package I tried, handle this case correctly. Interestingly, they never prompt the researcher to deviate from the default ratio of N2/N1 = 1, which may be why we don’t see as many studies with unequal sample sizes as we otherwise might; but the researcher does have the option to set the ratio optimally.

Going back to our own example, my intuition was that this logic should hold for binary outcomes as well. But when I ran ‘power twoprop’ in Stata (and the equivalent in one other software package) and varied the N2/N1 ratio away from unity, both indicated a power loss rather than a gain. This puzzled me, so I calculated the (unpooled) standard errors by hand for equal allocation to C and T vs. allocation proportional to the SDs. In my case, I think the standard error would be minimized by allocating about 61% of the sample to T. If you had 80 subjects and allocated them equally across T and C, your SE would be 0.0891. Allocating 49 to T and 31 to C would reduce it to 0.0869. With an effect size of 0.25, these translate to Z-statistics of 2.81 vs. 2.88. These are small differences that might matter only at the margin, but the direction is right: you’d gain a little power from deviating from equal allocation, whereas the commonly used power calculation tools indicate that you’d lose power:
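The hand calculation can be reproduced in a few lines. This sketch uses the unpooled (Wald) standard error, as in the text; `unpooled_se` is just an illustrative helper.

```python
from math import sqrt

def unpooled_se(p_c, p_t, n_c, n_t):
    """Unpooled SE of the difference between two sample proportions."""
    return sqrt(p_c * (1 - p_c) / n_c + p_t * (1 - p_t) / n_t)

p_c, p_t, n_total = 0.10, 0.35, 80
effect = p_t - p_c  # 0.25

# Neyman share for T: proportional to the Bernoulli SDs sqrt(p(1 - p))
sd_c, sd_t = sqrt(p_c * (1 - p_c)), sqrt(p_t * (1 - p_t))
share_t = sd_t / (sd_c + sd_t)
print(f"share of sample to T: {share_t:.2f}")

for n_t in (40, 49):
    n_c = n_total - n_t
    se = unpooled_se(p_c, p_t, n_c, n_t)
    print(f"T = {n_t}, C = {n_c}: SE = {se:.4f}, z = {effect / se:.2f}")
```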

Figure 2: Power calculations in Stata with a binary outcome

As I have not looked under the hood of these commands, it’s certainly possible that I am doing something wrong and they are correct. Or they may be doing something different, such as using pooled proportions and SEs for binary outcomes, and this may cause the small difference. While the differences seem too small to matter for most studies, I can see them mattering at the margin: for rare outcomes, where subjects are hard to recruit and studying them is expensive, the allocation of every subject might matter. Perhaps more so in the biomedical sciences than in econ or political science…
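One way to probe the pooled-proportions hypothesis is to compare two textbook normal approximations to power for the 80-subject example. This is a sketch under the assumption that the tools power a pooled-variance test (score / Pearson chi-squared style); I have not verified that this is what they actually do, and both helper names are hypothetical. Each function keeps only the rejection tail in the direction of the true effect, since the other tail is negligible here.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution

def power_unpooled(p_c, p_t, n_c, n_t, alpha=0.05):
    """Wald-test approximation: unpooled SE under both H0 and H1."""
    se1 = sqrt(p_c * (1 - p_c) / n_c + p_t * (1 - p_t) / n_t)
    return Z.cdf(abs(p_t - p_c) / se1 - Z.inv_cdf(1 - alpha / 2))

def power_pooled(p_c, p_t, n_c, n_t, alpha=0.05):
    """Pooled-null approximation: the critical value uses the pooled SE
    under H0, while the spread of the estimate uses the unpooled SE under H1."""
    p_bar = (n_c * p_c + n_t * p_t) / (n_c + n_t)  # pooled proportion
    se0 = sqrt(p_bar * (1 - p_bar) * (1 / n_c + 1 / n_t))
    se1 = sqrt(p_c * (1 - p_c) / n_c + p_t * (1 - p_t) / n_t)
    z_crit = Z.inv_cdf(1 - alpha / 2)
    return Z.cdf((abs(p_t - p_c) - z_crit * se0) / se1)

for n_c, n_t in ((40, 40), (31, 49)):
    print(f"C = {n_c}, T = {n_t}: "
          f"unpooled {power_unpooled(0.10, 0.35, n_c, n_t):.3f}, "
          f"pooled {power_pooled(0.10, 0.35, n_c, n_t):.3f}")
```

In this sketch, the unpooled approximation gains power from the 31/49 split while the pooled one loses it: shifting units to T pulls the pooled proportion toward 0.5 and inflates 1/n_C + 1/n_T, which is smallest at equal allocation, so the null critical value rises faster than the alternative SE falls.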

So, if you think you know the answer, please let us know.

Now, back to the question of whether you should deviate from an assumption of equal variances in the first place. Suppose that your outcome of interest is continuous, but you have a novel intervention. You suspect that the variance may be higher under treatment but are not sure. This is where adaptive experimentation can come in handy (see here and here for two examples): you can pilot your experiment to get a sense of these parameters, then reassign units accordingly going forward. You can do this adaptively, keeping a live estimate of the (conditional) variance of the outcome and taking it into account when assigning units in each new batch. In Section 2.2 of this 2008 paper, Mark van der Laan discusses the assignment of subjects to treatments according to this so-called “Neyman Allocation” conditional on observables, and generalizes it to experiments with more than two arms.
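A minimal sketch of the batch-wise idea, assuming a continuous outcome and unconditional (not covariate-conditional) variances: the pilot batch is split equally, and each later batch is split in proportion to the SDs estimated from all data so far. The normal outcome models and their parameters are hypothetical stand-ins borrowed from the earlier example, and `neyman_share` and `run_adaptive` are illustrative names, not functions from any package.

```python
import random
from statistics import stdev

def neyman_share(y_c, y_t):
    """Fraction of the next batch to send to T: proportional to the
    estimated outcome SDs in each arm (Neyman allocation)."""
    s_c, s_t = stdev(y_c), stdev(y_t)
    return s_t / (s_c + s_t)

def run_adaptive(n_batches=10, batch_size=200, seed=1,
                 mean_c=500, sd_c=750, mean_t=650, sd_t=1500):
    """Simulate batch-wise adaptive allocation. The normal outcome
    models are hypothetical stand-ins for real data."""
    rng = random.Random(seed)
    y_c, y_t = [], []
    share_t = 0.5  # pilot batch: equal allocation
    n_t_per_batch = []
    for _ in range(n_batches):
        n_t = round(batch_size * share_t)
        y_t += [rng.gauss(mean_t, sd_t) for _ in range(n_t)]
        y_c += [rng.gauss(mean_c, sd_c) for _ in range(batch_size - n_t)]
        n_t_per_batch.append(n_t)
        # Re-estimate both SDs from everything observed so far
        share_t = neyman_share(y_c, y_t)
    return y_c, y_t, n_t_per_batch

y_c, y_t, n_t_per_batch = run_adaptive()
print("units assigned to T in each batch:", n_t_per_batch)
```

With these hypothetical parameters, the share assigned to T moves from 0.5 toward σ_T/(σ_C + σ_T) = 2/3 after the pilot. Conditioning the allocation on observables, as in van der Laan’s setup, is not covered by this sketch.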

I thank Susan Athey and Vitor Hadad for pointing me to the van der Laan paper – without implicating them for anything in this blog post.
