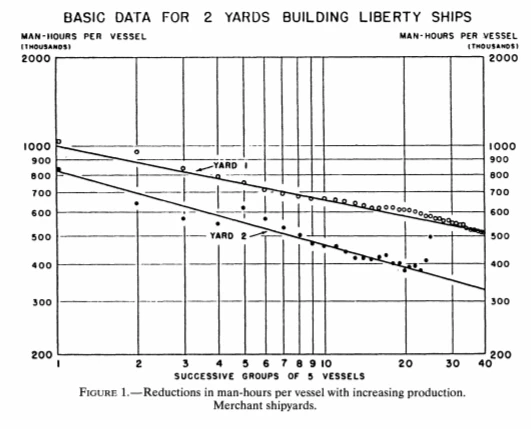

Everyone who has struggled through a PhD class in macro has probably read the famous Lucas (1993) Econometrica paper “Making a Miracle”, which asks what happened that allowed East Asian countries like Korea to dramatically increase productivity. His answer is on-the-job human capital accumulation, or learning by doing. As an example of this phenomenon, he reprints the figure below (Figure 1) from Allan Searle’s 1945 analysis of U.S. ship-building during World War II – where the time taken to produce the exact same ship falls with experience, so that doubling cumulative output reduces the hours needed to build a ship by 12 to 24%. In this short post I want to look at whether a similar learning-by-doing effect occurs with survey enumerators.

Figure 1: Learning by Doing in U.S. Ship-Building
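To make the "12 to 24% per doubling" figure concrete, here is a minimal sketch that assumes the standard power-law learning curve, hours(N) = a * N^(-b), where N is cumulative output. The functional form, the function names, and the normalisation of the first ship to an index of 100 are my assumptions for illustration; they are not taken from Searle's data.

```python
import math


def learning_exponent(reduction_per_doubling: float) -> float:
    """Exponent b in hours(N) = a * N**(-b), given the fractional fall in
    hours each time cumulative output N doubles (e.g. 0.12 for 12%)."""
    return -math.log2(1.0 - reduction_per_doubling)


def hours_for_unit(first_unit_hours: float, cumulative_units: float, b: float) -> float:
    """Hours needed per unit once cumulative output reaches cumulative_units."""
    return first_unit_hours * cumulative_units ** (-b)


for reduction in (0.12, 0.24):
    b = learning_exponent(reduction)
    # The first ship is indexed at 100 hours: an arbitrary normalisation, not data.
    path = [round(hours_for_unit(100.0, n, b), 1) for n in (1, 2, 4, 8)]
    print(f"{reduction:.0%} per doubling: b = {b:.3f}, hours index at 1, 2, 4, 8 ships = {path}")
```

Under this assumed form, each doubling of cumulative output multiplies the hours required by the same factor (0.88 or 0.76), so the gains are large early on and flatten as experience accumulates.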

Learning by Doing and Phone Surveys

Like many researchers who have recently had to conduct their follow-up surveys by phone instead of in person, one of the big challenges facing my co-authors and me has been the time constraint imposed by a phone survey. A follow-up survey in person can often last one hour (or even more in some cases), whereas much shorter surveys are recommended for phone: the World Bank Practical Guide for Mobile Phone surveys recommends (p. 59) that surveys be 15-30 minutes in length; J-PAL’s best practices for conducting phone surveys recommends keeping surveys to at most 30 minutes; and IPA’s remote surveying in a pandemic handbook recommends trying to stay under 20 minutes, with a max of 30 minutes.

Given this, when our first phone pilots for a follow-up survey of a project in Ecuador (I previously blogged about a separate rapid time-use survey conducted on a subsample) clocked in at an average of 59 minutes, we knew big changes needed to be made.

Our survey is conducted by Logika, with help from IPA Peru. We were targeting a follow-up sample of around 7,000 students. Given this large sample, after cutting some questions, one solution we employed was to randomize questions to subsets of the sample. The intervention here was one in which treated students had taken a course in personal initiative and negotiations behavior, and we wanted to measure both knowledge and mechanisms. We randomized so that half the sample got a longer knowledge test (long form survey), while the other half got more detailed mechanism questions (short form survey). This got us much closer to an average of 30 minutes, but, based on piloting, this still seemed on the long side, especially for the long form survey.
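As a rough illustration of this kind of split, here is a minimal sketch of assigning half of a sample to each form. The function name, the seed, and the use of sequential integer IDs are hypothetical choices for the example, not a description of how the assignment was actually implemented.

```python
import random


def assign_forms(student_ids, seed=12345):
    """Randomly assign half of the IDs to the long form and half to the short form.

    The seed is fixed so that the assignment can be reproduced later.
    """
    rng = random.Random(seed)
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # The first half of the shuffled list gets the long form, the rest the short form.
    return {sid: ("long" if position < half else "short")
            for position, sid in enumerate(shuffled)}


# Hypothetical sample of 7,000 sequential IDs.
forms = assign_forms(range(7000))
n_long = sum(1 for form in forms.values() if form == "long")
print(n_long, len(forms) - n_long)
```

Shuffling and then cutting the list in half guarantees an exact 50/50 split, which an independent coin flip per student would not.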

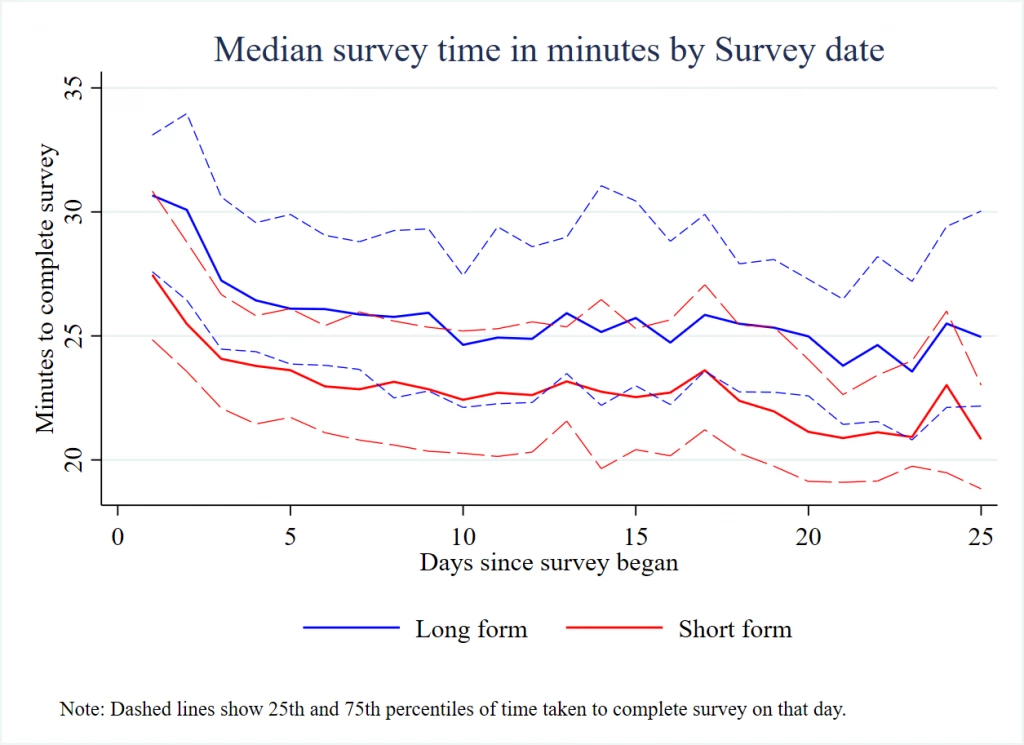

This is where the tie-in with learning-by-doing comes in. Based on previous survey experiences, the hope was that once enumerators started surveying, they would get better at it, and survey times would fall. Figure 2 shows that this is exactly what happens over the first 25 days of surveying (in which 6,680 interviews were completed, an average of 267 per day). The median time for the long form survey was 31 minutes on the first day, fell to 26 minutes by day 5, and then settled at around 25 minutes from day 10 onwards. That is, the time taken per interview falls by about 16 percent over the first week of the survey. The same holds for the short form survey, with the median time taken falling from 27 minutes on day 1 to 22 minutes by day 10.

Figure 2: Learning by Doing in Ecuadorian Phone Survey
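The arithmetic behind these reductions can be checked with a short sketch using the medians quoted in this post. The function names are mine, and the day 10 long form value uses the "around 25 minutes" figure, so that number is approximate.

```python
def pct_reduction(start_minutes: float, end_minutes: float) -> float:
    """Percentage fall in interview time between two days."""
    return 100.0 * (start_minutes - end_minutes) / start_minutes


def pct_more_interviews_per_hour(start_minutes: float, end_minutes: float) -> float:
    """Percentage rise in interviews completed per hour of interviewing."""
    return 100.0 * (start_minutes / end_minutes - 1.0)


# Median interview length in minutes by survey day, as reported in the text.
medians = {
    "long form": {1: 31, 5: 26, 10: 25},
    "short form": {1: 27, 10: 22},
}

for form, by_day in medians.items():
    base = by_day[1]
    for day, minutes in by_day.items():
        if day == 1:
            continue
        print(f"{form}, day {day}: time down {pct_reduction(base, minutes):.1f}%, "
              f"interviews per hour up {pct_more_interviews_per_hour(base, minutes):.1f}%")
```

The two measures differ because a given fall in minutes per interview translates into a somewhat larger rise in interviews per hour.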

Now this does not just reflect learning by doing on the part of enumerators: it also reflects a few enumerators dropping out or being let go for poor quality/productivity, and new replacements coming in. But even if I control for enumerator fixed effects, the survey date effects show improvements of around 4-5 minutes after 5 to 10 days. Note that we also randomized the order in which our respondents were to be called, so these effects also do not come from changes in who is getting interviewed over time (I stop the graph at 25 days, since after that we were doing more callbacks and interviewing harder-to-reach respondents).
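To see why enumerator turnover matters, here is a toy sketch with made-up numbers (not the survey data). It compares the change in the pooled daily average with the average change for enumerators observed on both days. With one observation per enumerator per day and only two days, this within-enumerator change is what a regression with enumerator fixed effects would recover; the regression actually run for this post is not reproduced here.

```python
from statistics import mean

# Made-up enumerator-day median times in minutes (illustrative only).
# Enumerator "C" is slow and leaves after day 1; "D" joins as a replacement.
times = {
    "A": {1: 30, 5: 26},
    "B": {1: 28, 5: 24},
    "C": {1: 38},
    "D": {5: 25},
}


def pooled_change(times, day_a, day_b):
    """Change in the average across whoever happened to work on each day."""
    def day_average(day):
        return mean(t[day] for t in times.values() if day in t)
    return day_average(day_b) - day_average(day_a)


def within_change(times, day_a, day_b):
    """Average change for enumerators who worked on both days."""
    return mean(t[day_b] - t[day_a]
                for t in times.values()
                if day_a in t and day_b in t)


print("pooled change:", pooled_change(times, 1, 5))
print("within-enumerator change:", within_change(times, 1, 5))
```

In this toy example the pooled average overstates the improvement, because part of the fall comes from a slow enumerator being replaced rather than from anyone getting faster.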

Now of course faster may not be better if quality falls as a result. Indeed, with the famous shipbuilding example, Thompson (2001) showed that the quality of the ships built, as measured by the fracture rate, declined as production speed increased. But with good survey supervision, quality can also improve over time as enumerators get suggestions on how to improve, and the lowest quality ones get replaced. This is what happened in our data: IPA had quality checks based on auditors listening to the audio of interviews, and scored interviews out of 5 on the quality of the complete survey. The average score on the first five days of backchecks was 4.22; this then increased to 4.38 for days 6 through 10, and 4.45 for days 11+.

I have had informal conversations in the past about these issues with survey firms when negotiating over survey length, but have not had quantitative estimates to point to of how much of an improvement in time we might expect. So hopefully sharing this experience helps my future self and others in planning/deciding on survey length.
