There isn’t much cost analysis in impact evaluations. In McEwan’s review of 77 randomized experiments in education, he found that “56% of treatments reported no details on incremental costs, while most of the rest reported minimal details.”

At the same time, there are calls for more of this work. As Dhaliwal et al. of J-PAL highlight, cost-effectiveness analysis helps policymakers to weigh the relative benefits of different programs. “One way to encourage policymakers to use the scientific evidence from these rigorous evaluations in their decision making is to present evidence in the form of a cost-effectiveness analysis, which compares the impacts and costs of various programs run in different countries and years that aimed at achieving the same objective.” Recently, Strand & Gaarder blogged about how cost-benefit analysis is key to “getting the most benefit from the money and efforts spent by the World Bank, on its projects and other client support.” As they say, “Every dollar badly spent is a life that wasn’t saved; a child that didn’t receive education.”

Cost analysis, coupled with impact analysis, is valuable for policy decisions. So the recent post by Strand and Gaarder left me considering the question: Why don’t more economists do cost analysis? Even closer to home: Why don’t I like to do cost analysis? (And I’ve even written about cost analysis – paper; blog post.)

Briefly, let me clarify what I mean by cost analysis. I’m referring to cost-effectiveness and cost-benefit analysis. Cost-effectiveness (CE) analysis examines the cost-per-benefit of an intervention: For example, how much does it cost to achieve an additional standard deviation of student school participation? Cost-benefit (CB) analysis examines the rate of return of an intervention: For example, what is the present value of lifetime benefits of a program set against the costs? The cost side of the analysis is similar across both.
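To make the distinction concrete, here is a minimal sketch of the two calculations. The function names, the discount rate, and every number are hypothetical and chosen only for illustration; none of them come from the studies cited in this post.

```python
def cost_effectiveness_ratio(cost, impact):
    """CE: cost per unit of impact, e.g. dollars per additional
    standard deviation of school participation."""
    if impact <= 0:
        raise ValueError("the ratio is only meaningful for a positive impact")
    return cost / impact


def present_value(flows, discount_rate):
    """Discount a list of yearly amounts (year 0 first) back to year 0."""
    return sum(x / (1 + discount_rate) ** t for t, x in enumerate(flows))


def net_present_value(benefits, costs, discount_rate):
    """CB: present value of benefits set against present value of costs."""
    return present_value(benefits, discount_rate) - present_value(costs, discount_rate)


# Hypothetical program: $25 per student buys a 0.125 SD gain in participation.
ce = cost_effectiveness_ratio(cost=25.0, impact=0.125)
print(f"Cost per SD of participation: ${ce:.2f}")

# Hypothetical program: $100 spent up front, $60 of benefits in each of the
# next two years, discounted at an assumed 10% per year.
npv = net_present_value(benefits=[0, 60, 60], costs=[100, 0, 0], discount_rate=0.10)
print(f"Net present value: ${npv:.2f}")
```

Both calculations take the same cost figure as an input, which is why the cost side of the work is shared. The difference is on the benefit side: CE leaves the impact in its natural units, while CB has to put a monetary value on it.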

Back to the question: Why don’t economists do it? (Caveats: for the most part; in impact evaluations.)

Reason #1: It doesn’t feel like economics to microeconomists. Or at least, it doesn’t feel that interesting. Microeconomics (where most impact evaluation studies fall) principally concerns itself with decision-making by individuals, households, or firms. So when we have an intervention to provide information about the returns to schooling or to provide agricultural extension classes, we are most interested in the behavior change, or in other words, in the impact of the program. Many multi-arm impact evaluations vary the offered treatment without explicitly or purposely varying the costs to the beneficiaries. So the variation of interest is the variation in behavioral responses.

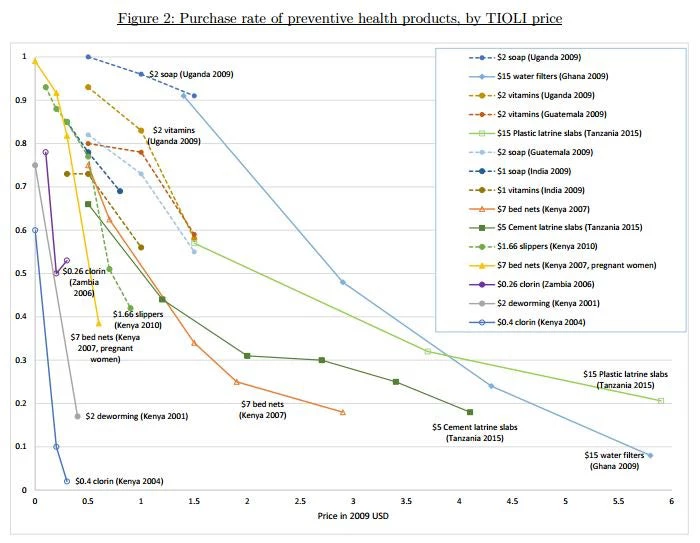

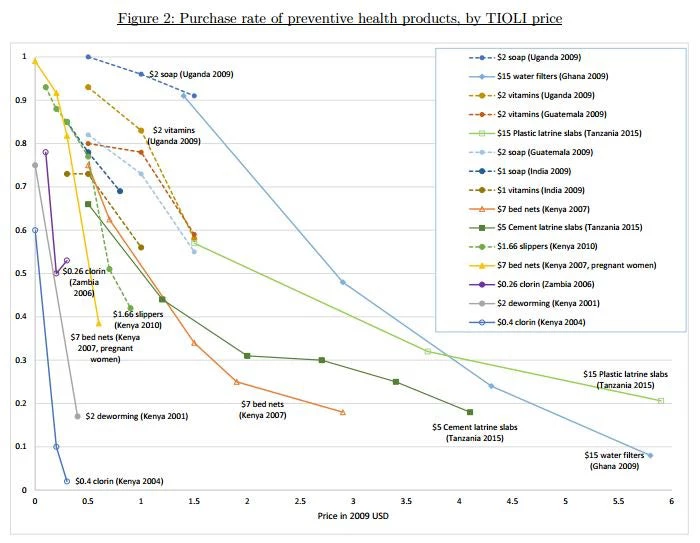

Of course, costs have non-obvious behavioral implications, i.e., there are non-linearities that make variation in costs much more interesting than “people buy less when the price is higher.” Dupas and Miguel show wide variation in the price elasticity of demand for preventive health products, all from studies that vary the cost to participants.

Source: Dupas and Miguel 2016

Good impact evaluations that have explicitly varied costs include Baird et al.’s cash transfer experiment in Malawi, Lee et al.’s experiment randomizing the price of electrical grid connections in Kenya, and Cohen et al.’s experiment varying the size of a subsidy for malaria treatment. The first was published in the Quarterly Journal of Economics; the last in the American Economic Review. (Lee et al. is too new, so we’ll see.) So there are potential publishing returns to interesting cost analysis.

But most evaluations don’t have lots of price variation, so the cost analysis seems less exciting from an economics standpoint, even though it’s still important from a policy standpoint.

Reason #2: It’s not trivial. A common method of CE/CB analysis is the “ingredients method”; Patrick McEwan has a nice introductory paper here. Essentially, you add up all the cost ingredients of a program and then adjust for “price levels, time preference, and currency.” As my kids would say, easy peasy lemon squeezy.

Not always so easy. There can be a lot of ingredients. Across a set of education impact evaluations compiled by J-PAL, Anna Popova and I identified a median of seven ingredients. And – much more difficult – when an organization or government agency is implementing a wide range of programs, it can be very tricky to deal with all the fixed costs. Impact evaluations of World Bank projects, for example, usually evaluate one or two out of several project activities, and separating costs (beyond the most obvious ones) is non-trivial.

Furthermore, in many settings, understanding the opportunity costs of time for implementers and especially for participants requires a wide range of assumptions.

J-PAL has a nice collection of spreadsheets demonstrating what this can look like, here, with more on methodology here. So it’s possible. But it’s not trivial, and after expending months or years on analyzing the behavioral responses, figuring out these costs can seem overwhelming, and the returns can seem low.
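For a sense of the mechanics, here is a rough sketch of the ingredients method as described above: list the ingredients, then adjust for price levels, time preference, and currency. The `Ingredient` class, the `share` field for apportioning shared fixed costs, the order of the adjustments, and all of the numbers are my own illustrative assumptions, not McEwan’s or J-PAL’s actual templates.

```python
from dataclasses import dataclass


@dataclass
class Ingredient:
    name: str
    quantity: float
    unit_price: float   # local currency, in prices of `year`
    year: int           # year the cost is incurred
    share: float = 1.0  # assumed fraction of a shared cost charged to this activity


def adjusted_cost(ing, price_index, base_year, discount_rate, exchange_rate):
    """Cost of one ingredient in base-year US dollars."""
    nominal = ing.quantity * ing.unit_price * ing.share
    # Price levels: restate the cost in base-year prices.
    real = nominal * price_index[base_year] / price_index[ing.year]
    # Time preference: discount later spending back to the base year.
    discounted = real / (1 + discount_rate) ** (ing.year - base_year)
    # Currency: exchange_rate is local currency units per US dollar.
    return discounted / exchange_rate


# Hypothetical inputs, for illustration only.
price_index = {2015: 100.0, 2016: 110.0}
ingredients = [
    Ingredient("teacher training days", quantity=10, unit_price=1000, year=2015),
    Ingredient("textbooks", quantity=110, unit_price=100, year=2016),
    # A fixed cost shared across several project activities; the 25% share
    # is exactly the kind of assumption that is hard to pin down in practice.
    Ingredient("office overhead", quantity=1, unit_price=20000, year=2015, share=0.25),
]

total = sum(
    adjusted_cost(i, price_index, base_year=2015, discount_rate=0.10, exchange_rate=100.0)
    for i in ingredients
)
print(f"Total program cost: ${total:.2f} (base-year USD)")
```

The arithmetic is the easy part. The hard part is filling in the inputs: finding every ingredient, choosing a defensible `share` for each fixed cost, and pricing the time of implementers and participants.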

Reason #3: I don’t have any training in it. I spent two years in coursework for a graduate degree in economics. I don’t think I received a single lecture on cost analysis. A quick search suggests that courses on cost-benefit analysis are much more likely to be taught in public policy programs than in economics doctoral programs (e.g., Harvard’s Kennedy School, University of Chicago’s Harris School of Public Policy, Australian National University’s Crawford School of Public Policy). So economists don’t generally come trained for this.

Last thoughts: Here are some things that I think would make cost analysis happen more often, and better.

- Specialists in cost analysis to complement the efforts of economists who are focused on measuring behavioral changes.

- Very clear tools to guide those who want to complement their impact analysis with cost analysis.

- Incentives for that work, whether through specialized grants, or conditions or top-ups within existing funding for impact evaluation.

- And if you can vary costs within your study population, you may learn a great deal.
