This blog post is co-authored by Cigdem Celik, Facundo Cuevas, and Luca Parisotto, all of whom are with the World Bank.

We are evaluating the effects of cash transfers to refugees in Turkey with colleagues from the World Bank (WB). The Emergency Social Safety Net program (ESSN) provides cash transfers to vulnerable refugees living in Turkey and is implemented by a partnership of Turkey’s Ministry of Family and Social Services, the Turkish Red Crescent (TRC), and the World Food Programme (WFP). The World Bank was asked to lead the impact evaluation of the program. Given the context and operational constraints, the method of choice was propensity score matching. But the identification strategy is not why we’re here…

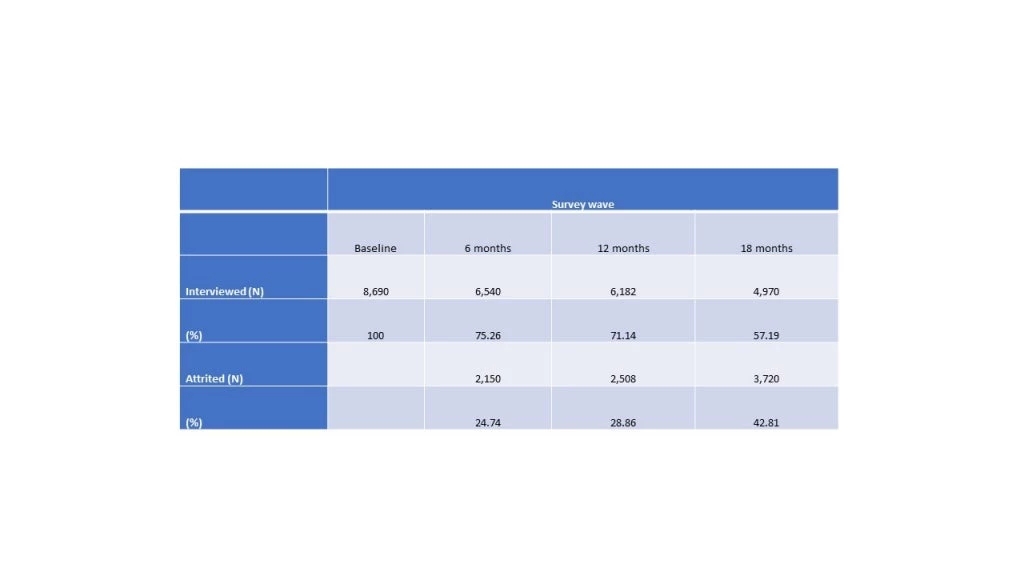

TRC and WFP set up a call center and a phone survey to collect data for the impact evaluation (IE) and for the overall monitoring and evaluation (M&E) of the program. Over the course of 18 months, and at relatively low cost, they collected a large baseline sample of eligible and ineligible applicants and three follow-up panel rounds at six-month intervals. Over time, however, we noticed that attrition was rising alarmingly…

After 18 months, we were not only missing 43% of our respondents, but the loss to follow-up was also differential by treatment status: we were finding more of the treatment group than of the control group (although, among those lost to follow-up, we could not detect many differences in baseline observables between beneficiaries and non-beneficiaries). This began to worry us much more than the identification strategy that had been front and center in our minds until then.

So, what did we do? Nothing earth-shattering, just some common-sense tweaks to the survey protocols to reduce attrition, which we piloted on a sub-sample of 150 households drawn from three locations. Originally, the call center protocol was to make up to three calls to each sampled household (HH) during working hours on weekdays, after which the case was abandoned. Reasoning that the marginal cost of calling people more (over and above the cost of setting up the call center) must be small, we first suggested increasing the number of calls. Second, we proposed varying the timing of calls during the day and adding evenings and weekends.

The detailed protocol we shared with our counterparts is linked here for your reference. For example, we insisted that households be tried at least once in each of four time blocks (morning, early afternoon, late afternoon, and evening), every other day on weekdays, and also on weekends. The evenings and weekends were new additions, as was the explicit discipline of not calling people repeatedly within a short window of time but trying them at different hours of the day, including after dinner. We suggested that the survey team not give up on a household until they had tried it at least once in each time block on both weekdays and weekends, i.e., for about a week and with 15 or more calls, before referring it for further tracking.
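To make the stopping rule concrete, here is a minimal Python sketch of a check a call center could run before referring an unreached household to physical tracking. The hour boundaries of the four time blocks, the function names, and the input format (a list of call timestamps) are hypothetical choices for illustration, not part of the actual protocol, and the every-other-day spacing rule is not checked here.

```python
from datetime import datetime
from typing import List, Optional

# Hypothetical hour ranges for the protocol's four time blocks (local time).
# The protocol names the blocks; these exact cut-offs are an assumption.
TIME_BLOCKS = {
    "morning": range(9, 12),
    "early_afternoon": range(12, 15),
    "late_afternoon": range(15, 18),
    "evening": range(18, 22),
}
MIN_CALLS = 15  # "15 or more calls" before referral to further tracking


def time_block(ts: datetime) -> Optional[str]:
    """Return the time block a call falls in, or None if it is outside all blocks."""
    for name, hours in TIME_BLOCKS.items():
        if ts.hour in hours:
            return name
    return None


def ready_for_tracking(call_times: List[datetime]) -> bool:
    """True if an unreached household may be referred to physical tracking:
    it was tried in every time block on both weekdays and weekends,
    and at least MIN_CALLS calls were made in total."""
    covered = set()
    for ts in call_times:
        block = time_block(ts)
        if block is not None:
            # Saturday and Sunday (weekday() 5 and 6) count as the weekend.
            day_type = "weekend" if ts.weekday() >= 5 else "weekday"
            covered.add((day_type, block))
    required = {(d, b) for d in ("weekday", "weekend") for b in TIME_BLOCKS}
    return required <= covered and len(call_times) >= MIN_CALLS
```

For example, a household called 16 times but only on weekdays would not yet qualify, because the weekend blocks are still uncovered.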

What happened? Really good things – much better than we could have hoped for:

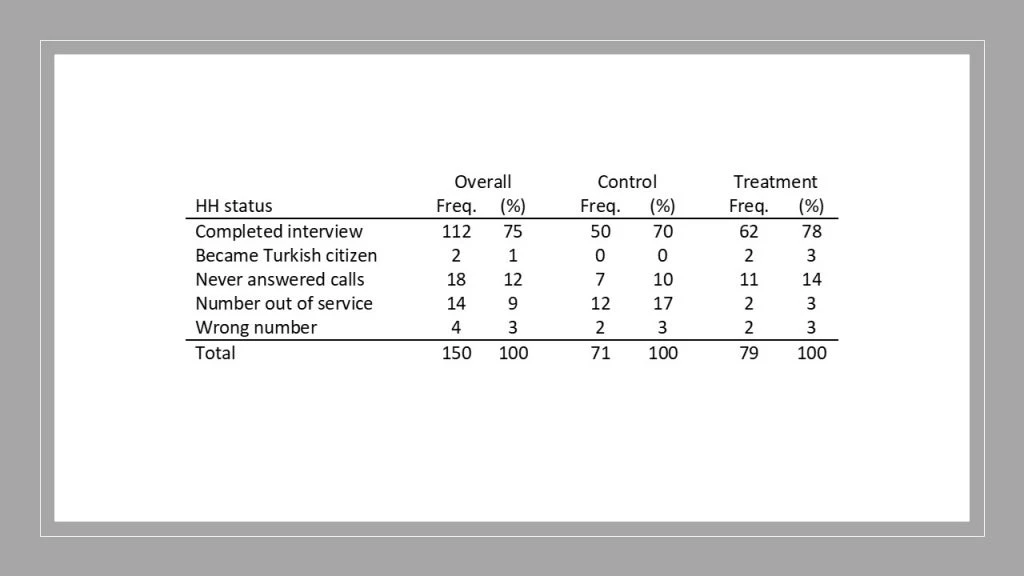

Simply by making more calls at different times of the day, we reached 112 of 150 households, a 75% rate of successful follow-up. That is an 18-percentage-point (pp) increase over the 57% at the 18-month follow-up, a striking reversal of the trend.

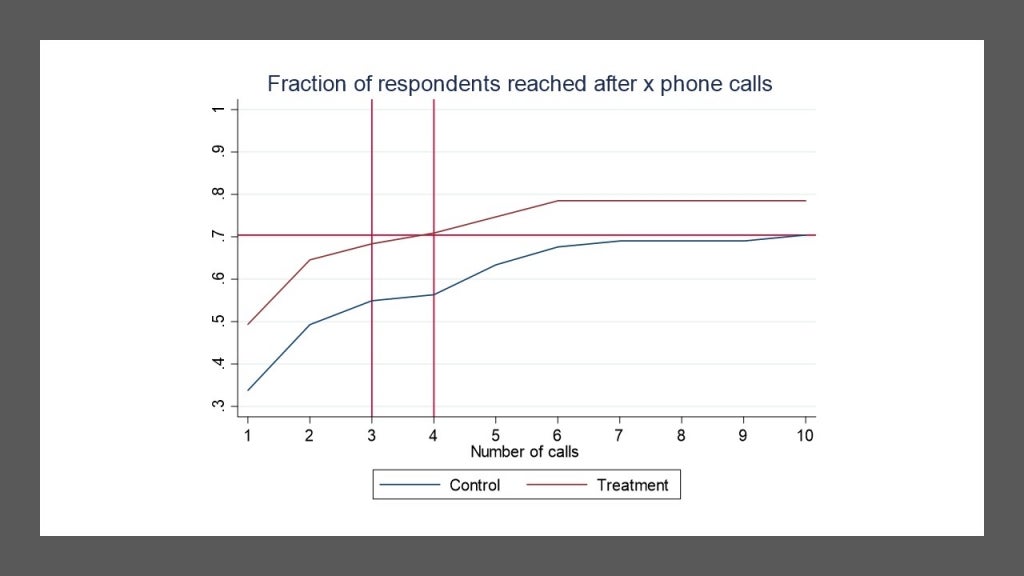

The success rates by treatment status as a function of the number (and order) of calls is also interesting:

As you can see, had we stopped at three calls, we would have had a gap of about 13 pp in the success rate between beneficiaries and non-beneficiaries: 68% for the former vs. 55% for the latter. By 10 calls, however, the gap had narrowed to just 8 pp (78% vs. 70%).

The other interesting thing to note is the jump in success between calls 4 and 5, which is when the first evening call took place: there is a clear payoff to calling people outside working hours. In contrast, there was no similar jump between calls 7 and 8, when the first weekend call took place, suggesting that once all times of day had been tried, adding weekends did not add much value (which may have cost implications). Of course, we do not know whether things would have been the same had the call center tried some households on a weekend first and in the evening later. Because our proposed protocol was not followed exactly to the letter, there was less variation in attempts across households than intended, but we still learned a fair amount and had a good deal of success.
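Curves like the ones in the figure can be built from a simple call log. The sketch below assumes, hypothetically, that for each household we know the attempt number on which it was first reached (or `None` if it was never reached by phone); the function names and the toy data are illustrative and are not the study's data or code.

```python
from typing import List, Optional, Sequence


def cumulative_success(calls_to_reach: Sequence[Optional[int]], max_calls: int) -> List[float]:
    """Share of households reached within k calls, for k = 1..max_calls.

    calls_to_reach[i] is the attempt on which household i was first reached,
    or None if it was never reached by phone."""
    if not calls_to_reach:
        raise ValueError("need at least one household")
    n = len(calls_to_reach)
    return [
        sum(1 for c in calls_to_reach if c is not None and c <= k) / n
        for k in range(1, max_calls + 1)
    ]


def marginal_gain(curve: List[float]) -> List[float]:
    """Increase in the success rate contributed by each additional call."""
    return [curve[0]] + [b - a for a, b in zip(curve, curve[1:])]


# Toy, made-up data: attempt on which each household was first reached.
treated = [1, 1, 2, 5, None]
control = [2, 5, 5, None, None]
t = cumulative_success(treated, 5)
c = cumulative_success(control, 5)
gap = [a - b for a, b in zip(t, c)]  # treatment-control gap after k calls
```

With these toy inputs the gap shrinks once the fifth call is made, and `marginal_gain(c)` is largest at call 5, the same kind of pattern as the jump after the first evening call discussed above.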

One nice robustness check for attrition bias that we can do here uses the number of calls it took to reach a given percentage of beneficiaries (or non-beneficiaries), as described in Behaghel et al. (2015), which David discussed in an earlier blog post here. As you can see in the figure above, we successfully interviewed 70% of non-beneficiaries after 10 calls, while it took only three to four calls to reach the same percentage of beneficiary HHs. Limiting the beneficiary sample to those reached within four calls and doing some standard bounding analysis would give us another way of assessing attrition bias. We even hope to extend this to the second stage of our tracking operation, the physical tracking described below.
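The trimming idea can be sketched as follows. This is a simplified version in the spirit of Behaghel et al. (2015), not their exact estimator and not our analysis code. It treats the number of calls needed as a ranking of how hard a household is to reach, assumes that ranking is comparable across the two groups, and keeps respondents in the higher-response group in call order until its response rate matches the other group's. Because the order among households reached on the same (marginal) call is unknown, it returns bounds by keeping either the lowest or the highest outcomes on that call. The function name, the `(call, outcome)` record format, and the toy data are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# (attempt on which the household was reached, outcome); (None, None) if never reached.
Record = Tuple[Optional[int], Optional[float]]


def _mean(xs: Sequence[float]) -> float:
    return sum(xs) / len(xs)


def call_trimmed_bounds(
    high_resp: Sequence[Record], low_resp: Sequence[Record]
) -> Tuple[float, float]:
    """Lower/upper bounds on mean(high_resp) - mean(low_resp) after trimming the
    group with the higher response rate to the other group's response rate,
    dropping its latest-reached respondents first."""
    low_outcomes = [y for c, y in low_resp if c is not None]
    if not low_outcomes:
        raise ValueError("the lower-response group has no respondents")
    # Number of high-response respondents to keep so both response rates match.
    target = round(len(low_outcomes) * len(high_resp) / len(low_resp))
    reached = sorted((c, y) for c, y in high_resp if c is not None)
    if not 1 <= target <= len(reached):
        raise ValueError("high_resp must have the higher response rate")
    k = reached[target - 1][0]  # call number at which the target is crossed
    kept = [y for c, y in reached if c < k]  # everyone reached before call k is kept
    marginal = sorted(y for c, y in reached if c == k)  # order within call k is unknown
    need = target - len(kept)  # how many call-k respondents to keep
    base = _mean(low_outcomes)
    lower = _mean(kept + marginal[:need]) - base  # keep the lowest call-k outcomes
    upper = _mean(kept + marginal[-need:]) - base  # keep the highest call-k outcomes
    return lower, upper


# Toy, made-up data: treated households are easier to reach than controls.
treated = [(1, 10.0), (1, 12.0), (2, 8.0), (2, 14.0), (None, None)]
control = [(1, 9.0), (2, 10.0), (3, 11.0), (None, None), (None, None)]
print(call_trimmed_bounds(treated, control))  # prints (0.0, 2.0)
```

When the response rates can be matched exactly at a call boundary, the two bounds coincide; the width of the interval comes only from the households reached on the marginal call.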

But we were not going to be satisfied with a 75% success rate. The second part of the pilot consisted of physically tracking the 38 HHs that could not be reached by phone, building on TRC’s ability to reach refugee HHs across the country. As described in the protocol linked above, we asked enumerators in the three pilot locations to visit the last-known address of each household that could not be contacted by phone and either interview the HH in person or obtain new contact information so that the call center could try again.

It turns out this made a nice additional dent in attrition. Of the 38 cases tracked, 22 (58%) were reached and interviewed, and one more HH was reached but declined the survey. This 58% success rate in the second phase is lower than that of another “intensive tracking exercise” I had written about here (in an earlier blog post about dealing with attrition), but, naturally, tracking a sample of refugees has its own challenges. Of the remaining 15 HHs, two had moved out of Turkey, seven had moved but no new information on their whereabouts could be obtained, and six were untrackable because they had no address in the system (which can happen when they join other households or leave the country).

So, all in all, our effective success rate rose to a remarkable 89%: (112 + 22)/150 ≈ 0.893, or roughly 0.75 + 0.58 × (1 − 0.75) ≈ 0.895 using the rounded stage-wise rates. And although this was only a pilot ahead of the endline, the experience has encouraged us and infused our TRC and WFP colleagues with refreshing (and much-needed) positive energy for the endline.
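The two-stage arithmetic can be written as a small helper. The function names are hypothetical; the counts (150 sampled, 112 reached by phone, 22 interviewed after physical tracking) are the pilot figures reported above. Computing from counts and computing from stage-wise rates give the same answer when the unrounded rates are used (p1 = 112/150 for the phone stage and p2 = 22/38 for tracking), so the small gap between 134/150 and the 0.895 obtained from 0.75 and 0.58 comes only from rounding.

```python
def effective_response_rate(n_sampled: int, reached_by_phone: int, reached_by_tracking: int) -> float:
    """Overall follow-up rate when households not reached by phone are referred to physical tracking."""
    return (reached_by_phone + reached_by_tracking) / n_sampled


def effective_rate_from_stage_rates(p_phone: float, p_tracking: float) -> float:
    """Same quantity from stage-wise rates: phone success plus tracking success among the rest."""
    return p_phone + p_tracking * (1 - p_phone)


exact = effective_response_rate(150, 112, 22)  # 134/150
rounded = effective_rate_from_stage_rates(0.75, 0.58)  # from the rounded stage rates
print(f"{exact:.3f} {rounded:.3f}")  # prints 0.893 0.895
```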

Comments and suggestions welcome. We hope that the linked protocol is useful for those of you doing similar work.
